diff --git a/.github/workflows/build_on_pr.yml b/.github/workflows/build_on_pr.yml

index 5bdadca783b3..27ab7c76aab5 100644

--- a/.github/workflows/build_on_pr.yml

+++ b/.github/workflows/build_on_pr.yml

@@ -91,7 +91,7 @@ jobs:

container:

image: hpcaitech/pytorch-cuda:2.1.0-12.1.0

options: --gpus all --rm -v /dev/shm -v /data/scratch/llama-tiny:/data/scratch/llama-tiny

- timeout-minutes: 60

+ timeout-minutes: 75

defaults:

run:

shell: bash

diff --git a/colossalai/inference/README.md b/colossalai/inference/README.md

index 0bdaf347d295..abecd48865b4 100644

--- a/colossalai/inference/README.md

+++ b/colossalai/inference/README.md

@@ -1,229 +1,194 @@

-# 🚀 Colossal-Inference

+# ⚡️ ColossalAI-Inference

+## 📚 Table of Contents

-## Table of Contents

+- [⚡️ ColossalAI-Inference](#️-colossalai-inference)

+ - [📚 Table of Contents](#-table-of-contents)

+ - [📌 Introduction](#-introduction)

+ - [🛠 Design and Implementation](#-design-and-implementation)

+ - [🕹 Usage](#-usage)

+ - [🪅 Support Matrix](#-support-matrix)

+ - [🗺 Roadmap](#-roadmap)

+ - [🌟 Acknowledgement](#-acknowledgement)

-- [💡 Introduction](#introduction)

-- [🔗 Design](#design)

-- [🔨 Usage](#usage)

- - [Quick start](#quick-start)

- - [Example](#example)

-- [📊 Performance](#performance)

-## Introduction

+## 📌 Introduction

+ColossalAI-Inference is a module which offers acceleration to the inference execution of Transformers models, especially LLMs. In ColossalAI-Inference, we leverage high-performance kernels, KV cache, paged attention, continous batching and other techniques to accelerate the inference of LLMs. We also provide simple and unified APIs for the sake of user-friendliness.

-`Colossal Inference` is a module that contains colossal-ai designed inference framework, featuring high performance, steady and easy usability. `Colossal Inference` incorporated the advantages of the latest open-source inference systems, including LightLLM, TGI, vLLM, FasterTransformer and flash attention. while combining the design of Colossal AI, especially Shardformer, to reduce the learning curve for users.

+## 🛠 Design and Implementation

-## Design

+### :book: Overview

-Colossal Inference is composed of three main components:

+ColossalAI-Inference has **4** major components, namely namely `engine`,`request handler`,`cache manager`, and `modeling`.

-1. High performance kernels and ops: which are inspired from existing libraries and modified correspondingly.

-2. Efficient memory management mechanism:which includes the key-value cache manager, allowing for zero memory waste during inference.

- 1. `cache manager`: serves as a memory manager to help manage the key-value cache, it integrates functions such as memory allocation, indexing and release.

- 2. `batch_infer_info`: holds all essential elements of a batch inference, which is updated every batch.

-3. High-level inference engine combined with `Shardformer`: it allows our inference framework to easily invoke and utilize various parallel methods.

- 1. `HybridEngine`: it is a high level interface that integrates with shardformer, especially for multi-card (tensor parallel, pipline parallel) inference:

- 2. `modeling.llama.LlamaInferenceForwards`: contains the `forward` methods for llama inference. (in this case : llama)

- 3. `policies.llama.LlamaModelInferPolicy` : contains the policies for `llama` models, which is used to call `shardformer` and segmentate the model forward in tensor parallelism way.

+- **Engine**: It orchestrates the inference step. During inference, it recives a request, calls `request handler` to schedule a decoding batch, and executes the model forward pass to perform a iteration. It returns the inference results back to the user at the end.

+- **Request Handler**: It manages requests and schedules a proper batch from exisiting requests.

+- **Cache manager** It is bound within the `request handler`, updates cache blocks and logical block tables as scheduled by the `request handler`.

+- **Modelling**: We rewrite the model and layers of LLMs to simplify and optimize the forward pass for inference.

-## Architecture of inference:

+A high-level view of the inter-component interaction is given below. We would also introduce more details in the next few sections.

-In this section we discuss how the colossal inference works and integrates with the `Shardformer` . The details can be found in our codes.

+

+

+

+

-

+### :mailbox_closed: Engine

+Engine is designed as the entry point where the user kickstarts an inference loop. User can easily instantialize an inference engine with the inference configuration and execute requests. The engine object will expose the following APIs for inference:

-## Roadmap of our implementation

+- `generate`: main function which handles inputs, performs inference and returns outputs

+- `add_request`: add request to the waiting list

+- `step`: perform one decoding iteration. The `request handler` first schedules a batch to do prefill/decoding. Then, it invokes a model to generate a batch of token and afterwards does logit processing and sampling, checks and decodes finished requests.

-- [x] Design cache manager and batch infer state

-- [x] Design TpInference engine to integrates with `Shardformer`

-- [x] Register corresponding high-performance `kernel` and `ops`

-- [x] Design policies and forwards (e.g. `Llama` and `Bloom`)

- - [x] policy

- - [x] context forward

- - [x] token forward

- - [x] support flash-decoding

-- [x] Support all models

- - [x] Llama

- - [x] Llama-2

- - [x] Bloom

- - [x] Chatglm2

-- [x] Quantization

- - [x] GPTQ

- - [x] SmoothQuant

-- [ ] Benchmarking for all models

+### :game_die: Request Handler

-## Get started

+Request handler is responsible for managing requests and scheduling a proper batch from exisiting requests. According to the existing work and experiments, we do believe that it is beneficial to increase the length of decoding sequences. In our design, we partition requests into three priorities depending on their lengths, the longer sequences are first considered.

-### Installation

+

+

+

+

-```bash

-pip install -e .

-```

-

-### Requirements

-

-Install dependencies.

-

-```bash

-pip install -r requirements/requirements-infer.txt

-

-# if you want use smoothquant quantization, please install torch-int

-git clone --recurse-submodules https://github.com/Guangxuan-Xiao/torch-int.git

-cd torch-int

-git checkout 65266db1eadba5ca78941b789803929e6e6c6856

-pip install -r requirements.txt

-source environment.sh

-bash build_cutlass.sh

-python setup.py install

-```

+### :radio: KV cache and cache manager

-### Docker

+We design a unified block cache and cache manager to allocate and manage memory. The physical memory is allocated before decoding and represented by a logical block table. During decoding process, cache manager administrates the physical memory through `block table` and other components(i.e. engine) can focus on the lightweight `block table`. More details are given below.

-You can use docker run to use docker container to set-up environment

+- `cache block`: We group physical memory into different memory blocks. A typical cache block is shaped `(num_kv_heads, head_size, block_size)`. We determine the block number beforehand. The memory allocation and computation are executed at the granularity of memory block.

+- `block table`: Block table is the logical representation of cache blocks. Concretely, a block table of a single sequence is a 1D tensor, with each element holding a block ID. Block ID of `-1` means "Not Allocated". In each iteration, we pass through a batch block table to the corresponding model.

-```

-# env: python==3.8, cuda 11.6, pytorch == 1.13.1 triton==2.0.0.dev20221202, vllm kernels support, flash-attention-2 kernels support

-docker pull hpcaitech/colossalai-inference:v2

-docker run -it --gpus all --name ANY_NAME -v $PWD:/workspace -w /workspace hpcaitech/colossalai-inference:v2 /bin/bash

-

-# enter into docker container

-cd /path/to/ColossalAI

-pip install -e .

-

-```

+

+

+

+

+ Example of Batch Block Table

+

+

-## Usage

-### Quick start

-example files are in

+### :railway_car: Modeling

-```bash

-cd ColossalAI/examples

-python hybrid_llama.py --path /path/to/model --tp_size 2 --pp_size 2 --batch_size 4 --max_input_size 32 --max_out_len 16 --micro_batch_size 2

-```

+Modeling contains models and layers, which are hand-crafted for better performance easier usage. Deeply integrated with `shardformer`, we also construct policy for our models. In order to minimize users' learning costs, our models are aligned with [Transformers](https://github.com/huggingface/transformers)

+## 🕹 Usage

+### :arrow_right: Quick Start

-### Example

```python

-# import module

-from colossalai.inference import CaiInferEngine

+import torch

+import transformers

import colossalai

-from transformers import LlamaForCausalLM, LlamaTokenizer

+from colossalai.inference import InferenceEngine, InferenceConfig

+from pprint import pprint

-#launch distributed environment

colossalai.launch_from_torch()

-# load original model and tokenizer

-model = LlamaForCausalLM.from_pretrained("/path/to/model")

-tokenizer = LlamaTokenizer.from_pretrained("/path/to/model")

-

-# generate token ids

-input = ["Introduce a landmark in London","Introduce a landmark in Singapore"]

-data = tokenizer(input, return_tensors='pt')

-

-# set parallel parameters

-tp_size=2

-pp_size=2

-max_output_len=32

-micro_batch_size=1

-

-# initial inference engine

-engine = CaiInferEngine(

- tp_size=tp_size,

- pp_size=pp_size,

- model=model,

- max_output_len=max_output_len,

- micro_batch_size=micro_batch_size,

-)

-

-# inference

-output = engine.generate(data)

-

-# get results

-if dist.get_rank() == 0:

- assert len(output[0]) == max_output_len, f"{len(output)}, {max_output_len}"

-

+# Step 1: create a model in "transformers" way

+model_path = "lmsys/vicuna-7b-v1.3"

+model = transformers.LlamaForCausalLM.from_pretrained(model_path).cuda()

+tokenizer = transformers.AutoTokenizer.from_pretrained(model_path)

+

+# Step 2: create an inference_config

+inference_config = InferenceConfig(

+ dtype=torch.float16,

+ max_batch_size=4,

+ max_input_len=1024,

+ max_output_len=512,

+ use_cuda_kernel=True,

+ use_cuda_graph=False, # Turn on if you want to use CUDA Graph to accelerate inference

+ )

+

+# Step 3: create an engine with model and config

+engine = InferenceEngine(model, tokenizer, inference_config, verbose=True)

+

+# Step 4: try inference

+prompts = ['Who is the best player in the history of NBA?']

+response = engine.generate(prompts)

+pprint(response)

```

-## Performance

-

-### environment:

-

-We conducted multiple benchmark tests to evaluate the performance. We compared the inference `latency` and `throughputs` between `colossal-inference` and original `hugging-face torch fp16`.

-

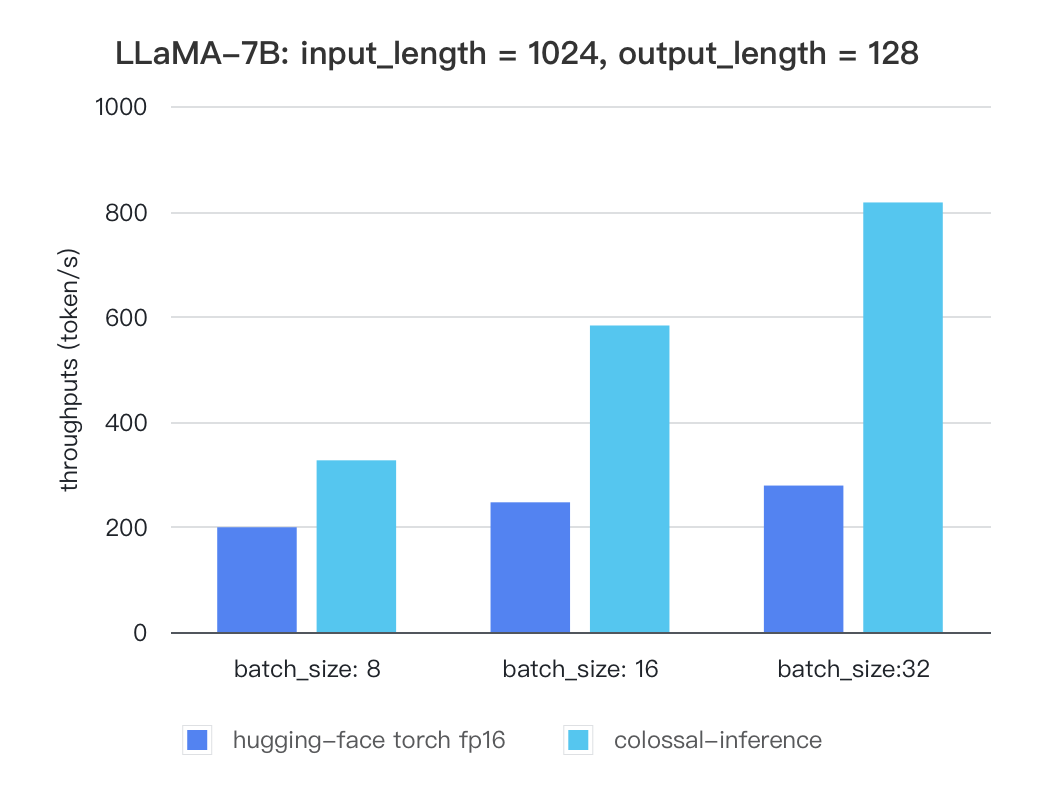

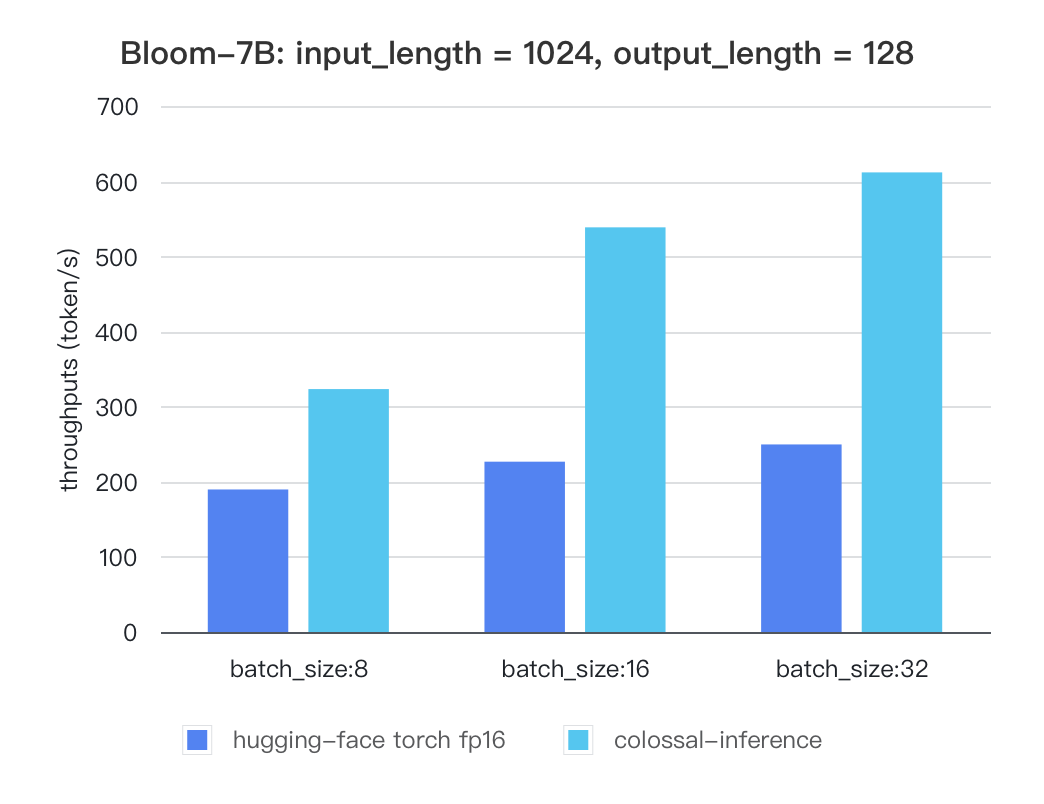

-For various models, experiments were conducted using multiple batch sizes under the consistent model configuration of `7 billion(7b)` parameters, `1024` input length, and 128 output length. The obtained results are as follows (due to time constraints, the evaluation has currently been performed solely on the `A100` single GPU performance; multi-GPU performance will be addressed in the future):

-

-### Single GPU Performance:

-

-Currently the stats below are calculated based on A100 (single GPU), and we calculate token latency based on average values of context-forward and decoding forward process, which means we combine both of processes to calculate token generation times. We are actively developing new features and methods to further optimize the performance of LLM models. Please stay tuned.

-

-### Tensor Parallelism Inference

-

-##### Llama

+### :bookmark: Customize your inference engine

+Besides the basic quick-start inference, you can also customize your inference engine via modifying config or upload your own model or decoding components (logit processors or sampling strategies).

-| batch_size | 8 | 16 | 32 |

-|:-----------------------:|:------:|:------:|:------:|

-| hugging-face torch fp16 | 199.12 | 246.56 | 278.4 |

-| colossal-inference | 326.4 | 582.72 | 816.64 |

+#### Inference Config

+Inference Config is a unified api for generation process. You can define the value of args to control the generation, like `max_batch_size`,`max_output_len`,`dtype` to decide the how many sequences can be handled at a time, and how many tokens to output. Refer to the source code for more detail.

-

-

-#### Bloom

-

-| batch_size | 8 | 16 | 32 |

-|:-----------------------:|:------:|:------:|:------:|

-| hugging-face torch fp16 | 189.68 | 226.66 | 249.61 |

-| colossal-inference | 323.28 | 538.52 | 611.64 |

-

-

-

-

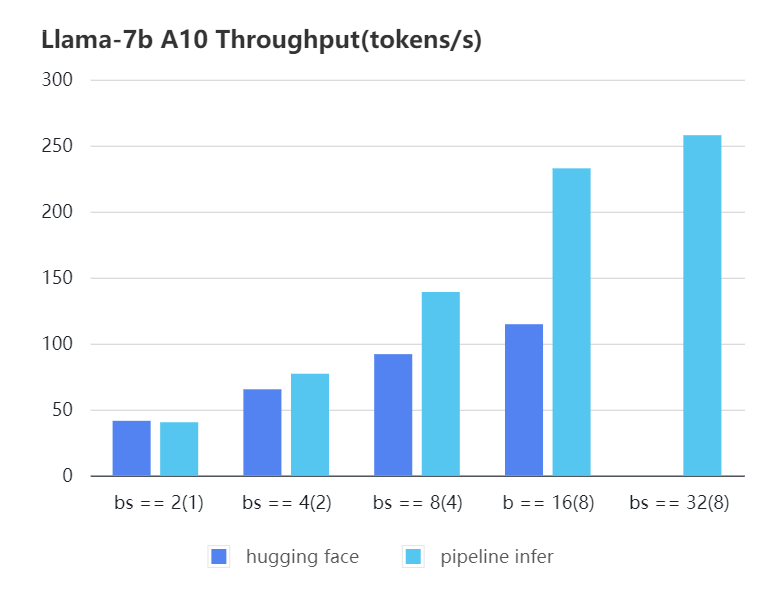

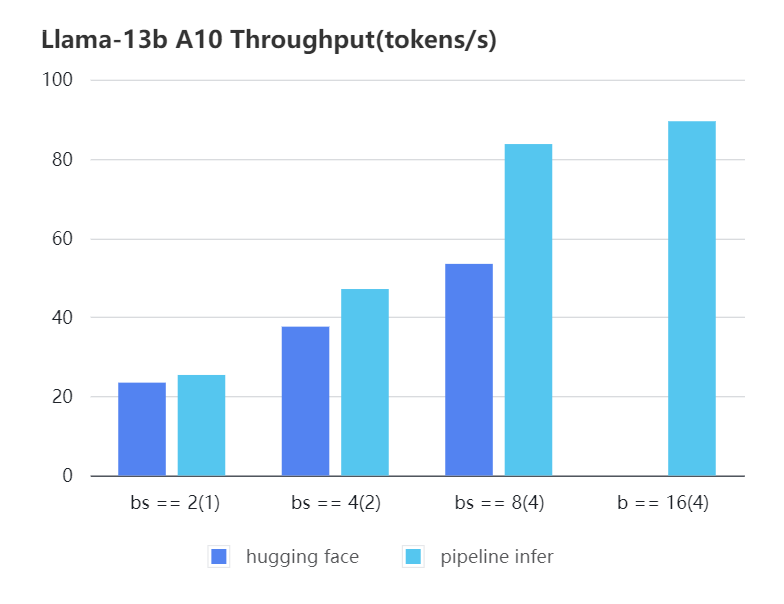

-### Pipline Parallelism Inference

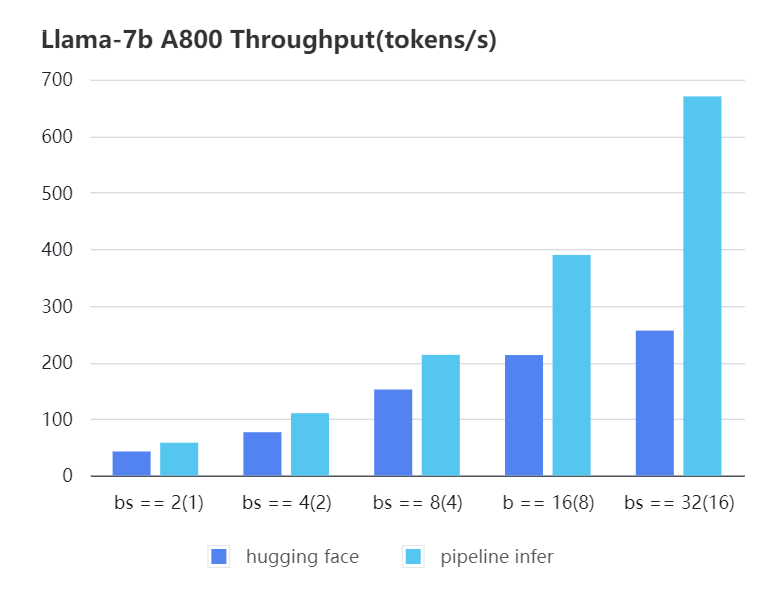

-We conducted multiple benchmark tests to evaluate the performance. We compared the inference `latency` and `throughputs` between `Pipeline Inference` and `hugging face` pipeline. The test environment is 2 * A10, 20G / 2 * A800, 80G. We set input length=1024, output length=128.

-

-

-#### A10 7b, fp16

-

-| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(8) | 32(16) |

-|:----------------------------:|:-----:|:-----:|:------:|:------:|:------:|:------:|

-| Pipeline Inference | 40.35 | 77.10 | 139.03 | 232.70 | 257.81 | OOM |

-| Hugging Face | 41.43 | 65.30 | 91.93 | 114.62 | OOM | OOM |

-

-

-

-

-#### A10 13b, fp16

-

-| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(4) |

-|:----------------------------:|:-----:|:-----:|:-----:|:-----:|

-| Pipeline Inference | 25.39 | 47.09 | 83.7 | 89.46 |

-| Hugging Face | 23.48 | 37.59 | 53.44 | OOM |

-

-

-

-

-#### A800 7b, fp16

-

-| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

-|:----------------------------:|:-----:|:------:|:------:|:------:|:------:|

-| Pipeline Inference | 57.97 | 110.13 | 213.33 | 389.86 | 670.12 |

-| Hugging Face | 42.44 | 76.5 | 151.97 | 212.88 | 256.13 |

-

-

-

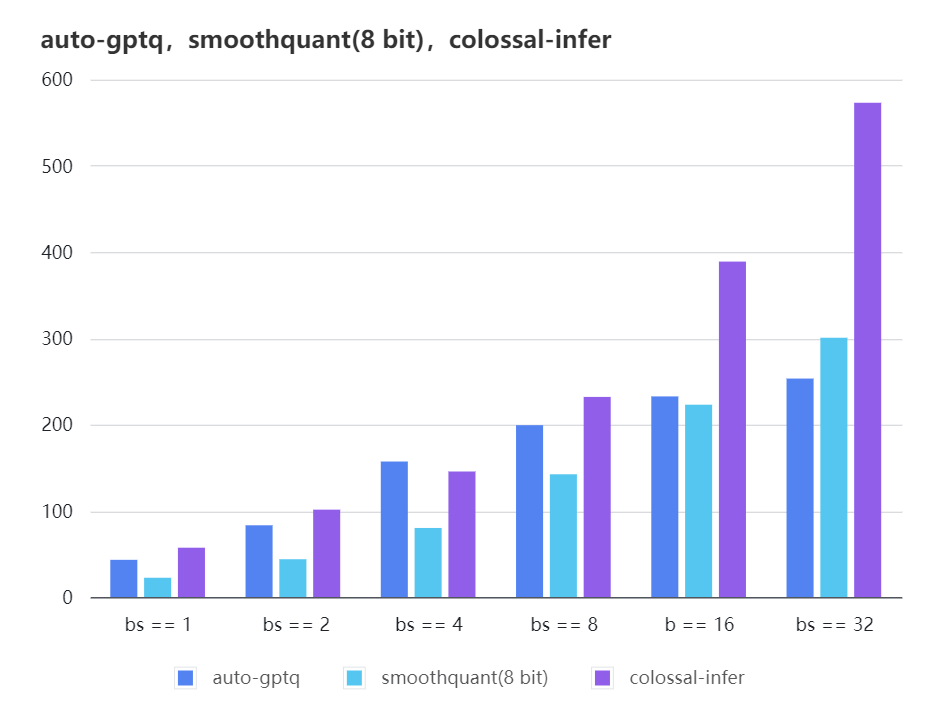

-### Quantization LLama

-

-| batch_size | 8 | 16 | 32 |

-|:-------------:|:------:|:------:|:------:|

-| auto-gptq | 199.20 | 232.56 | 253.26 |

-| smooth-quant | 142.28 | 222.96 | 300.59 |

-| colossal-gptq | 231.98 | 388.87 | 573.03 |

-

-

+#### Generation Config

+In colossal-inference, Generation config api is inherited from [Transformers](https://github.com/huggingface/transformers). Usage is aligned. By default, it is automatically generated by our system and you don't bother to construct one. If you have such demand, you can also create your own and send it to your engine.

+#### Logit Processors

+The `Logit Processosr` receives logits and return processed results. You can take the following step to make your own.

+```python

+@register_logit_processor("name")

+def xx_logit_processor(logits, args):

+ logits = do_some_process(logits)

+ return logits

+```

-The results of more models are coming soon!

+#### Sampling Strategies

+We offer 3 main sampling strategies now (i.e. `greedy sample`, `multinomial sample`, `beam_search sample`), you can refer to [sampler](/ColossalAI/colossalai/inference/sampler.py) for more details. We would strongly appreciate if you can contribute your varities.

+

+## 🪅 Support Matrix

+

+| Model | KV Cache | Paged Attention | Kernels | Tensor Parallelism | Speculative Decoding |

+| - | - | - | - | - | - |

+| Llama | ✅ | ✅ | ✅ | 🔜 | ✅ |

+

+

+Notations:

+- ✅: supported

+- ❌: not supported

+- 🔜: still developing, will support soon

+

+## 🗺 Roadmap

+

+- [x] KV Cache

+- [x] Paged Attention

+- [x] High-Performance Kernels

+- [x] Llama Modelling

+- [x] User Documentation

+- [x] Speculative Decoding

+- [ ] Tensor Parallelism

+- [ ] Beam Search

+- [ ] Early stopping

+- [ ] Logger system

+- [ ] SplitFuse

+- [ ] Continuous Batching

+- [ ] Online Inference

+- [ ] Benchmarking

+

+## 🌟 Acknowledgement

+

+This project was written from scratch but we learned a lot from several other great open-source projects during development. Therefore, we wish to fully acknowledge their contribution to the open-source community. These projects include

+

+- [vLLM](https://github.com/vllm-project/vllm)

+- [LightLLM](https://github.com/ModelTC/lightllm)

+- [flash-attention](https://github.com/Dao-AILab/flash-attention)

+

+If you wish to cite relevant research papars, you can find the reference below.

+

+```bibtex

+# vllm

+@inproceedings{kwon2023efficient,

+ title={Efficient Memory Management for Large Language Model Serving with PagedAttention},

+ author={Woosuk Kwon and Zhuohan Li and Siyuan Zhuang and Ying Sheng and Lianmin Zheng and Cody Hao Yu and Joseph E. Gonzalez and Hao Zhang and Ion Stoica},

+ booktitle={Proceedings of the ACM SIGOPS 29th Symposium on Operating Systems Principles},

+ year={2023}

+}

+

+# flash attention v1 & v2

+@inproceedings{dao2022flashattention,

+ title={Flash{A}ttention: Fast and Memory-Efficient Exact Attention with {IO}-Awareness},

+ author={Dao, Tri and Fu, Daniel Y. and Ermon, Stefano and Rudra, Atri and R{\'e}, Christopher},

+ booktitle={Advances in Neural Information Processing Systems},

+ year={2022}

+}

+@article{dao2023flashattention2,

+ title={Flash{A}ttention-2: Faster Attention with Better Parallelism and Work Partitioning},

+ author={Dao, Tri},

+ year={2023}

+}

+

+# we do not find any research work related to lightllm

+```

diff --git a/colossalai/inference/__init__.py b/colossalai/inference/__init__.py

index a95205efaa78..5f2effca65a0 100644

--- a/colossalai/inference/__init__.py

+++ b/colossalai/inference/__init__.py

@@ -1,4 +1,4 @@

-from .engine import InferenceEngine

-from .engine.policies import BloomModelInferPolicy, ChatGLM2InferPolicy, LlamaModelInferPolicy

+from .config import InferenceConfig

+from .core import InferenceEngine

-__all__ = ["InferenceEngine", "LlamaModelInferPolicy", "BloomModelInferPolicy", "ChatGLM2InferPolicy"]

+__all__ = ["InferenceConfig", "InferenceEngine"]

diff --git a/colossalai/inference/batch_bucket.py b/colossalai/inference/batch_bucket.py

new file mode 100644

index 000000000000..f8571c0ca030

--- /dev/null

+++ b/colossalai/inference/batch_bucket.py

@@ -0,0 +1,523 @@

+from typing import Callable, List, Optional, Tuple, Union

+

+import torch

+

+from colossalai.inference.struct import Sequence

+from colossalai.utils import get_current_device

+

+

+class BatchBucket:

+ """Container for a batch of Sequences, which is used to manage the batch of sequences.

+

+ Attrs:

+ _sequences_dict (Dict[int, Sequence]): Map sequence uid to sequence struct

+ seq_uid -> Sequence

+ _sequences_indexes (Dict[int, int]): Map sequence uid to index in the batch

+ seq_uid -> index in the batch (indexing used in sequence_lengths and block_tables)

+ _sequence_lengths (torch.Tensor): Length of each sequence in the batch.

+ The size of the tensor is (max_batch_size,)

+ _block_tables (torch.Tensor): Block table of each sequence in the batch

+ The size of the tensor is (max_batch_size, max_blocks_per_seq)

+ """

+

+ def __init__(

+ self,

+ num_heads,

+ head_dim,

+ max_batch_size,

+ max_length,

+ block_size,

+ kv_max_split_num,

+ fd_interm_tensor=None,

+ device=None,

+ dtype=torch.float16,

+ ):

+ self.num_heads = num_heads

+ self.head_dim = head_dim

+ self.max_batch_size = max_batch_size

+ self.max_length = max_length # in + out len

+ self.block_size = block_size

+ self.kv_max_split_num = kv_max_split_num # Hint used for flash decoding

+ self.fd_interm_tensor = fd_interm_tensor

+ self.device = device or get_current_device()

+ self.dtype = dtype

+

+ self._use_spec_dec = False

+ self._num_tokens_to_verify = None

+

+ self._current_batch_size = 0

+ self._sequences_dict = dict()

+ self._sequences_indexes = dict() # deque(maxlen=self.max_batch_size)

+ self._sequence_lengths = torch.zeros((self.max_batch_size,), dtype=torch.int32)

+ self._sequence_lengths_helper = torch.zeros_like(self._sequence_lengths)

+ max_blocks_per_seq = (self.max_length + block_size - 1) // block_size

+ self._block_tables = torch.full((self.max_batch_size, max_blocks_per_seq), -1, dtype=torch.int32)

+ self._block_tables_helper = torch.full_like(self._block_tables, -1)

+

+ @property

+ def is_empty(self):

+ return self._current_batch_size == 0

+

+ @property

+ def current_batch_size(self):

+ return self._current_batch_size

+

+ def __len__(self):

+ return self._current_batch_size

+

+ @property

+ def available_batch_size(self):

+ return self.max_batch_size - self._current_batch_size

+

+ @property

+ def block_tables(self):

+ return self._block_tables

+

+ @property

+ def seq_lengths(self):

+ return self._sequence_lengths

+

+ @property

+ def seqs_ids(self):

+ return list(self._sequences_dict.keys())

+

+ @property

+ def seqs_li(self):

+ return list(self._sequences_dict.values())

+

+ @property

+ def is_compact(self):

+ assert len(self._sequences_dict) == len(self._sequences_indexes), "BatchBucket indexing is not consistent"

+ return (

+ len(self._sequences_dict)

+ == torch.nonzero(self._sequence_lengths).view(-1).numel()

+ == torch.nonzero(self._block_tables[:, 0] >= 0).numel()

+ )

+

+ @property

+ def use_spec_dec(self) -> bool:

+ return self._use_spec_dec

+

+ @property

+ def num_tokens_to_verify(self) -> int:

+ return self._num_tokens_to_verify

+

+ @property

+ def batch_token_ids(self) -> List[List[int]]:

+ out = []

+ for seq in self.seqs_li:

+ out.append(seq.input_token_id + seq.output_token_id)

+ return out

+

+ def set_use_spec_dec(self, num_tokens_to_verify: int = 5) -> None:

+ """Set batch bucket to use speculatvie decoding.

+ This will notify the adjust the lengths of inputs during modeling,

+ and let the main model verifies tokens in parallel.

+ """

+ self._use_spec_dec = True

+ self._num_tokens_to_verify = num_tokens_to_verify

+

+ def reset_use_spec_dec(self) -> None:

+ """Reset the usage of speculative decoding for the batch bucket"""

+ self._use_spec_dec = False

+ self._num_tokens_to_verify = None

+

+ def _make_compact(self) -> None:

+ # Clean and Compress the batch based on its sequences dict.

+ # Namely,compress sequences to the front and clean the seq lengths and block tables tensors.

+ # NOTE Prevent calling this method multiple times in a single step

+ if self.is_compact:

+ return

+ valid_seq_ids = self._sequences_dict.keys()

+ valid_num = len(valid_seq_ids)

+ valid_indexes = [self._sequences_indexes[seq_id] for seq_id in valid_seq_ids]

+ assert valid_num == len(self._sequences_indexes), "BatchBucket indexing is not consistent"

+ self._sequence_lengths_helper[:valid_num] = self._sequence_lengths[valid_indexes]

+ self._sequence_lengths[:] = self._sequence_lengths_helper[:]

+ self._block_tables_helper[:valid_num, :] = self.block_tables[valid_indexes]

+ self.block_tables[:] = self._block_tables_helper[:]

+ new_idx = 0

+ for seq_id in valid_seq_ids:

+ self._sequences_indexes[seq_id] = new_idx

+ new_idx += 1

+ self._sequence_lengths_helper.fill_(0)

+ self._block_tables_helper.fill_(-1)

+ self._current_batch_size = valid_num

+

+ def add_seq(

+ self,

+ seq: Sequence,

+ alloc_block_table: torch.Tensor = None,

+ alloc_block_table_fn: Callable[[torch.Tensor, int], None] = None,

+ ) -> Union[torch.Tensor, None]:

+ """Add a single sequence to the batch.

+ User could opt to provide either a block table or a function to allocate block tables.

+

+ Args:

+ seq (Sequence): The sequence to be added to the batch

+ alloc_block_table (torch.Tensor): The block tables to be copied and used for the sequence

+ alloc_block_table_fn (Callable[[torch.Tensor, int], None]): The function to allocate blocks for the sequence,

+ which is expected to reserve blocks and update status of kv-cache manager.

+

+ Returns:

+ block_table (torch.Tensor): The block table of the added sequence, used for block allocation in kv-cache manager.

+ None if the sequence cannot be added.

+ """

+ block_table = None

+ # TODO might consider sorting by length

+ if self._current_batch_size < self.max_batch_size:

+ self._sequences_dict[seq.request_id] = seq

+ self._sequences_indexes[seq.request_id] = self._current_batch_size

+ self._sequence_lengths[self._current_batch_size] = seq.sentence_len

+ # NOTE the added seq still require block table allocation by kvcache manager

+ block_table = self._block_tables[self._current_batch_size - 1]

+ if alloc_block_table is not None:

+ # copy block ids from provided block tables

+ self._block_tables[self._current_batch_size - 1] = alloc_block_table

+ elif alloc_block_table_fn:

+ alloc_block_table_fn(block_table, self._sequence_lengths[self._current_batch_size - 1].item())

+ self._current_batch_size += 1

+ return block_table

+

+ def add_seqs(

+ self,

+ seqs: List[Sequence],

+ alloc_block_tables: torch.Tensor = None,

+ alloc_block_tables_fn: Callable[[torch.Tensor, torch.Tensor], None] = None,

+ ) -> Union[torch.Tensor, None]:

+ """Add a list of sequences to the batch.

+ User could opt to provide either block tables or a function to allocate block tables.

+

+ Args:

+ seqs (List[Sequence]): The sequences to be added to the batch

+ alloc_block_tables (torch.Tensor): The block tables to be copied and used for the sequence

+ alloc_block_table_fn (Callable[[torch.Tensor, torch.Tensor], None]): The function to allocate blocks for multiple sequences,

+ which is expected to reserve blocks and update status of kv-cache manager.

+

+ Returns:

+ block_tables (torch.Tensor): The block tables of the added sequences, used for block allocation in kv-cache manager.

+ None if the sequences cannot be added.

+ """

+

+ assert (

+ alloc_block_tables is None or alloc_block_tables_fn is None

+ ), "`alloc_block_tables` and `alloc_block_tables_fn` cannot be provided at the same time"

+

+ num_seqs_to_add = min(self.max_batch_size - self._current_batch_size, len(seqs))

+ block_tables = None

+ if num_seqs_to_add > 0:

+ for i, seq in enumerate(seqs[:num_seqs_to_add]):

+ self._sequences_dict[seq.request_id] = seq

+ self._sequences_indexes[seq.request_id] = self._current_batch_size + i

+ # TODO external (rename): modify Sequence.sentence_len to seq_len

+ self._sequence_lengths[

+ self._current_batch_size : self._current_batch_size + num_seqs_to_add

+ ] = torch.tensor([seq.sentence_len for seq in seqs[:num_seqs_to_add]], dtype=torch.int32)

+ # NOTE block tables to be updated by kvcache manager

+ block_tables = self._block_tables[self._current_batch_size : self._current_batch_size + num_seqs_to_add]

+ if alloc_block_tables is not None:

+ # copy block ids from provided block tables

+ self._block_tables[

+ self._current_batch_size : self._current_batch_size + num_seqs_to_add

+ ] = alloc_block_tables

+ elif alloc_block_tables_fn:

+ alloc_block_tables_fn(

+ block_tables,

+ self._sequence_lengths[self._current_batch_size : self._current_batch_size + num_seqs_to_add],

+ )

+

+ self._current_batch_size += num_seqs_to_add

+ seqs[:] = seqs[num_seqs_to_add:]

+

+ return block_tables

+

+ def pop_seq_update_batch(

+ self, request_id: int, free_block_table_fn: Callable[[torch.Tensor], None] = None

+ ) -> Tuple[Sequence, Union[torch.Tensor, None]]:

+ """Pop a single sequence by id from the batch, and update the batch bucket status.

+

+ Args:

+ request_id (int): The uid of the sequence

+ free_block_table_fn (Callable): The function to free the block table of a sequence,

+ if not provided, then we have to release the block table manually after calling this method

+

+ Returns:

+ A tuple of: seq (Sequence): The target sequence

+ and block_table (torch.Tensor): block table of the target sequence indicating corresponding blocks,

+ none if the sequence is not found or free_block_table_fn is provided.

+ """

+ seq: Sequence = self._sequences_dict.get(request_id)

+ block_table = None

+ if seq is not None:

+ assert request_id in self._sequences_indexes, "Inconsistency in BatchBucket indexing"

+ self._sequences_dict.pop(request_id)

+ seq_b_idx = self._sequences_indexes.get(request_id)

+

+ if self.current_batch_size > 1:

+ # replace seq length of the target seq with that of the last seq in the batch

+ last_seq_b_idx = self.current_batch_size - 1

+ last_seq_id = next(

+ (uid for uid, index in self._sequences_indexes.items() if index == last_seq_b_idx),

+ None,

+ )

+ assert last_seq_id is not None

+ self._sequences_indexes[last_seq_id] = seq_b_idx

+ self._sequence_lengths[seq_b_idx] = self._sequence_lengths[last_seq_b_idx]

+ self._sequence_lengths[last_seq_b_idx].fill_(0)

+ # free the block table of the seq, or return a copy of the block table (to be processed outside)

+ if free_block_table_fn:

+ free_block_table_fn(self._block_tables[seq_b_idx])

+ else:

+ block_table = self._block_tables[seq_b_idx].detach().clone()

+ # replace block table of the target seq with that of the last seq in the batch

+ self._block_tables[seq_b_idx] = self._block_tables[last_seq_b_idx]

+ self._block_tables[last_seq_b_idx].fill_(-1)

+ else:

+ if free_block_table_fn:

+ free_block_table_fn(self._block_tables[0])

+ else:

+ block_table = self._block_tables[0].detach().clone()

+ self._sequence_lengths[0].fill_(0)

+ self._block_tables[0].fill_(-1)

+ self._sequences_indexes.pop(request_id)

+ self._current_batch_size -= 1

+

+ return seq, block_table

+

+ def pop_seqs(

+ self, request_ids: List[int], free_block_table_fn: Callable[[torch.Tensor], None] = None

+ ) -> Tuple[List[Sequence], List[torch.Tensor]]:

+ """Iteratively pop a list of sequences by uid.

+

+ Args:

+ request_ids (List[int]): The uids of the sequences

+ free_block_table_fn (Callable): The function to free the block table of a sequence,

+ if not provided, then we have to release the block table manually after calling this method

+ Returns:

+ A tuple of: seqs (List[Sequence]): The target sequences

+ and block_tables (List[torch.Tensor]): block tables of the target sequences indicating corresponding blocks

+ """

+ seqs = []

+ block_tables = []

+ for request_id in request_ids:

+ seq, block_table = self.pop_seq_update_batch(request_id, free_block_table_fn)

+ if seq is not None:

+ seqs.append(seq)

+ if block_table is not None:

+ block_tables.append(block_table)

+ return seqs, block_tables

+

+ def pop_n_seqs(

+ self, n: int, free_block_table_fn: Callable[[torch.Tensor], None] = None

+ ) -> Tuple[List[Sequence], List[torch.Tensor]]:

+ """Pop the first n sequences in the batch (FIFO).

+ If n is greater than the current batch szie, pop all the sequences in the batch.

+

+ Args:

+ n (int): The number of sequences to pop out

+ free_block_table_fn (Callable): The function to free the block table of a single sequence

+ Returns:

+ A tuple of: seqs (List[Sequence]): The target sequences,

+ and block_tables (List[torch.Tensor]): block tables of the target sequences indicating corresponding blocks

+ """

+ # NOTE Prevent calling this method multiple times in a single step

+ seqs = []

+ block_tables = []

+ n = min(n, self.current_batch_size)

+ seq_ids = list(self._sequences_dict.keys())[:n]

+ for seq_id in seq_ids:

+ seq = self._sequences_dict.pop(seq_id)

+ seq_b_idx = self._sequences_indexes.pop(seq_id)

+ if free_block_table_fn:

+ free_block_table_fn(self.block_tables[seq_b_idx])

+ else:

+ block_tables.append(self.block_tables[seq_b_idx].detach().clone())

+ seqs.append(seq)

+ if not self.is_compact:

+ self._make_compact()

+

+ return seqs, block_tables

+

+ def pop_finished(

+ self, free_block_table_fn: Callable[[torch.Tensor], None] = None

+ ) -> Tuple[List[Sequence], List[torch.Tensor]]:

+ """Pop finished sequences in the batch and a list of block tables of the finished sequences,

+ if free_block_table_fn is not provided.

+

+ Args:

+ free_block_table_fn (Callable): The function to free the block table of a single sequence

+ Returns:

+ A tuple of: finished_seqs (List[Sequence]): The finished sequences,

+ and finished_block_tables (List[torch.Tensor]): block tables of the finished sequences.

+ """

+ finished_seqs = []

+ finished_block_tables = []

+ for seq in self._sequences_dict.values():

+ if seq.check_finish():

+ finished_seqs.append(seq)

+ # Use `pop_seq_update_batch`` to update the batch status for just a few of finished seqs,

+ # otherwise, pop seqs directly and then call `_make_compact` to compress the batch.

+ # For now, the performance difference is not significant, so we use the frist method to pop seqs.

+ # Precise evaluations to be done.

+ for seq in finished_seqs:

+ _, block_table = self.pop_seq_update_batch(seq.request_id, free_block_table_fn)

+ if block_table is not None:

+ finished_block_tables.append(block_table)

+

+ return finished_seqs, finished_block_tables

+

+ # TODO arg type not support beam search sampling yet

+ def append_batch_tokens(self, tokens: torch.Tensor) -> None:

+ """Append a batch of tokens to the sequences in the batch"""

+ assert self.current_batch_size == tokens.size(0), "Batch size mismatch"

+

+ if self.current_batch_size > 0:

+ tokens = tokens.tolist()

+ for seq_id, seq in self._sequences_dict.items():

+ index_in_b = self._sequences_indexes[seq_id]

+ curr_tokens = tokens[index_in_b]

+ if not isinstance(curr_tokens, list):

+ curr_tokens = [curr_tokens]

+ seq.output_token_id += curr_tokens

+ seq.check_finish()

+ self._sequence_lengths[: self.current_batch_size] += 1

+

+ def revoke_batch_tokens(self, n_tokens: int, n_seqs: int = 1) -> None:

+ """Revoke the last n output tokens of the sequences in the batch

+

+ Args:

+ n_tokens (int): The number of output tokens to revoke from each sequence.

+ It does not count in the context tokens (input tokens).

+ n_seqs (int): The first n sequences to revoke tokens from. Defaults to 1.

+ For now, speculative decoding only supports batch size 1.

+ """

+ if n_tokens >= 1:

+ seqs_iter = iter(self._sequences_dict.items())

+ for _ in range(n_seqs):

+ seq_id, seq = next(seqs_iter)

+ assert seq.output_len >= n_tokens, "Revoking len exceeds the current output len of the sequence"

+ seq.output_token_id = seq.output_token_id[:-n_tokens]

+ seq.revoke_finished_status()

+ self._sequence_lengths[self._sequences_indexes[seq_id]] -= n_tokens

+

+ def clear(self, free_block_tables_fn: Optional[Callable[[torch.Tensor], None]]) -> List[int]:

+ """Clear all the sequences in the batch.

+

+ free_block_tables_fn (Optional[Callable]): The function to free the block tables of all the sequences in a batch

+ """

+ seqs = list(self._sequences_dict.values())

+ self._sequences_dict.clear()

+ self._sequences_indexes.clear()

+ if free_block_tables_fn:

+ free_block_tables_fn(self.block_tables, self._current_batch_size)

+ self._block_tables.fill_(-1)

+ self._sequence_lengths.fill_(0)

+ self._current_batch_size = 0

+ return seqs

+

+ def merge(self, other: "BatchBucket") -> List[int]:

+ """Merge the sequences in the other batch into the current batch.

+ Merge as possible as the current batch can, if it does not have available spaces

+ holding all the sequences in the other batch

+

+ Usage:

+ > New incoming sequence added to prefil batch

+ prefill bb curr batch size < prefil_ratio * prefill bb max batch size

+ > New incoming sequence added to prefil batch

+ prefill bb curr batch size == prefil_ratio * prefill bb max batch size

+ > Pause Decoding

+ > Prefill

+ > Move sequences in prefill bb => decoding bb

+ > Put back the out-of-volume sequences into the running pool

+

+ Returns:

+ unmerged_ids (List[int]): a list of sequence uids that are not merged into the current batch

+ """

+ unmerged_ids = []

+ num_seqs_to_merge = min(self.available_batch_size, other.current_batch_size)

+ if num_seqs_to_merge > 0:

+ seqs, block_tables_li = other.pop_n_seqs(num_seqs_to_merge)

+ block_tables = torch.stack(block_tables_li)

+ self.add_seqs(seqs, alloc_block_tables=block_tables)

+ unmerged_ids = other.seqs_ids

+

+ return unmerged_ids

+

+ ########## The following methods are expected to be used in modeling ###########

+

+ # For compatibility.

+ # NOTE: This is an assumption way to determine the stage of the batch.

+ @property

+ def is_prompts(self) -> bool:

+ assert len(self._sequences_dict) > 0, "No sequence in the batch"

+ first_seq = next(iter(self._sequences_dict.values()))

+ if first_seq.output_len == 0:

+ return True

+ return False

+

+ def get_1D_inputs_spec_dec(self, n: int) -> torch.Tensor:

+ # Used for main model verification in **Decoding Stage**

+ # `n` is the number of tokens to be verified,

+ # and so that prepare the last `n` tokens of each sequence as the inputs

+ assert len(self._sequences_dict) > 0, "No sequence in the batch"

+ assert all(

+ seq.output_len >= n for seq in self._sequences_dict.values()

+ ), "Sequence output tokens must be greater than or equal to the number of tokens to be verified."

+ out_li = []

+ seq_ids = sorted(self._sequences_indexes.keys(), key=lambda x: self._sequences_indexes[x])

+ for seq_id in seq_ids:

+ seq: Sequence = self._sequences_dict[seq_id]

+ out_li.extend(seq.output_token_id[-n:])

+ return torch.tensor(out_li, dtype=torch.long, device=self.device)

+

+ # For compatibility

+ def get_1D_inputs(self) -> torch.Tensor:

+ assert len(self._sequences_dict) > 0, "No sequence in the batch"

+ first_seq = next(iter(self._sequences_dict.values())) # not exactly the first sequence

+ if first_seq.output_len == 0:

+ # Assume prefill stage

+ assert all(

+ seq.output_len == 0 for seq in self._sequences_dict.values()

+ ), "Sequence stage (Prefill/Decoding) must be the same in the batch"

+ out_li = []

+ seq_ids = sorted(self._sequences_indexes.keys(), key=lambda x: self._sequences_indexes[x])

+ for seq_id in seq_ids:

+ seq: Sequence = self._sequences_dict[seq_id]

+ out_li.extend(seq.input_token_id)

+ return torch.tensor(out_li, dtype=torch.long, device=self.device)

+ else:

+ # Assume decoding stage

+ if self.use_spec_dec:

+ # For Speculative Decoding

+ # the number of tokens to be verified in parallel plus the correct token in the last step

+ return self.get_1D_inputs_spec_dec(self.num_tokens_to_verify + 1)

+ assert all(

+ seq.output_len > 0 for seq in self._sequences_dict.values()

+ ), "Sequence stage (Prefill/Decoding) must be the same in the batch"

+ assert self.is_compact, "BatchBucket is not compact"

+ out = torch.empty([self.current_batch_size], dtype=torch.long)

+ for seq_id, index_in_b in self._sequences_indexes.items():

+ seq: Sequence = self._sequences_dict[seq_id]

+ out[index_in_b] = seq.output_token_id[-1]

+ return out.to(device=self.device)

+

+ # For compatibility

+ def get_block_table_tensor(self) -> torch.Tensor:

+ assert self.is_compact # Debug usage

+ block_table = self.block_tables[: self.current_batch_size]

+ return block_table.to(device=self.device)

+

+ # For compatibility

+ def get_sequence_lengths(self) -> torch.Tensor:

+ assert self.is_compact # Debug usage

+ sequence_lengths = self.seq_lengths[: self.current_batch_size]

+ return sequence_lengths.to(device=self.device)

+

+ # For compatibility

+ @property

+ def fd_inter_tensor(self) -> None:

+ assert self.fd_interm_tensor is not None, "fd_interm_tensor is not provided"

+ return self.fd_interm_tensor

+

+ def __repr__(self) -> str:

+ return f"(sequences_dict={self._sequences_dict}, is_prompts={self.is_prompts})"

diff --git a/colossalai/inference/config.py b/colossalai/inference/config.py

new file mode 100644

index 000000000000..70faf34e36a4

--- /dev/null

+++ b/colossalai/inference/config.py

@@ -0,0 +1,341 @@

+"""

+Our config contains various options for inference optimization, it is a unified API that wraps all the configurations for inference.

+"""

+import logging

+from abc import ABC, abstractmethod

+from dataclasses import dataclass, fields

+from typing import Any, Dict, List, Optional, Union

+

+import torch

+from transformers.generation import GenerationConfig

+

+from colossalai.inference.flash_decoding_utils import FDIntermTensors

+

+GibiByte = 1024**3

+

+logger = logging.Logger(__name__)

+

+_DTYPE_MAPPING = {

+ "fp16": torch.float16,

+ "bf16": torch.bfloat16,

+ "fp32": torch.float32,

+}

+

+_ALLOWED_DTYPES = [torch.float16, torch.bfloat16, torch.float32]

+

+_DEFAULT_PROMPT_TEMPLATES = {

+ "llama": "[INST] <>\nYou are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature. If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.\n<>\n{input_text}[/INST]",

+ "baichuan": " {input_text} ",

+ "vicuna": "A chat between a curious user and an assistant. The assistant gives helpful, detailed, accurate, uncensored responses to the user input. USER: {input_text}\nASSISTANT: ",

+}

+

+

+class RPC_PARAM(ABC):

+ """

+ NOTE(lry89757) We use rpyc to transport param between client and server.

+ Rpyc only support the type of `POD` in python as the param, so we should take some smart ways to transport the data like tensor or some sophisticated classes.

+ Drawing on the logic of `__setstate__`, `__getstate__`, we will let some classes(will be rpc param later) inherit this base class, and rewrite the to_rpc_param and from_rpc_param. We will invoke `to_rpc_param` in client to pass the params and recover the param in server side by `from_rpc_param`.

+ """

+

+ @abstractmethod

+ def to_rpc_param(self):

+ return NotImplementedError

+

+ @staticmethod

+ @abstractmethod

+ def from_rpc_param():

+ return NotImplementedError

+

+

+@dataclass

+class InputMetaData(RPC_PARAM):

+ """The input info for a single step

+

+ Args:

+ block_tables (torch.Tensor, optional): Sequences' BlockTables Defaults to None.

+ sequence_lengths (torch.Tensor): A tensor containing sequence lengths.

+ fd_inter_tensor (torch.Tensor, optional): A tensor representing intermediate data for flash decoding. Defaults to None.

+ batch_size (int, optional): The current batch size. Defaults to 64.

+ is_prompts (bool, optional): Indicates whether prefill or decoding. Defaults to False(decoding).

+ use_cuda_kernel(bool): Whether to use cuda kernel, faster but lose some precision occasionally

+ use_cuda_graph (bool, optional): Indicates whether to use the CUDA graph. Defaults to False.

+ kv_seq_len (int, optional): Key-value sequence length. Defaults to 512.

+ head_dim (int, optional): Head dimension. Defaults to 32.

+ high_precision(bool, optional): Whether to use float32 for underlying calculations of float16 data to achieve higher precision, Defaults to False.

+ dtype (torch.dtype, optional): The computation type of tensor, Defaults to torch.float32.

+ use_spec_dec (bool): Indicate whether to use speculative decoding.

+ num_tokens_to_verify (int): The number of tokens to verify in speculative decoding. Only valid when `use_spec_dec` is set to True.

+ batch_token_ids (List[List[int]], optional): input_token_ids + output_token_ids of current batch. Only used for `repetition_penalty`, `no_repeat_ngram_size` in sampler process.

+ """

+

+ block_tables: torch.Tensor = None

+ sequence_lengths: torch.Tensor = None

+ fd_inter_tensor: FDIntermTensors = None

+ batch_size: int = 64 # current_batch_size

+ is_prompts: bool = False

+ use_cuda_kernel: bool = False

+ use_cuda_graph: bool = False

+ kv_seq_len: int = 512

+ head_dim: int = 32

+ high_precision: bool = False

+ dtype: torch.dtype = torch.float32

+ use_spec_dec: bool = False

+ num_tokens_to_verify: int = 0

+ batch_token_ids: Optional[

+ List[List[int]]

+ ] = None # for `repetition_penalty`, `no_repeat_ngram_size` in sampler process

+

+ def to_rpc_param(self) -> Dict[str, any]:

+ return {

+ "block_tables": self.block_tables.tolist(),

+ "sequence_lengths": self.sequence_lengths.tolist(),

+ "batch_size": self.batch_size,

+ "is_prompts": self.is_prompts,

+ "use_cuda_kernel": self.use_cuda_kernel,

+ "use_cuda_graph": self.use_cuda_graph,

+ "kv_seq_len": self.kv_seq_len,

+ "head_dim": self.head_dim,

+ "high_precision": self.high_precision,

+ "dtype": str(self.dtype).split(".")[-1],

+ "use_spec_dec": self.use_spec_dec,

+ "num_tokens_to_verify": self.num_tokens_to_verify,

+ "batch_token_ids": self.batch_token_ids,

+ }

+

+ @staticmethod

+ def from_rpc_param(rpc_dict: Dict[str, any]) -> "InputMetaData":

+ """

+ We intentionally don't use `dict.get` method to ensure we pass the right rpc param, or program will show error message

+ """

+ from colossalai.accelerator import get_accelerator

+

+ dtype = getattr(torch, rpc_dict["dtype"])

+ return InputMetaData(

+ block_tables=torch.tensor(

+ rpc_dict["block_tables"], dtype=torch.int, device=get_accelerator().get_current_device()

+ ),

+ sequence_lengths=torch.tensor(

+ rpc_dict["sequence_lengths"], dtype=torch.int, device=get_accelerator().get_current_device()

+ ),

+ batch_size=rpc_dict["batch_size"],

+ is_prompts=rpc_dict["is_prompts"],

+ use_cuda_kernel=rpc_dict["use_cuda_kernel"],

+ use_cuda_graph=rpc_dict["use_cuda_graph"],

+ kv_seq_len=rpc_dict["kv_seq_len"],

+ head_dim=rpc_dict["head_dim"],

+ high_precision=rpc_dict["high_precision"],

+ dtype=dtype,

+ use_spec_dec=rpc_dict["use_spec_dec"],

+ num_tokens_to_verify=rpc_dict["num_tokens_to_verify"],

+ batch_token_ids=rpc_dict["batch_token_ids"],

+ )

+

+ def __repr__(self) -> str:

+ return (

+ f"InputMetaData(block_tables={self.block_tables}, "

+ f"sequence_lengths={self.sequence_lengths}, "

+ f"fd_inter_tensor={self.fd_inter_tensor}, "

+ f"batch_size={self.batch_size}, "

+ f"is_prompts={self.is_prompts}, "

+ f"use_cuda_kernel={self.use_cuda_kernel}, "

+ f"use_cuda_graph={self.use_cuda_graph}, "

+ f"kv_seq_len={self.kv_seq_len}, "

+ f"use_spec_dec={self.use_spec_dec}, "

+ f"num_tokens_to_verify={self.num_tokens_to_verify})"

+ )

+

+

+@dataclass

+class InferenceConfig(RPC_PARAM):

+ """The inference configuration.

+

+ Args:

+ max_batch_size (int): Maximum batch size, defaults to 8.

+ max_output_len (int): Maximum output length, defaults to 256.

+ max_input_len (int): Maximum input length, defaults to 256.

+ dtype (Union[str, torch.dtype]): The data type for weights and activations.

+ kv_cache_dtype (Optional[str]): The data type of kv_cache, defaults to None.

+ prompt_template (Optional[str]): The prompt template for generation, defaults to None.

+ do_sample (bool): Whether to use sampling for generation, defaults to False.

+ beam_width (int): The maximum beam width used to initialize KV Cache, defaults to 1.

+ During generation, the beam width provided as sampling parameter should be less than or equivalent to this value.

+ prefill_ratio (Optional[float]): A controling ratio for prefill and decoding in running list, defaults to 1.2. We will do a step of prefill

+ when the actual value exceeds this ratio.

+ pad_input: Whether to pad all inputs to the max length.

+ early_stopping (Optional[bool]): Whether to stop the generation when all beam hypotheses have finished or not, defaults to False.

+ top_k (Optional[int]): The number of highest probability vocabulary tokens to keep for top-k-filtering, defaults to None.

+ top_p (Optional[float]): The cumulative probability threshold for retaining tokens with a total probability above it, defaults to None.

+ temperature (Optional[float]): Randomness used to control randomization, defaults to 1.0.

+ repetition_penalty (Optional[float]): The parameter that influences the model's treatment of new tokens in relation to their appearance in the prompt and the generated text. Values greater than 1 incentivize the model to introduce new tokens, whereas values less than 1 incentivize token repetition., defaults to 1.0.

+ no_repeat_ngram_size (Optional[int]): If no_repeat_ngram_size > 0, the consecutive tokens of ngram size can only appear once in inference sentences.

+ n_spec_tokens (int): The maximum number of speculating tokens, defaults to None.

+ glimpse_large_kv (bool): Whether to use large KV in drafter model, defaults to False.

+ block_size (int): The number of blocks in a logical block, defaults to 16.

+ tp_size (int): Tensor parallel size, defaults to 1.

+ pp_size (int): Pipeline parallel size, defaults to 1.

+ micro_batch_size (int): the micro batch size, defaults to 1. Only useful when `pp_size` > 1.

+ micro_batch_buffer_size (int): the buffer size for micro batch. Normally, it should be the same as the number of pipeline stages.

+ use_cuda_kernel(bool): Whether to use cuda kernel, faster but lose some precision occasionally

+ use_cuda_graph (bool): Whether to enforce CUDA graph execution. If False, we will disable CUDA graph and always execute the model in eager mode. If True, we will use eager execution in hybrid.

+ max_context_len_to_capture (int): max context len that could be captured by CUDA Graph, per sequence

+ high_precision(Optional[bool]): Whether to use float32 for underlying calculations of float16 data to achieve higher precision, defaults to False.

+ ignore_eos(bool): Whether to ignore the EOS token and continue generating tokens when encountering the EOS token.

+ """

+

+ # NOTE: arrange configs according to their importance and frequency of usage

+

+ # runtime limit

+ max_batch_size: int = 8

+ max_output_len: int = 256

+ max_input_len: int = 256

+

+ # general configs

+ dtype: Union[str, torch.dtype] = torch.float16 # use fp16 by default

+ kv_cache_dtype: Optional[str] = None

+

+ # generation configs

+ prompt_template: Optional[str] = None

+ do_sample: bool = False

+ beam_width: int = 1 # TODO: beam search is not support for now

+ prefill_ratio: Optional[

+ float

+ ] = 1.2 # the ratio of prefill sequences to decoding sequences, we do prefill step once the actual value exceeds ratio

+ pad_input: bool = False

+ early_stopping: Optional[bool] = False

+ top_k: Optional[int] = None

+ top_p: Optional[float] = None

+ temperature: Optional[float] = 1.0

+ no_repeat_ngram_size: Optional[int] = 0

+ repetition_penalty: Optional[float] = 1.0

+

+ # speculative decoding configs

+ max_n_spec_tokens: int = 5

+ glimpse_large_kv: bool = False

+

+ # paged attention configs

+ block_size: int = 16

+

+ # model parallelism configs

+ tp_size: int = 1

+ pp_size: int = 1

+ micro_batch_size: int = 1

+ micro_batch_buffer_size: int = None

+ high_precision: Optional[bool] = False

+

+ # cuda kernel option

+ use_cuda_kernel: bool = False

+

+ # cuda_graph

+ use_cuda_graph: bool = False # NOTE only when we have the graph for specific decoding batch size can we use the cuda graph for inference

+ max_context_len_to_capture: int = 512

+ ignore_eos: bool = False

+

+ def __post_init__(self):

+ self.max_context_len_to_capture = self.max_input_len + self.max_output_len

+ self._verify_config()

+

+ def _verify_config(self) -> None:

+ """

+ Verify the input config

+ """

+ # check dtype

+ if isinstance(self.dtype, str):

+ # convert string dtype to torch dtype

+ assert (

+ self.dtype in _DTYPE_MAPPING

+ ), f"Expected the dtype string argument to be in {list(_DTYPE_MAPPING.keys())} but found an unknown dtype: {self.dtype}"

+ self.dtype = _DTYPE_MAPPING[self.dtype]

+ assert (

+ self.dtype in _ALLOWED_DTYPES

+ ), f"Expected dtype to be in {_ALLOWED_DTYPES} but found an unknown dtype: {self.dtype}"

+

+ if self.kv_cache_dtype:

+ assert (

+ self.use_cuda_kernel and self.kv_cache_dtype == "fp8"

+ ), f"FP8 kv_cache is only supported with use_cuda_kernel open now"

+ self.kv_cache_dtype = torch.uint8

+

+ # skip using casting when the data type is float32

+ if self.dtype == torch.float32:

+ self.high_precision = False

+

+ # check prompt template

+ if self.prompt_template is None:

+ return

+

+ if self.prompt_template in _DEFAULT_PROMPT_TEMPLATES:

+ self.prompt_template = _DEFAULT_PROMPT_TEMPLATES[self.prompt_template]

+ else:

+ # make sure the template can be formatted with input_text

+ assert (

+ "{input_text}" in self.prompt_template

+ ), "The prompt template should contain '{input_text}' for formatting the input text. For example: 'USER: {input_text}\n\nASSISTANT: '"

+

+ def to_generation_config(self, model_config) -> GenerationConfig:

+ meta_config = {

+ "max_length": self.max_input_len + self.max_output_len,

+ "max_new_tokens": self.max_output_len,

+ "early_stopping": self.early_stopping,

+ "do_sample": self.do_sample,

+ "num_beams": self.beam_width,

+ }

+ for type in ["repetition_penalty", "no_repeat_ngram_size", "temperature", "top_k", "top_p"]:

+ if hasattr(self, type):

+ meta_config[type] = getattr(self, type)

+ for type in ["pad_token_id", "bos_token_id", "eos_token_id"]:

+ if hasattr(model_config, type):

+ meta_config[type] = getattr(model_config, type)

+

+ return GenerationConfig.from_dict(meta_config)

+

+ def to_rpc_param(self) -> dict:

+ kwargs = {

+ "dtype": str(self.dtype).split(".")[-1],

+ "max_n_spec_tokens": self.max_n_spec_tokens,

+ "max_batch_size": self.max_batch_size,

+ "max_input_len": self.max_input_len,

+ "max_output_len": self.max_output_len,

+ "tp_size": self.tp_size,

+ "pp_size": self.pp_size,

+ "pad_input": self.pad_input,

+ "early_stopping": self.early_stopping,

+ "do_sample": self.do_sample,

+ "beam_width": self.beam_width,

+ "kv_cache_dtype": str(self.kv_cache_dtype).split(".")[-1],

+ }

+ return kwargs

+

+ @staticmethod

+ def from_rpc_param(rpc_dict: dict) -> "InferenceConfig":

+ """

+ We intentionally don't use `dict.get` method to ensure we pass the right rpc param, or program will show error message

+ """

+ return InferenceConfig(

+ dtype=getattr(torch, rpc_dict["dtype"]),

+ max_n_spec_tokens=rpc_dict["max_n_spec_tokens"],

+ max_batch_size=rpc_dict["max_batch_size"],

+ max_input_len=rpc_dict["max_input_len"],

+ max_output_len=rpc_dict["max_output_len"],

+ tp_size=rpc_dict["tp_size"],

+ pp_size=rpc_dict["pp_size"],

+ pad_input=rpc_dict["pad_input"],

+ early_stopping=rpc_dict["early_stopping"],

+ do_sample=rpc_dict["do_sample"],

+ beam_width=rpc_dict["beam_width"],

+ kv_cache_dtype=getattr(torch, rpc_dict["kv_cache_dtype"], None),

+ )

+

+ @classmethod

+ def from_dict(cls, config_dict: Dict[str, Any]) -> "InferenceConfig":

+ # Get the list of attributes of this dataclass.

+ attrs = [attr.name for attr in fields(cls)]

+ inference_config_args = {}

+ for attr in attrs:

+ if attr in config_dict:

+ inference_config_args[attr] = config_dict[attr]

+ else:

+ inference_config_args[attr] = getattr(cls, attr)

+

+ # Set the attributes from the parsed arguments.

+ inference_config = cls(**inference_config_args)

+ return inference_config

diff --git a/colossalai/inference/core/__init__.py b/colossalai/inference/core/__init__.py

new file mode 100644

index 000000000000..c18c2e59b522

--- /dev/null

+++ b/colossalai/inference/core/__init__.py

@@ -0,0 +1,4 @@

+from .engine import InferenceEngine

+from .request_handler import RequestHandler

+

+__all__ = ["InferenceEngine", "RequestHandler"]

diff --git a/colossalai/inference/core/async_engine.py b/colossalai/inference/core/async_engine.py

new file mode 100644

index 000000000000..6f7ab15d8f58

--- /dev/null

+++ b/colossalai/inference/core/async_engine.py

@@ -0,0 +1,309 @@

+import asyncio

+import logging

+from functools import partial

+from typing import AsyncIterator, Dict, Iterable, List, Optional, Set, Tuple, Type

+

+from colossalai.inference.core.engine import InferenceEngine

+

+# CLI logger

+logging.basicConfig(level=logging.DEBUG, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")

+logger = logging.getLogger("colossalai-inference")

+

+

+def _raise_exception_on_finish(task: asyncio.Task, request_tracker: "Tracer") -> None:

+ msg = "Task finished unexpectedly. This should never happen! "

+ try:

+ try:

+ task.result()

+ except asyncio.CancelledError:

+ return

+ except Exception as exc:

+ raise RuntimeError(msg + " See stack trace above for the actual cause.") from exc

+ raise RuntimeError(msg)

+ except Exception as exc:

+ request_tracker.propagate_exception(exc)

+ raise exc

+

+

+class RequstStream:

+ """

+ A stream of Output for a request that can be iterated over asynchronously.

+ Attributes: 1.request_id: The id of the request.

+ 2._future: A future that will be set when the request is finished.

+ Methods: set_result and get_result, results will be set when finished, for once, and

+ the `self.future` will be set to done.

+

+ """

+

+ def __init__(self, request_id: int) -> None:

+ self.request_id = request_id

+ self._future = asyncio.Future()

+

+ def set_result(self, result) -> None:

+ """Set final result and signal taht it's ready"""

+ if not self._future.done():

+ self._future.set_result(result)

+

+ async def get_result(self):

+ """Wait for the result to be set and return it."""

+ return await self._future

+

+ @property

+ def finished(self) -> bool:

+ """Check if the stream has finished by checking if the future is done."""

+ return self._future.done()

+

+

+class Tracer:

+ """

+ Recording new requests and finished requests.

+ Attributes: 1._request_streams: We create one stream for each request to trace the output.

+ 2._finished_requests: A queue to store the finished requests.

+ 3._new_requests: New requests will be stored in this queue first, before sending them to the engine.

+ 4.new_requests_event: An event to notify the engine that there are new requests.

+ """

+

+ def __init__(self) -> None:

+ self._request_streams: Dict[int, RequstStream] = {}

+ self._finished_requests: asyncio.Queue[int] = asyncio.Queue()

+ self._new_requests: asyncio.Queue[Tuple[RequstStream, dict]] = asyncio.Queue()

+ self.new_requests_event = None

+

+ def __contains__(self, item):

+ return item in self._request_streams

+

+ def init_event(self):

+ self.new_requests_event = asyncio.Event()

+

+ def propagate_exception(self, exc: Exception, request_id: Optional[int] = None) -> None:

+ """

+ Propagate an exception to request streams (all if request_id is None).

+ """

+ if request_id is not None:

+ self._request_streams[request_id].set_result(exc)

+ else:

+ for stream in self._request_streams.values():

+ stream.set_result(exc)

+

+ def process_finished_request(self, finished_request) -> None:

+ """Process a finished request from the engine."""

+ request_id = finished_request.request_id

+ try:

+ self._request_streams[request_id].set_result(finished_request)

+ except:

+ raise RuntimeError(f"The request_id {request_id} is not found in our stream, please check")

+ self.abort_request(request_id)

+

+ def add_request(self, request_id: int, **engine_add_request_kwargs) -> RequstStream:

+ """

+ Add a request to be sent to the engine on the next background

+ loop iteration.

+ """

+ if request_id in self._request_streams:

+ raise KeyError(f"Request {request_id} already exists.")

+

+ stream = RequstStream(request_id)

+ logger.info(f"Added request {request_id}.")

+ self._new_requests.put_nowait((stream, {"request_id": request_id, **engine_add_request_kwargs}))

+ self.new_requests_event.set()

+

+ return stream

+

+ def abort_request(self, request_id: int, *, verbose: bool = False) -> None:

+ """Abort a request during next background loop iteration."""

+ if verbose:

+ logger.info(f"Aborted request {request_id}.")

+

+ self._finished_requests.put_nowait(request_id)

+

+ if request_id not in self._request_streams or self._request_streams[request_id].finished:

+ # The request has already finished or been aborted.

+ # The requests in new_requests will be aborted when try to get them(if marked aborted)

+ return

+

+ self._request_streams[request_id].set_result(None)

+

+ def get_new_requests(self):

+ """

+ Get new requests from http server.

+ """

+ new_requests: List[Dict] = []

+ finished_requests: Set[int] = set()

+

+ while not self._finished_requests.empty():

+ request_id = self._finished_requests.get_nowait()

+ finished_requests.add(request_id)

+

+ while not self._new_requests.empty():

+ stream, new_request = self._new_requests.get_nowait()

+ if new_request["request_id"] in finished_requests:

+ # The request has been aborted.

+ stream.set_result(None)

+ continue

+ self._request_streams[stream.request_id] = stream

+ new_requests.append(new_request)

+

+ self.new_requests_event.clear()

+

+ return new_requests

+

+ async def wait_for_new_requests(self):

+ await self.new_requests_event.wait()

+

+

+class _AsyncInferenceEngine(InferenceEngine):

+ """

+ Async methods for Inference Engine. This engine is an extension for InferenceEngine, and the additional methods will only be used for

+ Methods: 1. async_step: The async version of Engine.step()

+ """

+

+ async def async_step(self) -> List[str]:

+ """

+ The async version of Engine.step()

+ Performs one decoding iteration and returns newly generated results.

+

+ It first schedules the sequences to be executed in the next iteration.

+ Then, it executes the model and updates the scheduler with the model

+ outputs. Finally, it decodes the sequences and returns the newly

+ generated results.

+ """

+ batch = self.request_handler.schedule()

+ loop = asyncio.get_running_loop()

+

+ # Use run_in_executor to asyncally run the sync method model.forward().

+ logits = await loop.run_in_executor(

+ None,

+ self.model,

+ batch,

+ self.k_cache,

+ self.v_cache,

+ )

+

+ if self.inference_config.pad_input:

+ logits = logits[:, -1, :]

+ self.request_handler.search_tokens(self.generation_config, logits)

+

+ finished_sequences = self.request_handler.update()

+ for sequence in finished_sequences:

+ sequence.output = self.tokenizer.decode(sequence.output_token_id)

+

+ return finished_sequences, self.request_handler.total_requests_in_batch_bucket() > 0

+

+

+class AsyncInferenceEngine:

+ """An asynchronous wrapper for the InferenceEngine class.

+

+ This class is used to wrap the InferenceEngine class to make it asynchronous.

+ It uses asyncio to create a background loop that keeps processing incoming

+ requests. Note that this class does not hold model directly, when incoming a new

+ request, it first called `add_request` and the Tracer will record the request, putting

+ it to the background `InferenceEngine`(done in background loop) to process. You can

+ consider this engine as an interface.

+ """

+

+ _engine_class: Type[_AsyncInferenceEngine] = _AsyncInferenceEngine

+

+ def __init__(self, start_engine_loop: bool = True, **kwargs):

+ self.engine = self._init_engine(**kwargs)

+ self.background_loop = None

+ # reference to the unshielded loop

+ self._background_loop_unshielded = None

+ self.start_engine_loop = start_engine_loop

+ self._request_tracer = Tracer()

+

+ @property

+ def background_loop_status(self):

+ return self.background_loop is not None and not self.background_loop.done()

+

+ def start_background_loop(self):

+ if self.background_loop_status:

+ raise RuntimeError("Existing loop is running")

+

+ self._request_tracer.init_event()

+

+ self._background_loop_unshielded = asyncio.get_event_loop().create_task(self.run_engine_loop())

+ self._background_loop_unshielded.add_done_callback(

+ partial(_raise_exception_on_finish, request_tracker=self._request_tracer)

+ )

+ self.background_loop = asyncio.shield(self._background_loop_unshielded)

+

+ def _init_engine(self, **kwargs):

+ return self._engine_class(**kwargs)

+

+ async def step(self):

+ """

+ Run engine to process requests

+

+ Returns True if there are in-progress requests.

+ """

+ new_requests = self._request_tracer.get_new_requests()

+ for new_request in new_requests:

+ self.engine.add_single_request(**new_request)

+ newly_finished_seqs, has_running_requests = await self.engine.async_step()

+

+ for seq in newly_finished_seqs:

+ self._request_tracer.process_finished_request(seq)

+

+ return has_running_requests

+

+ async def _engine_abort(self, request_ids: Iterable[int]):

+ self.engine.abort_request(request_ids)

+

+ async def abort(self, request_id: int):

+ """

+ Abort a single request

+ """

+ if not self.background_loop_status:

+ raise RuntimeError("Background loop is not running or launched correctly.")

+ return self._abort(request_id)

+

+ def _abort(self, request_id: int):

+ self._request_tracer.abort_request(request_id)

+

+ async def run_engine_loop(self):

+ processing_requests = False

+ while True:

+ if not processing_requests:

+ await self._request_tracer.wait_for_new_requests()

+ processing_requests = await self.step()

+ await asyncio.sleep(0)

+

+ async def add_request(

+ self,

+ request_id: int,

+ prompt: Optional[str],

+ prompt_token_ids: Optional[List[int]] = None,

+ ) -> RequstStream:

+ """

+ Add a request to the background tracker(waiting queue), start the background loop if needed.

+ """

+ if not self.background_loop_status:

+ if self.start_engine_loop:

+ self.start_background_loop()

+ else:

+ raise RuntimeError("Background loop is not running.")

+ stream = self._request_tracer.add_request(

+ request_id,

+ prompt=prompt,

+ prompt_token_ids=prompt_token_ids,

+ )

+ return stream

+

+ async def generate(

+ self,

+ request_id: int,

+ prompt: Optional[str],

+ prompt_token_ids: Optional[List[int]] = None,

+ ) -> AsyncIterator[str]:

+ """

+ Generate output from a request. It receives the request from http server, adds it into the

+ waitting queue of Async Engine and streams the output sequence.

+ """

+ try:

+ stream = await self.add_request(request_id, prompt, prompt_token_ids=prompt_token_ids)

+ return await stream.get_result()

+

+ except (Exception, asyncio.CancelledError) as e:

+ # If there is an exception or coroutine is cancelled, abort the request.

+ self._abort(request_id)

+ raise e

diff --git a/colossalai/inference/core/engine.py b/colossalai/inference/core/engine.py

new file mode 100644

index 000000000000..7b456b8bea4f

--- /dev/null

+++ b/colossalai/inference/core/engine.py

@@ -0,0 +1,756 @@

+import time

+from itertools import count

+from typing import Dict, List, Optional, Tuple, Union

+

+import numpy as np

+import torch

+import torch.nn as nn

+from torch import distributed as dist

+from transformers import (

+ AutoConfig,

+ AutoModelForCausalLM,

+ GenerationConfig,

+ PreTrainedTokenizer,

+ PreTrainedTokenizerFast,

+)

+from transformers.models.llama.modeling_llama import LlamaForCausalLM

+

+from colossalai.accelerator import get_accelerator

+from colossalai.cluster import ProcessGroupMesh

+from colossalai.inference.batch_bucket import BatchBucket

+from colossalai.inference.config import InferenceConfig, InputMetaData

+from colossalai.inference.graph_runner import CUDAGraphRunner

+from colossalai.inference.modeling.policy import model_policy_map

+from colossalai.inference.sampler import search_tokens

+from colossalai.inference.spec import Drafter, GlideInput

+from colossalai.inference.struct import Sequence

+from colossalai.inference.utils import get_model_size, has_index_file

+from colossalai.interface import ModelWrapper

+from colossalai.logging import get_dist_logger

+from colossalai.pipeline.stage_manager import PipelineStageManager

+from colossalai.shardformer import ShardConfig, ShardFormer

+from colossalai.shardformer.policies.base_policy import Policy

+

+from .request_handler import RequestHandler

+

+__all__ = ["InferenceEngine"]

+

+PP_AXIS, TP_AXIS = 0, 1

+

+_supported_models = {

+ "LlamaForCausalLM": LlamaForCausalLM,

+ "BaichuanForCausalLM": AutoModelForCausalLM,

+}

+

+_BATCH_SIZES_TO_CAPTURE = [1, 2, 4] + [8 * i for i in range(1, 33)]

+

+

+class InferenceEngine:

+

+ """

+ InferenceEngine which manages the inference process..

+

+ Args:

+ model_or_path (nn.Module or str): Path or nn.Module of this model.

+ tokenizer Optional[(Union[PreTrainedTokenizer, PreTrainedTokenizerFast])]: Path of the tokenizer to use.

+ inference_config (Optional[InferenceConfig], optional): Store the configuration information related to inference.

+ verbose (bool): Determine whether or not to log the generation process.

+ model_policy ("Policy"): the policy to shardformer model. It will be determined by the model type if not provided.

+ """

+

+ def __init__(

+ self,

+ model_or_path: Union[nn.Module, str],

+ tokenizer: Union[PreTrainedTokenizer, PreTrainedTokenizerFast],

+ inference_config: InferenceConfig,

+ verbose: bool = False,

+ model_policy: Policy = None,

+ ) -> None:

+ self.inference_config = inference_config

+ self.dtype = inference_config.dtype

+ self.high_precision = inference_config.high_precision

+

+ self.verbose = verbose

+ self.logger = get_dist_logger(__name__)

+

+ self.init_model(model_or_path, model_policy)

+

+ self.generation_config = inference_config.to_generation_config(self.model_config)

+

+ self.tokenizer = tokenizer

+ self.tokenizer.pad_token = self.tokenizer.eos_token

+

+ self.request_handler = RequestHandler(self.inference_config, self.model_config)

+ self.k_cache, self.v_cache = self.request_handler.get_kvcache()

+ # DISCUSS maybe move this into batch info?

+

+ self.counter = count()

+

+ self.use_cuda_graph = self.inference_config.use_cuda_graph

+ if self.use_cuda_graph:

+ self.graph_runners: Dict[int, CUDAGraphRunner] = {}

+ self.graph_memory_pool = None # Set during graph capture.

+ if verbose:

+ self.logger.info("Colossal AI CUDA Graph Capture on")

+

+ self.capture_model(self.k_cache, self.v_cache)

+

+ # Model and relatable attrs of speculative decoding will be set by `enable_spec_dec`

+ self.use_spec_dec = False

+ self.drafter_model = None

+ self.drafter = None

+ self.use_glide = False

+ self.n_spec_tokens = self.inference_config.max_n_spec_tokens

+

+ self._verify_args()

+

+ def init_model(self, model_or_path: Union[nn.Module, str], model_policy: Policy = None):

+ """

+ Shard model or/and Load weight

+

+ Args:

+ model_or_path Union[nn.Module, str]: path to the checkpoint or model of transformer format.

+ model_policy (Policy): the policy to replace the model

+ """

+

+ casuallm = None

+ if isinstance(model_or_path, str):

+ try:

+ hf_config = AutoConfig.from_pretrained(model_or_path, trust_remote_code=True)

+ arch = getattr(hf_config, "architectures")[0]

+ if arch in _supported_models.keys():

+ casuallm = _supported_models[arch](hf_config)