diff --git a/.git-blame-ignore-revs b/.git-blame-ignore-revs

index df9bdf0b..45f3f915 100644

--- a/.git-blame-ignore-revs

+++ b/.git-blame-ignore-revs

@@ -1,4 +1,8 @@

# .git-blame-ignore-revs

# created as described in: https://docs.github.com/en/repositories/working-with-files/using-files/viewing-a-file#ignore-commits-in-the-blame-view

+

# black-format files

07517c3353c392106cabae003d589946ea25918a

+82f6df5ed34460374ce7c0fdca089d8caa570b9f

+aa7050f973f36dc204ea495e105b5432223dc68d

+a3dd7e5e9bc0bed404792e9b241f1639ade76f33

diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

new file mode 100644

index 00000000..9295db3d

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/bug_report.md

@@ -0,0 +1,30 @@

+---

+name: Bug report

+about: Create a report to help us improve

+title: "[BUG]"

+labels: ''

+assignees: ''

+

+---

+

+**Summary and expected behavior**

+

+**Code for reproduction (using python script or command line interface)**

+```

+# paste your code here

+

+```

+

+**Screenshots or steps for reproduction (using napari GUI)**

+

+**If you encounter reconstruction errors, include the relevant logs (created next to the output directory), the name of the dataset, and the YAML file(s).**

+

+**Expected behavior**

+A clear and concise description of what you expected to happen.

+

+**Environment:**

+

+Operating system:

+Python version:

+Python environment (command line, IDE, Jupyter notebook, etc):

+Micro-Manager/pycromanager version:

diff --git a/.github/ISSUE_TEMPLATE/documentation.md b/.github/ISSUE_TEMPLATE/documentation.md

new file mode 100644

index 00000000..f7917f4f

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/documentation.md

@@ -0,0 +1,12 @@

+---

+name: Documentation

+about: Help us improve documentation

+title: "[DOC]"

+labels: ''

+assignees: ''

+

+---

+

+**Suggested improvement**

+

+**Optional: Pull request with better documentation**

diff --git a/.github/ISSUE_TEMPLATE/feature_request.md b/.github/ISSUE_TEMPLATE/feature_request.md

new file mode 100644

index 00000000..9e04f113

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/feature_request.md

@@ -0,0 +1,18 @@

+---

+name: Feature request

+about: Suggest an idea for this project

+title: "[FEATURE]"

+labels: ''

+assignees: ''

+

+---

+

+**Problem**

+

+**Proposed solution**

+

+

+**Alternatives you have considered, if any**

+

+**Additional context**

+Note relevant experimental conditions or datasets

diff --git a/.github/workflows/pr.yml b/.github/workflows/pr.yml

index 16645e0c..9f49a51a 100644

--- a/.github/workflows/pr.yml

+++ b/.github/workflows/pr.yml

@@ -5,8 +5,6 @@ name: lint, style, and tests

on:

pull_request:

- branches:

- - main

jobs:

style:

@@ -77,25 +75,25 @@ jobs:

run: |

isort --check waveorder

- tests:

- needs: [style, isort] # lint

- runs-on: ubuntu-latest

- strategy:

- matrix:

- python-version: ["3.10", "3.11", "3.12"]

+ # needs: [style, isort] # lint

+ # runs-on: ubuntu-latest

+ # strategy:

+ # matrix:

+ # python-version: ["3.10", "3.11", "3.12"]

- steps:

- - uses: actions/checkout@v3

+ # steps:

+ # - uses: actions/checkout@v3

- - uses: actions/setup-python@v4

- with:

- python-version: ${{ matrix.python-version }}

+ # - uses: actions/setup-python@v4

+ # with:

+ # python-version: ${{ matrix.python-version }}

- - name: Install dependencies

- run: |

- python -m pip install --upgrade pip

- pip install ".[dev]"

+ # - name: Install dependencies

+ # run: |

+ # python -m pip install --upgrade pip

+ # pip install ".[all,dev]"

- - name: Test with pytest

- run: |

- pytest -v --cov=./ --cov-report=xml

+ # - name: Test with pytest

+ # run: |

+ # pytest -v

+ # pytest -v --cov=./ --cov-report=xml

diff --git a/.github/workflows/pytests.yml b/.github/workflows/pytests.yml

deleted file mode 100644

index 8028c79d..00000000

--- a/.github/workflows/pytests.yml

+++ /dev/null

@@ -1,48 +0,0 @@

-# This workflow will install Python dependencies, run tests and lint with a single version of Python

-# For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

-

-name: pytests

-

-on: [push]

-

-jobs:

- build:

-

- runs-on: ubuntu-latest

- strategy:

- matrix:

- python-version: ['3.10', '3.11', '3.12']

-

- steps:

- - name: Checkout repo

- uses: actions/checkout@v2

-

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v1

- with:

- python-version: ${{ matrix.python-version }}

-

- - name: Install dependencies

- run: |

- python -m pip install --upgrade pip

- pip install .[dev]

-

-# - name: Lint with flake8

-# run: |

-# pip install flake8

-# # stop the build if there are Python syntax errors or undefined names

-# flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

-# # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

-# flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

-

- - name: Test with pytest

- run: |

- pytest -v

- pytest --cov=./ --cov-report=xml

-

-# - name: Upload coverage to Codecov

-# uses: codecov/codecov-action@v1

-# with:

-# flags: unittest

-# name: codecov-umbrella

-# fail_ci_if_error: true

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

new file mode 100644

index 00000000..ef91d701

--- /dev/null

+++ b/.github/workflows/test.yml

@@ -0,0 +1,45 @@

+name: test

+

+on: [push]

+

+jobs:

+ test:

+ name: ${{ matrix.platform }} py${{ matrix.python-version }}

+ runs-on: ${{ matrix.platform }}

+ strategy:

+ matrix:

+ platform: [ubuntu-latest, windows-latest, macos-latest]

+ python-version: ["3.10", "3.11"]

+

+ steps:

+ - name: Checkout repo

+ uses: actions/checkout@v3

+

+ - name: Set up Python ${{ matrix.python-version }}

+ uses: actions/setup-python@v4

+ with:

+ python-version: ${{ matrix.python-version }}

+

+ # these libraries enable testing on Qt on linux

+ - uses: tlambert03/setup-qt-libs@v1

+

+ # strategy borrowed from vispy for installing opengl libs on windows

+ - name: Install Windows OpenGL

+ if: runner.os == 'Windows'

+ run: |

+ git clone --depth 1 https://github.com/pyvista/gl-ci-helpers.git

+ powershell gl-ci-helpers/appveyor/install_opengl.ps1

+

+ - name: Install dependencies

+ run: |

+ python -m pip install --upgrade pip

+ python -m pip install setuptools tox tox-gh-actions

+

+ # https://github.com/napari/cookiecutter-napari-plugin/commit/cb9a8c152b68473e8beabf44e7ab11fc46483b5d

+ - name: Test

+ uses: aganders3/headless-gui@v1

+ with:

+ run: python -m tox

+

+ - name: Coverage

+ uses: codecov/codecov-action@v3

diff --git a/.gitignore b/.gitignore

index 29fece40..328ad665 100644

--- a/.gitignore

+++ b/.gitignore

@@ -143,7 +143,7 @@ dmypy.json

# written by setuptools_scm

*/_version.py

-recOrder/_version.py

+waveorder/_version.py

*.autosave

# images

@@ -151,3 +151,7 @@ recOrder/_version.py

*.png

*.tif[f]

*.pdf

+

+# example data

+/examples/data_temp/*

+/logs/*

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 29907895..71f950ec 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -1,4 +1,3 @@

-

repos:

# basic pre-commit

- repo: https://github.com/pre-commit/pre-commit-hooks

@@ -31,4 +30,4 @@ repos:

- repo: https://github.com/psf/black

rev: 25.1.0

hooks:

- - id: black

+ - id: black

\ No newline at end of file

diff --git a/CITATION.cff b/CITATION.cff

index dc624506..3e593ee1 100644

--- a/CITATION.cff

+++ b/CITATION.cff

@@ -43,8 +43,7 @@ identifiers:

- type: url

value: 'https://www.napari-hub.org/plugins/recOrder-napari'

description: >-

- recOrder-napari plugin for label-free imaging that

- depends on waveOrder library

+ waveorder plugin for label-free imaging (TODO: update URL)

- type: doi

value: 10.1364/BOE.455770

description: >-

diff --git a/LICENSE b/LICENSE

index 5f5c2e02..fda16f73 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,6 +1,6 @@

BSD 3-Clause License

-Copyright (c) 2019, Chan Zuckerberg Biohub

+Copyright (c) 2025, Chan Zuckerberg Biohub

Redistribution and use in source and binary forms, with or without

modification, are permitted provided that the following conditions are met:

diff --git a/README.md b/README.md

index 9a3e1d53..8ddb4739 100644

--- a/README.md

+++ b/README.md

@@ -1,4 +1,6 @@

-# waveorder

+

+

+

[](https://pypi.org/project/waveorder)

[](https://pypistats.org/packages/waveorder)

@@ -8,15 +10,21 @@

+Label-agnostic computational microscopy of architectural order.

-This computational imaging library enables wave-optical simulation and reconstruction of optical properties that report microscopic architectural order.

+# Overview

-## Computational label-agnostic imaging

+`waveorder` is a generalist framework for label-agnostic computational microscopy of architectural order, i.e., density, alignment, and orientation of biomolecules with spatial resolution down to the diffraction limit. The framework implements wave-optical simulations and corresponding reconstruction algorithms for diverse label-free and fluorescence computational imaging methods that enable quantitative imaging of the architecture of dynamic cell systems.

+

+Our goal is to enable modular and user-friendly implementations of computational microscopy methods for dynamic imaging across the scales of organelles, cells, and tissues.

+

+

+The framework is described in the following [preprint](https://arxiv.org/abs/2412.09775).

https://github.com/user-attachments/assets/4f9969e5-94ce-4e08-9f30-68314a905db6

+

`waveorder` enables simulations and reconstructions of label-agnostic microscopy data as described in the following [preprint](https://arxiv.org/abs/2412.09775)

- Chandler et al. 2024

-Specifically, `waveorder` enables simulation and reconstruction of 2D or 3D:

+# Computational Microscopy Methods

-1. __phase, projected retardance, and in-plane orientation__ from a polarization-diverse volumetric brightfield acquisition ([QLIPP](https://elifesciences.org/articles/55502)),

+The `waveorder` framework enables simulations and reconstructions of data for diverse one-photon (single-scattering based) computational microscopy methods, summarized below.

-2. __phase__ from a volumetric brightfield acquisition ([2D phase](https://www.osapublishing.org/ao/abstract.cfm?uri=ao-54-28-8566)/[3D phase](https://www.osapublishing.org/ao/abstract.cfm?uri=ao-57-1-a205)),

+## Label-free microscopy

-3. __phase__ from an illumination-diverse volumetric acquisition ([2D](https://www.osapublishing.org/oe/fulltext.cfm?uri=oe-23-9-11394&id=315599)/[3D](https://www.osapublishing.org/boe/fulltext.cfm?uri=boe-7-10-3940&id=349951) differential phase contrast),

+### Quantitative label-free imaging with phase and polarization (QLIPP)

-4. __fluorescence density__ from a widefield volumetric fluorescence acquisition (fluorescence deconvolution).

+Acquisition, calibration, background correction, reconstruction, and applications of QLIPP are described in the following [eLife paper](https://elifesciences.org/articles/55502):

-The [examples](https://github.com/mehta-lab/waveorder/tree/main/examples) demonstrate simulations and reconstruction for 2D QLIPP, 3D PODT, 3D fluorescence deconvolution, and 2D/3D PTI methods.

+[](https://www.youtube.com/watch?v=JEZAaPeZhck)

+

+

+ Guo et al. 2020

+

+@article{guo_2020,

+ author = {Guo, Syuan-Ming and Yeh, Li-Hao and Folkesson, Jenny and Ivanov, Ivan E. and Krishnan, Anitha P. and Keefe, Matthew G. and Hashemi, Ezzat and Shin, David and Chhun, Bryant B. and Cho, Nathan H. and Leonetti, Manuel D. and Han, May H. and Nowakowski, Tomasz J. and Mehta, Shalin B.},

+ title = {Revealing architectural order with quantitative label-free imaging and deep learning},

+ journal = {eLife},

+ volume = {9},

+ pages = {e55502},

+ year = {2020},

+ doi = {10.7554/eLife.55502}

+}

+

+

-If you are interested in deploying QLIPP, phase from brightfield, or fluorescence deconvolution for label-agnostic imaging at scale, checkout our [napari plugin](https://www.napari-hub.org/plugins/recOrder-napari), [`recOrder-napari`](https://github.com/mehta-lab/recOrder).

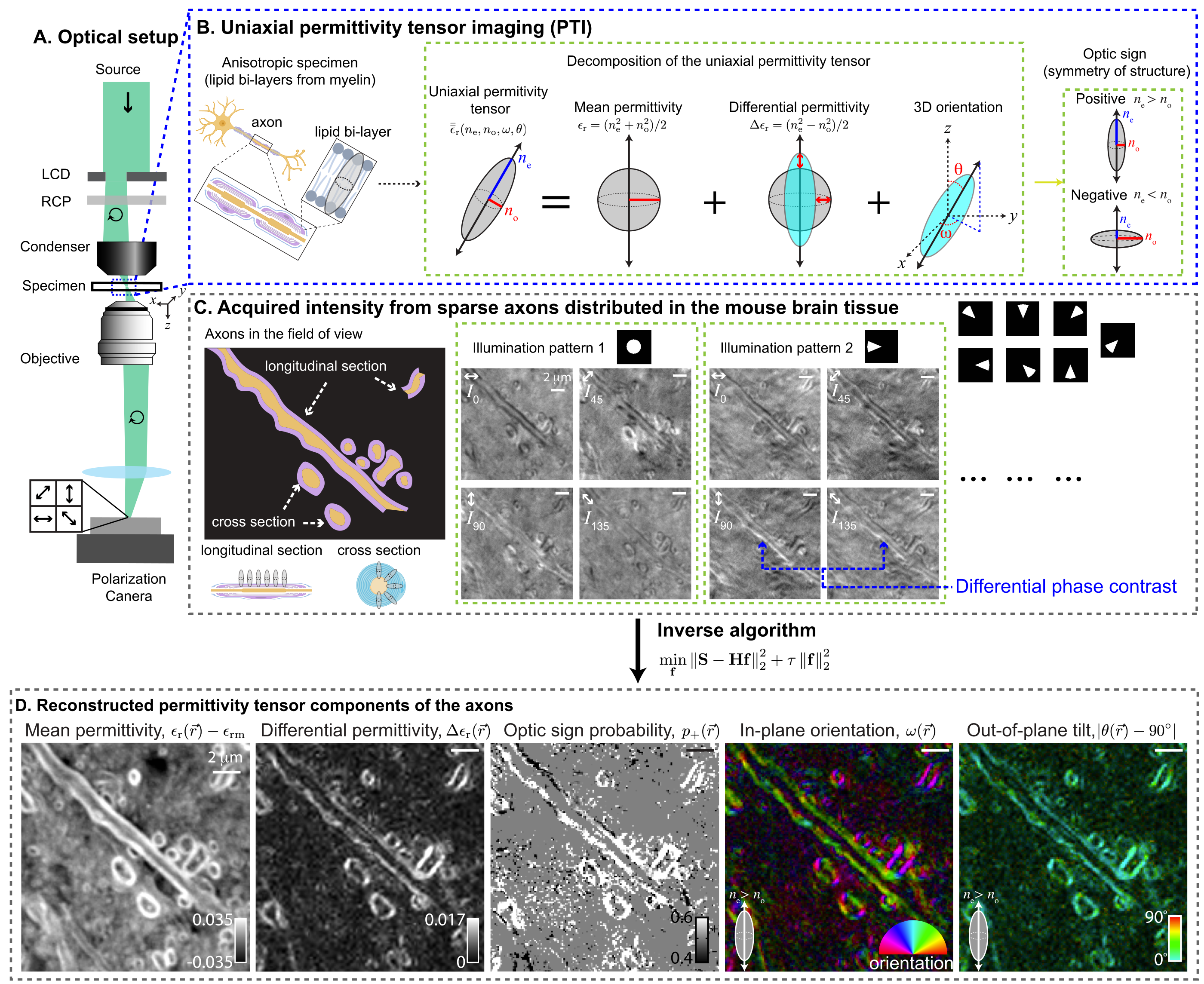

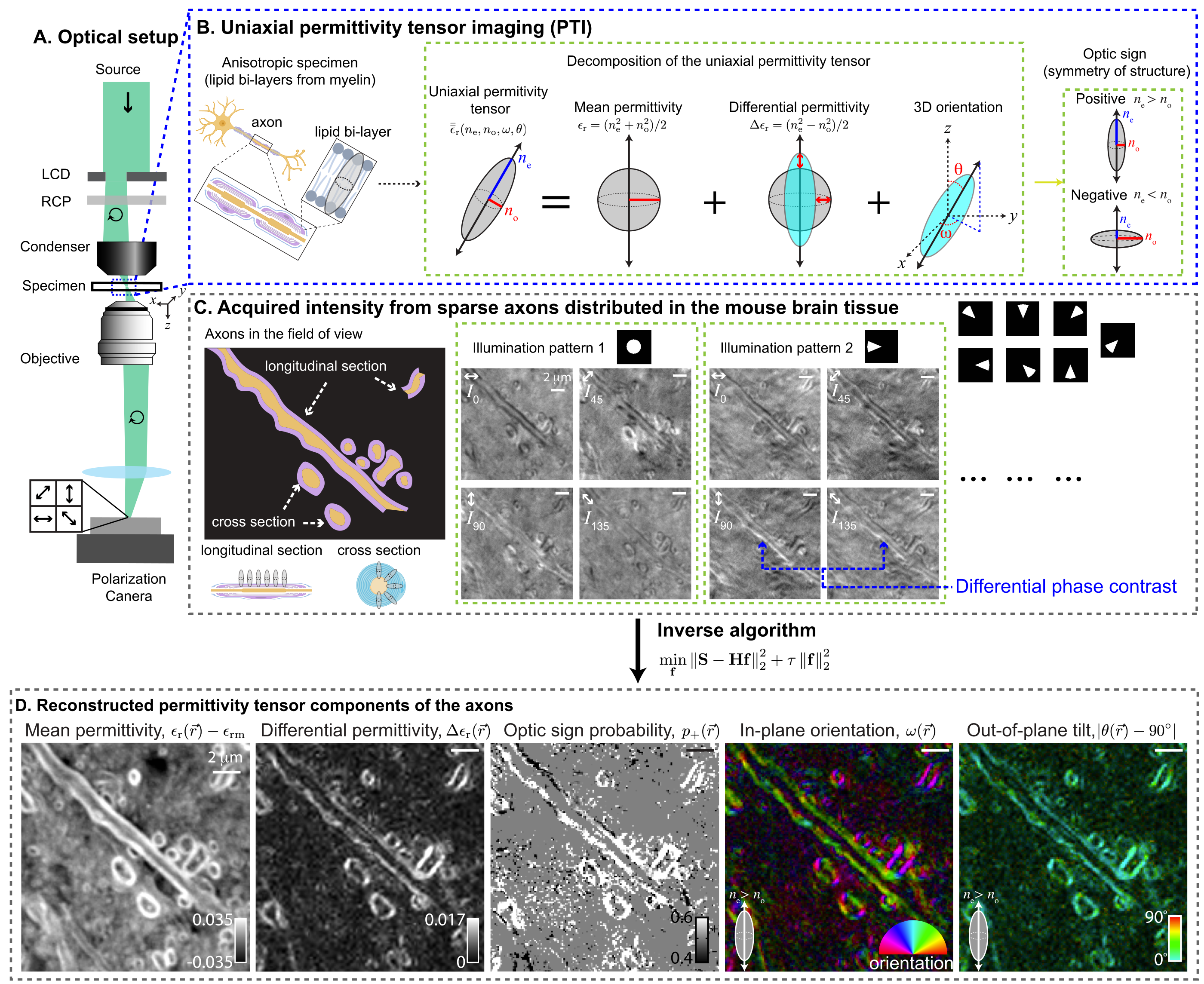

+### Permittivity tensor imaging (PTI)

-## Permittivity tensor imaging

+PTI provides volumetric reconstructions of mean permittivity ($\propto$ material density), differential permittivity ($\propto$ material anisotropy), 3D orientation, and optic sign. The following figure summarizes PTI acquisition and reconstruction with a small optical section of the mouse brain tissue:

-Additionally, `waveorder` enabled the development of a new label-free imaging method, __permittivity tensor imaging (PTI)__, that measures density and 3D orientation of biomolecules with diffraction-limited resolution. These measurements are reconstructed from polarization-resolved images acquired with a sequence of oblique illuminations.

+

The acquisition, calibration, background correction, reconstruction, and applications of PTI are described in the following [paper](https://doi.org/10.1101/2020.12.15.422951) published in Nature Methods:

@@ -70,18 +93,104 @@ The acquisition, calibration, background correction, reconstruction, and applica

-PTI provides volumetric reconstructions of mean permittivity ($\propto$ material density), differential permittivity ($\propto$ material anisotropy), 3D orientation, and optic sign. The following figure summarizes PTI acquisition and reconstruction with a small optical section of the mouse brain tissue:

-

+### Quantitative phase imaging (QPI) from defocus

+__phase__ from a volumetric brightfield acquisition ([2D phase](https://www.osapublishing.org/ao/abstract.cfm?uri=ao-54-28-8566)/[3D phase](https://www.osapublishing.org/ao/abstract.cfm?uri=ao-57-1-a205))

+

+

-## Examples

-The [examples](https://github.com/mehta-lab/waveorder/tree/main/examples) illustrate simulations and reconstruction for 2D QLIPP, 3D phase from brightfield, and 2D/3D PTI methods.

-If you are interested in deploying QLIPP or phase from brightbrield, or fluorescence deconvolution for label-agnostic imaging at scale, checkout our [napari plugin](https://www.napari-hub.org/plugins/recOrder-napari), [`recOrder-napari`](https://github.com/mehta-lab/recOrder).

+

+ Jenkins and Gaylord 2015 (2D QPI from defocus)

+

+ @article{Jenkins:15,

+ author = {Micah H. Jenkins and Thomas K. Gaylord},

+ journal = {Appl. Opt.},

+ keywords = {Phase retrieval; Partial coherence in imaging; Interferometric imaging ; Imaging systems; Microlens arrays; Optical transfer functions; Phase contrast; Spatial resolution; Three dimensional imaging},

+ number = {28},

+ pages = {8566--8579},

+ publisher = {Optica Publishing Group},

+ title = {Quantitative phase microscopy via optimized inversion of the phase optical transfer function},

+ volume = {54},

+ month = {Oct},

+ year = {2015},

+ url = {https://opg.optica.org/ao/abstract.cfm?URI=ao-54-28-8566},

+ doi = {10.1364/AO.54.008566},

+}

+

+

+

+

+ Soto, Rodrigo, and Alieva 2018 (3D QPI from defocus)

+

+@article{Soto:18,

+author = {Juan M. Soto and Jos\'{e} A. Rodrigo and Tatiana Alieva},

+journal = {Appl. Opt.},

+keywords = {Coherence and statistical optics; Image reconstruction techniques; Optical transfer functions; Optical inspection; Three-dimensional microscopy; Acoustooptic modulators; Illumination design; Inverse design; LED sources; Three dimensional imaging; Three dimensional reconstruction},

+number = {1},

+pages = {A205--A214},

+publisher = {Optica Publishing Group},

+title = {Optical diffraction tomography with fully and partially coherent illumination in high numerical aperture label-free microscopy \[Invited\]},

+volume = {57},

+month = {Jan},

+year = {2018},

+url = {https://opg.optica.org/ao/abstract.cfm?URI=ao-57-1-A205},

+doi = {10.1364/AO.57.00A205},

+}

+

+

+

+### QPI with differential phase contrast

+ __phase__ from differential phase contrast

+

+***Work in progress***

+

+* [2D](https://www.osapublishing.org/oe/fulltext.cfm?uri=oe-23-9-11394&id=315599) DPC

+* [3D](https://www.osapublishing.org/boe/fulltext.cfm?uri=boe-7-10-3940&id=349951) DPC

+

+

+## Fluorescence microscopy

+

+### Widefield deconvolution microscopy

+__fluorescence density__ from a widefield volumetric fluorescence acquisition.

+

+

+ Swedlow 2013

+

+@article{ivanov_2024,

+author = {Ivanov, Ivan E. and Hirata-Miyasaki, Eduardo and Chandler, Talon and Cheloor-Kovilakam, Rasmi and Liu, Ziwen and Pradeep, Soorya and Liu, Chad and Bhave, Madhura and Khadka, Sudip and Arias, Carolina and Leonetti, Manuel D. and Huang, Bo and Mehta, Shalin B.},

+title = {Mantis: High-throughput 4D imaging and analysis of the molecular and physical architecture of cells},

+journal = {PNAS Nexus},

+volume = {3},

+number = {9},

+pages = {pgae323},

+year = {2024},

+doi = {10.1093/pnasnexus/pgae323}

+}

+

+

+

## Citation

-Please cite this repository, along with the relevant preprint or paper, if you use or adapt this code. The citation information can be found by clicking "Cite this repository" button in the About section in the right sidebar.

+Please cite this repository, along with the relevant publications and preprints, if you use or adapt this code. The citation information can be found by clicking the "Cite this repository" button in the About section in the right sidebar.

## Installation

@@ -98,21 +207,17 @@ Install `waveorder` from PyPI:

pip install waveorder

```

-Use `waveorder` in your scripts:

-

+(Optional) Install all visualization dependencies (napari, jupyter), clone the repository, and run an example script:

```sh

-python

->>> import waveorder

-```

-

-(Optional) Install example dependencies, clone the repository, and run an example script:

-```sh

-pip install waveorder[examples]

+pip install "waveorder[all]"

git clone https://github.com/mehta-lab/waveorder.git

python waveorder/examples/models/phase_thick_3d.py

```

(M1 users) `pytorch` has [incomplete GPU support](https://github.com/pytorch/pytorch/issues/77764),

so please use `export PYTORCH_ENABLE_MPS_FALLBACK=1`

-to allow some operators to fallback to CPU

-if you plan to use GPU acceleration for polarization reconstruction.

\ No newline at end of file

+to allow some operators to fall back to CPU if you plan to use GPU acceleration for polarization reconstruction.

+

+

+## Examples

+The [examples](https://github.com/mehta-lab/waveorder/tree/main/docs/examples) illustrate simulations and reconstruction for 2D QLIPP, 3D phase from brightfield, and 2D/3D PTI methods.

diff --git a/docs/QLIPP.md b/docs/QLIPP.md

new file mode 100644

index 00000000..bf633095

--- /dev/null

+++ b/docs/QLIPP.md

@@ -0,0 +1,58 @@

+# waveorder

+

+`waveorder` is a collection of computational imaging methods. It currently provides QLIPP (quantitative label-free imaging with phase and polarization), phase from defocus, and fluorescence deconvolution.

+

+These are the kinds of data you can acquire with `waveorder` and QLIPP:

+

+https://user-images.githubusercontent.com/9554101/271128301-cc71da57-df6f-401b-a955-796750a96d88.mov

+

+https://user-images.githubusercontent.com/9554101/271128510-aa2180af-607f-4c0c-912c-c18dc4f29432.mp4

+

+## What do I need to use `waveorder`?

+`waveorder` is to be used alongside a conventional widefield microscope. For QLIPP, the microscope must be fitted with an analyzer and a universal polarizer:

+

+https://user-images.githubusercontent.com/9554101/273073475-70afb05a-1eb7-4019-9c42-af3e07bef723.mp4

+

+For phase-from-defocus or fluorescence deconvolution methods, the universal polarizer is optional.

+

+The overall structure of `waveorder` is shown in Panel B, highlighting the structure of the graphical user interface (GUI) through a napari plugin and the command-line interface (CLI) that allows users to perform reconstructions.

+

+

+

+## Software Quick Start

+

+(Optional but recommended) install [anaconda](https://www.anaconda.com/products/distribution) and create a virtual environment:

+

+```sh

+conda create -y -n waveorder python=3.10

+conda activate waveorder

+```

+

+Install `waveorder` with acquisition dependencies

+(napari with PyQt6 and pycro-manager):

+

+```sh

+pip install "waveorder[all]"

+```

+

+Install `waveorder` without napari, QtBindings (PyQt/PySide) and pycro-manager dependencies:

+

+```sh

+pip install waveorder

+```

+

+Open `napari` with `waveorder`:

+

+```sh

+napari -w waveorder

+```

+

+For more help, see [`waveorder`'s documentation](https://github.com/mehta-lab/waveorder/tree/main/docs). To install `waveorder`

+on a microscope, see the [microscope installation guide](https://github.com/mehta-lab/waveorder/blob/main/docs/microscope-installation-guide.md).

+

+## Dataset

+

+[Slides](https://doi.org/10.5281/zenodo.5135889) and a [dataset](https://doi.org/10.5281/zenodo.5178487) shared during a workshop on QLIPP can be found on Zenodo and in the napari plugin's sample contributions (`File > Open Sample > waveorder` in napari).

+

+[](https://doi.org/10.5281/zenodo.5178487)

+[](https://doi.org/10.5281/zenodo.5135889)

diff --git a/docs/README.md b/docs/README.md

new file mode 100644

index 00000000..526d6ff0

--- /dev/null

+++ b/docs/README.md

@@ -0,0 +1,27 @@

+# Welcome to `waveorder`'s documentation

+

+We have organized our documentation by user type and intended task.

+

+## Software users

+

+**Reconstruct existing data:** start with the [reconstruction guide](./reconstruction-guide.md) and consult the [data schema](./data-schema.md) for `waveorder`'s data format.

+

+**Reconstruct with a GUI:** start with the [plugin's reconstruction guide](./napari-plugin-guide.md#reconstruction-tab).

+

+**Integrate `waveorder` into my software:** start with [`examples/`](./examples/)

+

+## Hardware users

+

+**Buy hardware for a polarized-light installation:** start with the [buyer's guide](./buyers-guide.md).

+

+**Install `waveorder` on my microscope:** start with the [microscope installation guide](./microscope-installation-guide.md).

+

+**Use the `napari plugin` to calibrate:** start with the [plugin guide](./napari-plugin-guide.md).

+

+**Understand `waveorder`'s calibration routine**: read the [calibration guide](./calibration-guide.md).

+

+## Software developers

+

+**Set up a development environment and test `waveorder`**: start with the [development guide](./development-guide.md).

+

+**Report an error in the documentation or code:** [open an issue](https://github.com/mehta-lab/waveorder/issues/new/choose) or [send us an email](mailto:shalin.mehta@czbiohub.org,talon.chandler@czbiohub.org). We appreciate your help!

diff --git a/docs/__init__.py b/docs/__init__.py

new file mode 100644

index 00000000..e69de29b

diff --git a/docs/buyers-guide.md b/docs/buyers-guide.md

new file mode 100644

index 00000000..a6e5b324

--- /dev/null

+++ b/docs/buyers-guide.md

@@ -0,0 +1,39 @@

+# Buyer's Guide

+

+## Quantitative phase imaging:

+

+You can use a transmitted light source (LED or a lamp) and a condenser commonly available on almost all microscopes. In addition to the transmitted light imaging path, you will need a motorized stage for acquiring through-focus image stacks.

+

+## Quantitative polarization imaging (PolScope):

+

+The following list of components assumes that you already have a transmitted light source (LED or a lamp) and a condenser.

+

+Buyers have two options:

+1. buy a complete hardware kit from the OpenPolScope project, or

+2. assemble your own kit piece by piece.

+

+### Buy a kit from the OpenPolScope project

+

+- Read about the [OpenPolScope Hardware Kit](https://openpolscope.org/pages/OPS_Hardware.htm).

+- Complete the [OpenPolScope information request form](https://openpolscope.org/pages/Info_Request_Form.htm).

+

+### Buy individual components

+

+The components are listed in the order in which they process light. The build video below shows how to assemble these components on your microscope.

+

+https://github.com/user-attachments/assets/a0a8bffb-bf81-4401-9ace-3b4955436b57

+

+| Part | Approximate Price | Notes |

+|--------------------------|-------------------|-----------------------------|

+| Illumination filter | $200 | We suggest [a Thorlabs CWL = 530 nm, FWHM = 10 nm bandpass filter](https://www.thorlabs.com/thorproduct.cfm?partnumber=FBH530-10).|

+| Circular polarizer | $350 | We suggest [a Thorlabs 532 nm, left-hand circular polarizer](https://www.thorlabs.com/thorproduct.cfm?partnumber=CP1L532).|

+| Liquid crystal compensator | $6,000 | Meadowlark optics LVR-42x52mm-VIS-ASSY or LVR-50x60mm-VIS-POL-ASSY. Although near-variants are listed in the [Meadowlark catalog](https://www.meadowlark.com/product/liquid-crystal-variable-retarder/), this is a custom part with two liquid crystals in a custom housing. [Contact Meadowlark](https://www.meadowlark.com/contact-us/) for a quote.|

+| Liquid crystal control electronics | $2,000 | [Meadowlark optics D5020-20V](https://www.meadowlark.com/product/liquid-crystal-digital-interface-controller/). Choose the high-voltage 20V version.|

+| Liquid crystal adapter | $25-$500 | A 3D printed part that aligns the liquid crystal compensator in a microscope stand's illumination path. Check for your stand among the [OpenPolScope `.stl` files](https://github.com/amitabhverma/Microscope-LC-adapters/tree/main/stl_files) or [contact us](mailto:compmicro@czbiohub.org) for more options.|

+| Circular analyzer (opposite handedness) | $350 | We suggest [a Thorlabs 532 nm, right-hand circular polarizer](https://www.thorlabs.com/thorproduct.cfm?partnumber=CP1R532).|

+

+If you need help selecting or assembling the components, please start an issue on this GitHub repository or contact us at compmicro@czbiohub.org.

+

+## Quantitative phase and polarization imaging (QLIPP):

+

+Combining the Z-stage and the PolScope components listed above enables joint phase and polarization imaging with `waveorder`.

diff --git a/docs/calibration-guide.md b/docs/calibration-guide.md

new file mode 100644

index 00000000..3692025e

--- /dev/null

+++ b/docs/calibration-guide.md

@@ -0,0 +1,64 @@

+# Calibration guide

+This guide describes `waveorder`'s calibration routine with details about its goals, parameters, and evaluation metrics.

+

+## Why calibrate?

+

+`waveorder` sends commands via Micro-Manager (or a TriggerScope) to apply voltages to the liquid crystals which modify the polarization of the light that illuminates the sample. `waveorder` could apply a fixed set of voltages so the user would never have to worry about these details, but this approach leads to extremely poor performance because

+

+- the sample, the sample holder, lenses, dichroics, and other optical elements introduce small changes in polarization, and

+- the liquid crystals' voltage response drifts over time.

+

+Therefore, recalibrating the liquid crystals regularly (definitely between imaging sessions, often between different samples) is essential for acquiring optimal images.

+

+## Finding the extinction state

+

+Every calibration starts with a routine that finds the **extinction state**: the polarization state (and corresponding voltages) that minimizes the intensity that reaches the camera. If the analyzer is a right-hand-circular polarizer, then the extinction state is the set of voltages that correspond to left-hand-circular light in the sample.

+

+## Setting a goal for the remaining states: swing

+

+After finding the circular extinction state, the calibration routine finds the remaining states. The **swing** parameter sets the target ellipticity of the remaining states and is best understood using [the Poincare sphere](https://en.wikipedia.org/wiki/Unpolarized_light#Poincar%C3%A9_sphere), a diagram that organizes all pure polarization states onto the surface of a sphere.

+

+

+

+On the Poincare sphere, the extinction state corresponds to the north pole, and the swing value corresponds to the targeted line of [colatitude](https://en.m.wikipedia.org/wiki/File:Spherical_Coordinates_%28Colatitude,_Longitude%29.svg) for the remaining states. For example, a swing value of 0.25 (above left) sets the targeted polarization states to the states on the equator: a set of linear polarization states. Similarly, a swing value of 0.125 (above right) sets the targeted polarization states to the states on the line of colatitude 45 degrees ( $\pi$/4 radians) away from the north pole: a set of elliptical polarization states.

+

+The Poincare sphere is also useful for calculating the ratio of intensities measured before and after an analyzer illuminated with a polarized beam. First, find the point on the Poincare sphere that corresponds to the analyzer; in our case we have a right-circular analyzer corresponding to the south pole. Next, find the point that corresponds to the polarization state of the light incident on the analyzer; this could be any arbitrary point on the Poincare sphere. To find the ratio of intensities before and after the analyzer $I/I_0$, find the great-circle angle between the two points on the Poincare sphere, $\alpha$, and calculate $I/I_0 = \cos^2(\alpha/2)$. As expected, coincident points transmit perfectly ( $\alpha = 0$ implies $I/I_0 = 1$), while antipodal points lead to extinction ( $\alpha = \pi$ implies $I/I_0 = 0$).

+

+This geometric construction illustrates that all non-extinction polarization states have the same intensity after the analyzer because they live on the same line of colatitude and have the same great-circle angle to the south pole (the analyzer). We use this fact to help us find our non-extinction states.
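
The geometric construction above can be checked numerically. The following is a minimal sketch, not part of `waveorder`'s API: the function names are hypothetical, and it assumes an ideal right-circular analyzer at the south pole and an extinction state at the north pole, with the swing mapped to a colatitude of $2\pi S$ as in the examples above (0.25 gives the equator, 0.125 gives 45 degrees).

```python
import math


def swing_to_colatitude(swing: float) -> float:
    """Colatitude (radians) measured from the north pole (extinction state).

    Hypothetical helper: a swing of 0.25 maps to the equator (pi/2),
    and a swing of 0.125 maps to pi/4.
    """
    return 2 * math.pi * swing


def transmitted_fraction(alpha: float) -> float:
    """I/I_0 behind an ideal analyzer, where alpha is the great-circle
    angle (radians) between the analyzer and the incident state."""
    return math.cos(alpha / 2) ** 2


def fraction_for_swing(swing: float) -> float:
    """I/I_0 for a state at the given swing, analyzer at the south pole."""
    # Angle to the south pole is pi minus the colatitude from the north pole.
    alpha = math.pi - swing_to_colatitude(swing)
    return transmitted_fraction(alpha)


if __name__ == "__main__":
    for s in (0.0, 0.125, 0.25):
        print(f"swing={s}: I/I0={fraction_for_swing(s):.4f}")
```

Because the result depends only on the colatitude, every state on the same line of colatitude transmits the same fraction, which is the property the calibration relies on.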

+

+Practically, we find our first non-extinction state immediately using the liquid crystal manufacturer's measurements from the factory. In other words, we apply a fixed voltage offset to the extinction-state voltages to find the first non-extinction state, and this requires no iteration or optimization. To find the remaining non-extinction states, we keep the polarization orientation fixed and search through neighboring states with different ellipticity to find states that transmit the same intensity as the first non-extinction state.

+

+## Evaluating a calibration: extinction ratio

+

+At the end of a calibration we report the **extinction ratio**, the ratio of the largest and smallest intensities that the imaging system can transmit above background. This metric measures the quality of the entire optical path including the liquid crystals and their calibrated states, and all depolarization, scattering, or absorption caused by optical elements in the light path will reduce the extinction ratio.

+

+## Calculating extinction ratio from measured intensities (advanced topic)

+

+To calculate the extinction ratio, we could optimize the liquid crystal voltages to maximize measured intensity then calculate the ratio of that result with the earlier extinction intensity, but this approach requires a time-consuming optimization and it does not characterize the quality of the calibrated states of the liquid crystals.

+

+Instead, we estimate the extinction ratio from the intensities we measure during the calibration process. Specifically, we measure the black-level intensity $I_{\text{bl}}$, the extinction intensity $I_{\text{ext}}$, and the intensity under the first elliptical state $I_{\text{ellip}}(S)$ where $S$ is the swing. We proceed to algebraically express the extinction ratio in terms of these three quantities.

+

+We can decompose $I_{\text{ellip}}(S)$ into a constant term $I_{\text{ellip}}(0) = I_{\text{ext}}$, and a modulation term given by

+

+$$I_{\text{ellip}}(S) = I_{\text{mod}}\sin^2(\pi S) + I_{\text{ext}},\qquad\qquad (1)$$

+where $I_{\text{mod}}$ is the modulation depth, and the $\sin^2(\pi S)$ term can be understood using the Poincare sphere (the intensity behind the circular analyzer is proportional to $\cos^2(\alpha/2)$ and for a given swing we have $\alpha = \pi - 2\pi S$ so $\cos^2(\frac{\pi - 2\pi S}{2}) = \sin^2(\pi S)$ ).

+

+Next, we decompose $I_{\text{ext}}$ into the sum of two terms, the black level intensity and a leakage intensity $I_{\text{leak}}$

+$$I_{\text{ext}} = I_{\text{bl}} + I_{\text{leak}}.\qquad\qquad (2)$$

+

+The following diagram clarifies our definitions and shows how the measured $I_{\text{ellip}}(S)$ depends on the swing (green line).

+

+

+

+The extinction ratio is the ratio of the largest and smallest intensities that the imaging system can transmit above background, which is most easily expressed in terms of $I_{\text{mod}}$ and $I_{\text{leak}}$

+$$\text{Extinction Ratio} = \frac{I_{\text{mod}} + I_{\text{leak}}}{I_{\text{leak}}}.\qquad\qquad (3)$$

+

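+Solving Eq. (1) for $I_{\text{mod}} = [I_{\text{ellip}}(S) - I_{\text{ext}}]/\sin^2(\pi S)$ and Eq. (2) for $I_{\text{leak}} = I_{\text{ext}} - I_{\text{bl}}$, then substituting both into Eq. (3), gives the extinction ratio in terms of the measured intensities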

+$$\text{Extinction Ratio} = \frac{1}{\sin^2(\pi S)}\frac{I_{\text{ellip}}(S) - I_{\text{ext}}}{I_{\text{ext}} - I_{\text{bl}}} + 1.$$
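As a minimal sketch of the final expression, the following Python function evaluates the extinction ratio from the three measured intensities and the swing. The function name `extinction_ratio` and its argument names are hypothetical choices for illustration and are not taken from `waveorder`'s API.

```python
import math


def extinction_ratio(i_ellip, i_ext, i_bl, swing):
    """Estimate the extinction ratio from calibration intensities.

    i_ellip : intensity measured in the first elliptical state, I_ellip(S)
    i_ext   : intensity measured in the extinction state, I_ext
    i_bl    : black-level intensity, I_bl
    swing   : the swing S, as it appears in Eq. (1)
    """
    leak = i_ext - i_bl  # Eq. (2): leakage intensity above the black level
    if leak <= 0:
        raise ValueError("extinction intensity must exceed the black level")
    modulation = math.sin(math.pi * swing) ** 2
    if modulation == 0:
        raise ValueError("swing must give a nonzero sin^2(pi * S)")
    i_mod = (i_ellip - i_ext) / modulation  # Eq. (1) solved for I_mod
    return i_mod / leak + 1  # Eq. (3)
```

The two guards reflect where the formula breaks down: a leakage intensity at or below the black level, or a swing for which the modulation term vanishes.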

+

+## Summary: `waveorder`'s step-by-step calibration procedure

+1. Close the shutter, measure the black level, then reopen the shutter.

+2. Find the extinction state by finding voltages that minimize the intensity that reaches the camera.

+3. Use the swing value to immediately find the first elliptical state, and record the intensity on the camera.

+4. For each remaining elliptical state, keep the polarization orientation fixed and optimize the voltages to match the intensity of the first elliptical state.

+5. Store the voltages and calculate the extinction ratio.

diff --git a/docs/data-schema.md b/docs/data-schema.md

new file mode 100644

index 00000000..a92bc72f

--- /dev/null

+++ b/docs/data-schema.md

@@ -0,0 +1,47 @@

+# Data schema

+

+This document defines the standard for data acquired with `waveorder`.

+

+## Raw directory organization

+

+Currently, we structure raw data in the following hierarchy:

+

+```text

+working_directory/ # commonly YYYY_MM_DD_exp_name, but not enforced

+├── polarization_calibration_0.txt

+│ ...

+├── polarization_calibration_.txt # i calibration repeats

+│

+├── bg_0

+│ ...

+├── bg_ # j background repeats

+│ ├── background.zarr

+│ ├── polarization_calibration.txt # copied into each bg folder

+│ ├── reconstruction.zarr

+│ ├── reconstruction_settings.yml # for use with `waveorder reconstruct`

+│ └── transfer_function.zarr # for use with `waveorder apply-inv-tf`

+│

+├── _snap_0

+├── _snap_1

+│ ├── raw_data.zarr

+│ ├── reconstruction.zarr

+│ ├── reconstruction_settings.yml

+│ └── transfer_function.zarr

+│ ...

+├── _snap_ # k repeats with the first acquisition name

+│ ├── raw_data.zarr

+│ ├── reconstruction.zarr

+│ ├── reconstruction_settings.yml

+│ └── transfer_function.zarr

+│ ...

+│

+├── _snap_0 # l different acquisition names

+│ ...

+├── _snap_ # m repeats for this acquisition name

+ ├── raw_data.zarr

+ ├── reconstruction.zarr

+ ├── reconstruction_settings.yml

+ └── transfer_function.zarr

+```

+

+Each `.zarr` contains an [OME-NGFF v0.4](https://ngff.openmicroscopy.org/0.4/) in HCS format with a single field of view.
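To illustrate the hierarchy above, here is a minimal sketch that lists the `.zarr` stores found in each subfolder of a working directory. It uses only the standard library, and the function name `list_zarr_stores` is a hypothetical helper, not part of `waveorder`.

```python
from pathlib import Path


def list_zarr_stores(working_directory):
    """Map each subfolder name to the sorted names of the `.zarr` stores it holds."""
    stores = {}
    for folder in sorted(Path(working_directory).iterdir()):
        if not folder.is_dir():
            continue  # skip top-level files such as the calibration .txt files
        zarrs = sorted(p.name for p in folder.glob("*.zarr"))
        if zarrs:
            stores[folder.name] = zarrs
    return stores
```

Each store found this way can then be opened with an OME-NGFF reader of your choice.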

diff --git a/docs/development-guide.md b/docs/development-guide.md

new file mode 100644

index 00000000..4d16fdec

--- /dev/null

+++ b/docs/development-guide.md

@@ -0,0 +1,105 @@

+# `waveorder` development guide

+

+## Install `waveorder` for development

+

+1. Install [conda](https://github.com/conda-forge/miniforge) and create a virtual environment:

+

+ ```sh

+ conda create -y -n waveorder python=3.10

+ conda activate waveorder

+ ```

+

+2. Clone the `waveorder` repository:

+

+ ```sh

+ git clone https://github.com/mehta-lab/waveorder.git

+ ```

+

+3. Install `waveorder` in editable mode with development dependencies:

+

+ ```sh

+ cd waveorder

+ pip install -e ".[all,dev]"

+ ```

+

+## Set up a development environment

+

+### Code linting

+

+We do not currently specify a code linter because most modern Python code editors include one. If yours does not, add a linter plugin to your editor to help catch bugs before you commit!

+

+### Code formatting

+

+We use `black` to format Python code, and a specific version is installed as a development dependency. Use the `black` in the `waveorder` virtual environment, either from the command line or from the editor of your choice.

+

+> *VS Code users*: Install the [Black Formatter](https://marketplace.visualstudio.com/items?itemName=ms-python.black-formatter) plugin. Press `^/⌘ ⇧ P` and type 'format document with...', choose the Black Formatter and start formatting!

+

+### Docstring style

+

+`waveorder` uses [NumPy style](https://numpydoc.readthedocs.io/en/latest/format.html) docstrings.

+

+> *VS Code users*: [this popular plugin](https://marketplace.visualstudio.com/items?itemName=njpwerner.autodocstring) helps auto-generate most popular docstring styles (including `numpydoc`).

+

+## Run automated tests

+

+From within the `waveorder` directory run:

+

+```sh

+pytest

+```

+

+Running `pytest` for the first time will download ~50 MB of test data from Zenodo, and subsequent runs will reuse the downloaded data.

+

+## Run manual tests

+

+Although many of `waveorder`'s tests are automated, many features require manual testing. The following is a summary of features that need to be tested manually before release:

+

+* Install a compatible version of Micro-Manager and check that `waveorder` can connect.

+* Perform calibrations with and without an ROI; with and without a shutter configured in Micro-Manager; in 4- and 5-state modes; and in MM-Voltage, MM-Retardance, and DAC modes (if the TriggerScope is available).

+* Test "Load Calibration" and "Calculate Extinction" buttons.

+* Test "Capture Background" button.

+* Test the "Acquire Birefringence" button on a background FOV. Does a background-corrected background acquisition give random orientations?

+* Test the four "Acquire" buttons with varied combinations of 2D/3D, background correction settings, "Phase from BF" checkbox, and regularization parameters.

+* Use the data you collected to test "Offline" mode reconstructions with varied combinations of parameters.

+

+## GUI development

+

+We use `Qt Creator` for large parts of `waveorder`'s GUI. To modify the GUI, install `Qt Creator` from [its website](https://www.qt.io/product/development-tools) or with `brew install --cask qt-creator`.

+

+Open `./waveorder/plugin/gui.ui` in `Qt Creator` and make your changes.

+

+Next, convert the `.ui` to a `.py` file with:

+

+```sh

+pyuic5 -x gui.ui -o gui.py

+```

+

+Note: `pyuic5` is installed alongside `PyQt5`, so you can expect to find it installed in your `waveorder` conda environment.

+

+Finally, change the `gui.py` file to import `qtpy` instead of `PyQt5` to adhere to [napari plugin best practices](https://napari.org/stable/plugins/best_practices.html#don-t-include-pyside2-or-pyqt5-in-your-plugin-s-dependencies).

+On macOS, you can modify the file in place with:

+

+```sh

+sed -i '' 's/from PyQt5/from qtpy/g' gui.py

+```

+

+> This syntax is specific to BSD `sed`; omit `''` with GNU `sed`.
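Because the `sed -i` syntax differs between BSD and GNU, a platform-independent alternative may be convenient. The following is a minimal sketch in Python that performs the same text substitution; the function name `use_qtpy_imports` is hypothetical and the script is not part of the `waveorder` repository.

```python
from pathlib import Path


def use_qtpy_imports(path):
    """Rewrite `from PyQt5` to `from qtpy` in place and return the replacement count."""
    file = Path(path)
    text = file.read_text()
    count = text.count("from PyQt5")
    file.write_text(text.replace("from PyQt5", "from qtpy"))
    return count
```

Calling `use_qtpy_imports("gui.py")` from the folder that contains the generated file applies the same substitution as the `sed` command above.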

+

+Note: although much of the GUI is specified in the generated `gui.py` file, the `main_widget.py` file makes extensive modifications to the GUI.

+

+## Make `git blame` ignore formatting commits

+

+**Note:** `git --version` must be `>=2.23` to use this feature.

+

+If you would like `git blame` to ignore formatting commits, run this line:

+

+```sh

+ git config --global blame.ignoreRevsFile .git-blame-ignore-revs

+```

+

+The `.git-blame-ignore-revs` file contains a list of commit hashes corresponding to formatting commits.

+If you make a formatting commit, please add the commit's hash to this file.

+

+## Pre-release checklist

+- merge `README.md` figures to `main`, then update the links to point to these uploaded figures. We do not upload figures to PyPI, so without this step the README figure will not appear on PyPI or napari-hub.

+- update version numbers and links in [the microscope dependency guide](./microscope-installation-guide.md).

diff --git a/docs/examples/README.md b/docs/examples/README.md

new file mode 100644

index 00000000..96278b6d

--- /dev/null

+++ b/docs/examples/README.md

@@ -0,0 +1,11 @@

+`waveorder` is undergoing a significant refactor, and this `examples/` folder serves as a good place to understand the current state of the repository.

+

+Most examples require `pip install waveorder[all]` for `napari` and `jupyter`. Visit the [napari installation guide](https://napari.org/dev/tutorials/fundamentals/installation.html) if napari installation fails.

+

+| Folder | Requires | Description |

+|------------------|----------------------------|-------------------------------------------------------------------------------------------------------|

+| `configs/` | `pip install waveorder[all]` | Demonstrates `waveorder`'s config-file-based command-line interface. |

+| `models/` | `pip install waveorder[all]` | Demonstrates the latest functionality of `waveorder` through simulations and reconstructions using various models. |

+| `maintenance/` | `pip install waveorder` | Examples of computational imaging methods enabled by functionality of `waveorder`; scripts are maintained with automated tests. |

+| `visuals/` | `pip install waveorder[all]` | Visualizations of transfer functions and Green's tensors. |

+| `deprecated/` | `pip install waveorder[all]`, complete datasets | Provides examples of real-data reconstructions; serves as documentation and is not actively maintained. |

\ No newline at end of file

diff --git a/docs/examples/configs/README.md b/docs/examples/configs/README.md

new file mode 100644

index 00000000..c02d993a

--- /dev/null

+++ b/docs/examples/configs/README.md

@@ -0,0 +1,70 @@

+# `waveorder` CLI examples

+

+`waveorder` uses a configuration-file-based command-line interface (CLI) to

+calculate transfer functions and apply these transfer functions to datasets.

+

+This page demonstrates `waveorder`'s CLI.

+

+## Getting started

+

+### 1. Check your installation

+First, [install `waveorder`](../docs/software-installation-guide.md) and run

+```bash

+waveorder

+```

+in a shell. If `waveorder` is installed correctly, you will see a usage string and

+```

+waveorder: Computational Toolkit for Label-Free Imaging

+```

+

+### 2. Download and convert a test dataset

+Next, [download the test data from zenodo (47 MB)](https://zenodo.org/record/6983916/files/recOrder_test_data.zip?download=1), and convert a dataset to the latest version of `.zarr` with

+```

+cd /path/to/

+iohub convert -i /path/to/test_data/2022_08_04_recOrder_pytest_20x_04NA/2T_3P_16Z_128Y_256X_Kazansky_1/ -o ./dataset.zarr

+```

+

+You can view the test dataset with

+```

+napari ./dataset.zarr --plugin waveorder

+```

+

+### 3. Run a reconstruction

+Run an example reconstruction with

+```

+waveorder reconstruct ./dataset.zarr/0/0/0 -c /path/to/waveorder/examples/settings/birefringence-and-phase.yml -o ./reconstruction.zarr

+```

+then view the reconstruction with

+```

+napari ./reconstruction.zarr --plugin waveorder

+```

+

+Try modifying the configuration file to see how the regularization parameter changes the results.

+

+## FAQ

+1. **Q: Which configuration file should I use?**

+

+ If you are acquiring:

+

+ **3D data with calibrated liquid-crystal polarizers via `waveorder`** use `birefringence.yml`.

+

+ **3D fluorescence data** use `fluorescence.yml`.

+

+ **3D brightfield data** use `phase.yml`.

+

+ **Multi-modal data**, start by reconstructing the individual modalities, each with a single config file and CLI call. Then combine the reconstructions by ***TODO: @Ziwen do can you help me append to the zarrs to help me fix this? ***

+

+2. **Q: Should I use `reconstruction_dimension` = 2 or 3?**

+

+ If your downstream processing requires 3D information or if you're unsure, then you should use `reconstruction_dimension = 3`. If your sample is very thin compared to the depth of field of the microscope, if you're in a noise-limited regime, or if your downstream processing requires 2D information, then you should use `reconstruction_dimension = 2`. Empirically, we have found that 2D reconstructions reduce the noise in our reconstructions because they use 3D information to make a single estimate for each pixel.

+

+3. **Q: What regularization parameter should I use?**

+

+ We recommend starting with the defaults then testing over a few orders of magnitude and choosing a result that isn't too noisy or too smooth.
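One way to test over a few orders of magnitude is to generate a copy of a configuration file for each candidate value. The sketch below does this with plain text substitution so that it does not assume any particular YAML library; the function name `write_regularization_sweep`, the default strengths, and the output file naming are all hypothetical choices for illustration.

```python
import re
from pathlib import Path


def write_regularization_sweep(
    config_path, output_dir, strengths=(1e-4, 1e-3, 1e-2, 1e-1)
):
    """Write one copy of the config per regularization strength; return the new paths."""
    config_path = Path(config_path)
    template = config_path.read_text()
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for strength in strengths:
        # Replace the value on every `regularization_strength:` line,
        # leaving all other settings untouched.
        text = re.sub(
            r"(regularization_strength:\s*)\S+",
            lambda m: f"{m.group(1)}{strength:g}",
            template,
        )
        path = output_dir / f"{config_path.stem}_reg_{strength:g}.yml"
        path.write_text(text)
        paths.append(path)
    return paths
```

Each generated file can then be passed to `waveorder reconstruct` with the `-c` option, and the resulting reconstructions compared side by side.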

+

+### Developers note

+

+These configuration files are automatically generated when the tests run. See `/tests/cli_tests/test_settings.py` - `test_generate_example_settings`.

+

+To keep these settings up to date, run `pytest` locally when `cli/settings.py` changes.

diff --git a/docs/examples/configs/birefringence-and-phase.yml b/docs/examples/configs/birefringence-and-phase.yml

new file mode 100644

index 00000000..6c53dfe6

--- /dev/null

+++ b/docs/examples/configs/birefringence-and-phase.yml

@@ -0,0 +1,31 @@

+input_channel_names:

+- State0

+- State1

+- State2

+- State3

+time_indices: all

+reconstruction_dimension: 3

+birefringence:

+ transfer_function:

+ swing: 0.1

+ apply_inverse:

+ wavelength_illumination: 0.532

+ background_path: ''

+ remove_estimated_background: false

+ flip_orientation: false

+ rotate_orientation: false

+phase:

+ transfer_function:

+ wavelength_illumination: 0.532

+ yx_pixel_size: 0.325

+ z_pixel_size: 2.0

+ z_padding: 0

+ index_of_refraction_media: 1.3

+ numerical_aperture_detection: 1.2

+ numerical_aperture_illumination: 0.5

+ invert_phase_contrast: false

+ apply_inverse:

+ reconstruction_algorithm: Tikhonov

+ regularization_strength: 0.001

+ TV_rho_strength: 0.001

+ TV_iterations: 1

diff --git a/docs/examples/configs/birefringence.yml b/docs/examples/configs/birefringence.yml

new file mode 100644

index 00000000..ed50536c

--- /dev/null

+++ b/docs/examples/configs/birefringence.yml

@@ -0,0 +1,16 @@

+input_channel_names:

+- State0

+- State1

+- State2

+- State3

+time_indices: all

+reconstruction_dimension: 3

+birefringence:

+ transfer_function:

+ swing: 0.1

+ apply_inverse:

+ wavelength_illumination: 0.532

+ background_path: ''

+ remove_estimated_background: false

+ flip_orientation: false

+ rotate_orientation: false

diff --git a/docs/examples/configs/fluorescence.yml b/docs/examples/configs/fluorescence.yml

new file mode 100644

index 00000000..3e84d884

--- /dev/null

+++ b/docs/examples/configs/fluorescence.yml

@@ -0,0 +1,17 @@

+input_channel_names:

+- GFP

+time_indices: all

+reconstruction_dimension: 3

+fluorescence:

+ transfer_function:

+ yx_pixel_size: 0.325

+ z_pixel_size: 2.0

+ z_padding: 0

+ index_of_refraction_media: 1.3

+ numerical_aperture_detection: 1.2

+ wavelength_emission: 0.507

+ apply_inverse:

+ reconstruction_algorithm: Tikhonov

+ regularization_strength: 0.001

+ TV_rho_strength: 0.001

+ TV_iterations: 1

diff --git a/docs/examples/configs/phase.yml b/docs/examples/configs/phase.yml

new file mode 100644

index 00000000..381b487e

--- /dev/null

+++ b/docs/examples/configs/phase.yml

@@ -0,0 +1,19 @@

+input_channel_names:

+- BF

+time_indices: all

+reconstruction_dimension: 3

+phase:

+ transfer_function:

+ wavelength_illumination: 0.532

+ yx_pixel_size: 0.325

+ z_pixel_size: 2.0

+ z_padding: 0

+ index_of_refraction_media: 1.3

+ numerical_aperture_detection: 1.2

+ numerical_aperture_illumination: 0.5

+ invert_phase_contrast: false

+ apply_inverse:

+ reconstruction_algorithm: Tikhonov

+ regularization_strength: 0.001

+ TV_rho_strength: 0.001

+ TV_iterations: 1

diff --git a/examples/documentation/PTI_experiment/PTI_Experiment_Recon3D_anisotropic_target_small.py b/docs/examples/deprecated/PTI_experiment/PTI_Experiment_Recon3D_anisotropic_target_small.py

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_Experiment_Recon3D_anisotropic_target_small.py

rename to docs/examples/deprecated/PTI_experiment/PTI_Experiment_Recon3D_anisotropic_target_small.py

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_anisotropic_target.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_anisotropic_target.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_anisotropic_target.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_anisotropic_target.ipynb

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_cardiac_muscle.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiac_muscle.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_cardiac_muscle.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiac_muscle.ipynb

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_1.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_1.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_1.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_1.ipynb

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_2.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_2.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_2.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiomyocyte_infected_2.ipynb

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_cardiomyocyte_mock.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiomyocyte_mock.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_cardiomyocyte_mock.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_cardiomyocyte_mock.ipynb

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_human_uterus.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_human_uterus.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_human_uterus.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_human_uterus.ipynb

diff --git a/examples/documentation/PTI_experiment/PTI_full_FOV_mouse_brain_aco.ipynb b/docs/examples/deprecated/PTI_experiment/PTI_full_FOV_mouse_brain_aco.ipynb

similarity index 100%

rename from examples/documentation/PTI_experiment/PTI_full_FOV_mouse_brain_aco.ipynb

rename to docs/examples/deprecated/PTI_experiment/PTI_full_FOV_mouse_brain_aco.ipynb

diff --git a/examples/documentation/PTI_experiment/README.md b/docs/examples/deprecated/PTI_experiment/README.md

similarity index 100%

rename from examples/documentation/PTI_experiment/README.md

rename to docs/examples/deprecated/PTI_experiment/README.md

diff --git a/examples/documentation/QLIPP_experiment/2D_QLIPP_recon_experiment.ipynb b/docs/examples/deprecated/QLIPP_experiment/2D_QLIPP_recon_experiment.ipynb

similarity index 100%

rename from examples/documentation/QLIPP_experiment/2D_QLIPP_recon_experiment.ipynb

rename to docs/examples/deprecated/QLIPP_experiment/2D_QLIPP_recon_experiment.ipynb

diff --git a/examples/documentation/QLIPP_experiment/3D_QLIPP_recon_experiment.ipynb b/docs/examples/deprecated/QLIPP_experiment/3D_QLIPP_recon_experiment.ipynb

similarity index 100%

rename from examples/documentation/QLIPP_experiment/3D_QLIPP_recon_experiment.ipynb

rename to docs/examples/deprecated/QLIPP_experiment/3D_QLIPP_recon_experiment.ipynb

diff --git a/examples/documentation/fluorescence_deconvolution/fluorescence_deconv.ipynb b/docs/examples/deprecated/fluorescence_deconvolution/fluorescence_deconv.ipynb

similarity index 100%

rename from examples/documentation/fluorescence_deconvolution/fluorescence_deconv.ipynb

rename to docs/examples/deprecated/fluorescence_deconvolution/fluorescence_deconv.ipynb

diff --git a/examples/maintenance/PTI_simulation/PTI_Simulation_Forward_2D3D.py b/docs/examples/maintenance/PTI_simulation/PTI_Simulation_Forward_2D3D.py

similarity index 98%

rename from examples/maintenance/PTI_simulation/PTI_Simulation_Forward_2D3D.py

rename to docs/examples/maintenance/PTI_simulation/PTI_Simulation_Forward_2D3D.py

index 85d05f89..41831ca2 100644

--- a/examples/maintenance/PTI_simulation/PTI_Simulation_Forward_2D3D.py

+++ b/docs/examples/maintenance/PTI_simulation/PTI_Simulation_Forward_2D3D.py

@@ -9,9 +9,12 @@

# density and anisotropy," bioRxiv 2020.12.15.422951 (2020).``` #

####################################################################

+from pathlib import Path

+

import matplotlib.pyplot as plt

import numpy as np

from numpy.fft import fftshift

+from platformdirs import user_data_dir

from waveorder import optics, util, waveorder_simulator

from waveorder.visuals import jupyter_visuals

@@ -481,8 +484,8 @@

# #################################

# Save simulations

-

-output_dir = "./"

+temp_dirpath = Path(user_data_dir("PTI_simulation"))

+temp_dirpath.mkdir(parents=True, exist_ok=True)

if sample_type == "3D":

output_file = "PTI_simulation_data_NA_det_147_NA_illu_140_3D_spoke_discrete_no_1528_ne_1553_no_noise_Born"

@@ -491,8 +494,10 @@

else:

print("sample_type needs to be 2D or 3D.")

+output_path = temp_dirpath / output_file

+

np.savez(

- output_dir + output_file,

+ output_path,

I_meas=I_meas_noise,

lambda_illu=lambda_illu,

n_media=n_media,

diff --git a/examples/maintenance/PTI_simulation/PTI_Simulation_Recon2D.py b/docs/examples/maintenance/PTI_simulation/PTI_Simulation_Recon2D.py

similarity index 97%

rename from examples/maintenance/PTI_simulation/PTI_Simulation_Recon2D.py

rename to docs/examples/maintenance/PTI_simulation/PTI_Simulation_Recon2D.py

index 646cbb2b..ffadd88b 100644

--- a/examples/maintenance/PTI_simulation/PTI_Simulation_Recon2D.py

+++ b/docs/examples/maintenance/PTI_simulation/PTI_Simulation_Recon2D.py

@@ -9,17 +9,23 @@

# density and anisotropy," bioRxiv 2020.12.15.422951 (2020).``` #

####################################################################

+from pathlib import Path

+

import matplotlib.pyplot as plt

import numpy as np

from numpy.fft import fftshift

+from platformdirs import user_data_dir

from waveorder import optics, waveorder_reconstructor

from waveorder.visuals import jupyter_visuals

## Initialization

## Load simulated images and parameters

-

-file_name = "./PTI_simulation_data_NA_det_147_NA_illu_140_2D_spoke_discrete_no_1528_ne_1553_no_noise_Born.npz"

+temp_dirpath = Path(user_data_dir("PTI_simulation"))

+file_name = (

+ temp_dirpath

+ / "PTI_simulation_data_NA_det_147_NA_illu_140_2D_spoke_discrete_no_1528_ne_1553_no_noise_Born.npz"

+)

array_loaded = np.load(file_name)

list_of_array_names = sorted(array_loaded)

diff --git a/examples/maintenance/PTI_simulation/PTI_Simulation_Recon3D.py b/docs/examples/maintenance/PTI_simulation/PTI_Simulation_Recon3D.py

similarity index 98%

rename from examples/maintenance/PTI_simulation/PTI_Simulation_Recon3D.py

rename to docs/examples/maintenance/PTI_simulation/PTI_Simulation_Recon3D.py

index 115458e0..8dacbc90 100644

--- a/examples/maintenance/PTI_simulation/PTI_Simulation_Recon3D.py

+++ b/docs/examples/maintenance/PTI_simulation/PTI_Simulation_Recon3D.py

@@ -8,17 +8,23 @@

# "uPTI: uniaxial permittivity tensor imaging of intrinsic #

# density and anisotropy," bioRxiv 2020.12.15.422951 (2020).``` #

####################################################################

+from pathlib import Path

+

import matplotlib.pyplot as plt

import numpy as np

from numpy.fft import fftshift

+from platformdirs import user_data_dir

from waveorder import optics, waveorder_reconstructor

from waveorder.visuals import jupyter_visuals

## Initialization

## Load simulated images and parameters

-

-file_name = "./PTI_simulation_data_NA_det_147_NA_illu_140_2D_spoke_discrete_no_1528_ne_1553_no_noise_Born.npz"

+temp_dirpath = Path(user_data_dir("PTI_simulation"))

+file_name = (

+ temp_dirpath

+ / "PTI_simulation_data_NA_det_147_NA_illu_140_2D_spoke_discrete_no_1528_ne_1553_no_noise_Born.npz"

+)

array_loaded = np.load(file_name)

list_of_array_names = sorted(array_loaded)

diff --git a/examples/maintenance/PTI_simulation/PTI_formulation.html b/docs/examples/maintenance/PTI_simulation/PTI_formulation.html

similarity index 100%

rename from examples/maintenance/PTI_simulation/PTI_formulation.html

rename to docs/examples/maintenance/PTI_simulation/PTI_formulation.html

diff --git a/examples/maintenance/PTI_simulation/README.md b/docs/examples/maintenance/PTI_simulation/README.md

similarity index 100%

rename from examples/maintenance/PTI_simulation/README.md

rename to docs/examples/maintenance/PTI_simulation/README.md

diff --git a/examples/maintenance/QLIPP_simulation/2D_QLIPP_forward.py b/docs/examples/maintenance/QLIPP_simulation/2D_QLIPP_forward.py

similarity index 94%

rename from examples/maintenance/QLIPP_simulation/2D_QLIPP_forward.py

rename to docs/examples/maintenance/QLIPP_simulation/2D_QLIPP_forward.py

index fd7b2c41..43845335 100644

--- a/examples/maintenance/QLIPP_simulation/2D_QLIPP_forward.py

+++ b/docs/examples/maintenance/QLIPP_simulation/2D_QLIPP_forward.py

@@ -10,9 +10,12 @@

#####################################################################################################

+from pathlib import Path

+

import matplotlib.pyplot as plt

import numpy as np

from numpy.fft import fftshift

+from platformdirs import user_data_dir

from waveorder import optics, util, waveorder_simulator

from waveorder.visuals import jupyter_visuals

@@ -103,7 +106,9 @@

).astype("float64")

# Save simulation

-output_file = "./2D_QLIPP_simulation.npz"

+temp_dirpath = Path(user_data_dir("QLIPP_simulation"))

+temp_dirpath.mkdir(parents=True, exist_ok=True)

+output_file = temp_dirpath / "2D_QLIPP_simulation.npz"

np.savez(

output_file,

I_meas=I_meas_noise,

diff --git a/examples/maintenance/QLIPP_simulation/2D_QLIPP_recon.py b/docs/examples/maintenance/QLIPP_simulation/2D_QLIPP_recon.py

similarity index 96%

rename from examples/maintenance/QLIPP_simulation/2D_QLIPP_recon.py

rename to docs/examples/maintenance/QLIPP_simulation/2D_QLIPP_recon.py

index f8a8583a..2b49bd6c 100644

--- a/examples/maintenance/QLIPP_simulation/2D_QLIPP_recon.py

+++ b/docs/examples/maintenance/QLIPP_simulation/2D_QLIPP_recon.py

@@ -10,8 +10,11 @@

# eLife 9:e55502 (2020).``` #

#####################################################################################################

+from pathlib import Path

+

import matplotlib.pyplot as plt

import numpy as np

+from platformdirs import user_data_dir

from waveorder import waveorder_reconstructor

from waveorder.visuals import jupyter_visuals

@@ -19,8 +22,8 @@

# ### Load simulated data

# Load simulations

-

-file_name = "./2D_QLIPP_simulation.npz"

+temp_dirpath = Path(user_data_dir("QLIPP_simulation"))

+file_name = temp_dirpath / "2D_QLIPP_simulation.npz"

array_loaded = np.load(file_name)

list_of_array_names = sorted(array_loaded)

diff --git a/examples/maintenance/README.md b/docs/examples/maintenance/README.md

similarity index 100%

rename from examples/maintenance/README.md

rename to docs/examples/maintenance/README.md

diff --git a/examples/models/README.md b/docs/examples/models/README.md

similarity index 100%

rename from examples/models/README.md

rename to docs/examples/models/README.md

diff --git a/examples/models/inplane_oriented_thick_pol3d.py b/docs/examples/models/inplane_oriented_thick_pol3d.py

similarity index 100%

rename from examples/models/inplane_oriented_thick_pol3d.py

rename to docs/examples/models/inplane_oriented_thick_pol3d.py

diff --git a/examples/models/inplane_oriented_thick_pol3d_vector.py b/docs/examples/models/inplane_oriented_thick_pol3d_vector.py

similarity index 100%

rename from examples/models/inplane_oriented_thick_pol3d_vector.py

rename to docs/examples/models/inplane_oriented_thick_pol3d_vector.py

diff --git a/examples/models/isotropic_fluorescent_thick_3d.py b/docs/examples/models/isotropic_fluorescent_thick_3d.py

similarity index 100%

rename from examples/models/isotropic_fluorescent_thick_3d.py

rename to docs/examples/models/isotropic_fluorescent_thick_3d.py

diff --git a/examples/models/isotropic_thin_3d.py b/docs/examples/models/isotropic_thin_3d.py

similarity index 100%

rename from examples/models/isotropic_thin_3d.py

rename to docs/examples/models/isotropic_thin_3d.py

diff --git a/examples/models/phase_thick_3d.py b/docs/examples/models/phase_thick_3d.py

similarity index 100%

rename from examples/models/phase_thick_3d.py

rename to docs/examples/models/phase_thick_3d.py

diff --git a/examples/visuals/plot_greens_tensor.py b/docs/examples/visuals/plot_greens_tensor.py

similarity index 100%

rename from examples/visuals/plot_greens_tensor.py

rename to docs/examples/visuals/plot_greens_tensor.py

diff --git a/examples/visuals/plot_vector_transfer_function_support.py b/docs/examples/visuals/plot_vector_transfer_function_support.py

similarity index 100%

rename from examples/visuals/plot_vector_transfer_function_support.py

rename to docs/examples/visuals/plot_vector_transfer_function_support.py

diff --git a/docs/images/HSV_legend.png b/docs/images/HSV_legend.png

new file mode 100644

index 00000000..5de940a4

Binary files /dev/null and b/docs/images/HSV_legend.png differ

diff --git a/docs/images/JCh_Color_legend.png b/docs/images/JCh_Color_legend.png

new file mode 100644

index 00000000..eb53f5fe

Binary files /dev/null and b/docs/images/JCh_Color_legend.png differ

diff --git a/docs/images/JCh_legend.png b/docs/images/JCh_legend.png

new file mode 100644

index 00000000..8af5efc9

Binary files /dev/null and b/docs/images/JCh_legend.png differ

diff --git a/docs/images/acq_finished.png b/docs/images/acq_finished.png

new file mode 100644

index 00000000..cb07c394

Binary files /dev/null and b/docs/images/acq_finished.png differ

diff --git a/docs/images/acquire_buttons.png b/docs/images/acquire_buttons.png

new file mode 100644

index 00000000..6e001d46

Binary files /dev/null and b/docs/images/acquire_buttons.png differ

diff --git a/docs/images/acquisition_settings.png b/docs/images/acquisition_settings.png

new file mode 100644

index 00000000..9a4877bd

Binary files /dev/null and b/docs/images/acquisition_settings.png differ

diff --git a/docs/images/advanced.png b/docs/images/advanced.png

new file mode 100644

index 00000000..ed658392

Binary files /dev/null and b/docs/images/advanced.png differ

diff --git a/docs/images/cap_bg.png b/docs/images/cap_bg.png

new file mode 100644

index 00000000..6dfbf70f

Binary files /dev/null and b/docs/images/cap_bg.png differ

diff --git a/docs/images/cli_structure.png b/docs/images/cli_structure.png

new file mode 100644

index 00000000..0ddb1dd1

Binary files /dev/null and b/docs/images/cli_structure.png differ

diff --git a/docs/images/comms_video_screenshot.png b/docs/images/comms_video_screenshot.png

new file mode 100644

index 00000000..98607aa3

Binary files /dev/null and b/docs/images/comms_video_screenshot.png differ

diff --git a/docs/images/connect_to_mm.png b/docs/images/connect_to_mm.png

new file mode 100644

index 00000000..8e8fe256

Binary files /dev/null and b/docs/images/connect_to_mm.png differ

diff --git a/docs/images/create_group.png b/docs/images/create_group.png

new file mode 100644

index 00000000..5719ff61

Binary files /dev/null and b/docs/images/create_group.png differ

diff --git a/docs/images/create_group_voltage.png b/docs/images/create_group_voltage.png

new file mode 100644

index 00000000..e97dfeed

Binary files /dev/null and b/docs/images/create_group_voltage.png differ

diff --git a/docs/images/create_preset.png b/docs/images/create_preset.png

new file mode 100644

index 00000000..6b78e125

Binary files /dev/null and b/docs/images/create_preset.png differ

diff --git a/docs/images/create_preset_voltage.png b/docs/images/create_preset_voltage.png

new file mode 100644

index 00000000..a6a648d0

Binary files /dev/null and b/docs/images/create_preset_voltage.png differ

diff --git a/docs/images/general_reconstruction_settings.png b/docs/images/general_reconstruction_settings.png

new file mode 100644

index 00000000..9da93ff0

Binary files /dev/null and b/docs/images/general_reconstruction_settings.png differ

diff --git a/docs/images/ideal_plot.png b/docs/images/ideal_plot.png

new file mode 100644

index 00000000..8cc03620

Binary files /dev/null and b/docs/images/ideal_plot.png differ

diff --git a/docs/images/modulation.png b/docs/images/modulation.png

new file mode 100644

index 00000000..6c5e44df

Binary files /dev/null and b/docs/images/modulation.png differ

diff --git a/docs/images/no-overlay.png b/docs/images/no-overlay.png

new file mode 100644

index 00000000..cbb860cf

Binary files /dev/null and b/docs/images/no-overlay.png differ

diff --git a/docs/images/overlay-demo.png b/docs/images/overlay-demo.png

new file mode 100644

index 00000000..da49c9eb

Binary files /dev/null and b/docs/images/overlay-demo.png differ

diff --git a/docs/images/overlay.png b/docs/images/overlay.png

new file mode 100644

index 00000000..69c71358

Binary files /dev/null and b/docs/images/overlay.png differ

diff --git a/docs/images/phase_reconstruction_settings.png b/docs/images/phase_reconstruction_settings.png

new file mode 100644

index 00000000..967a77d4

Binary files /dev/null and b/docs/images/phase_reconstruction_settings.png differ

diff --git a/docs/images/poincare_swing.svg b/docs/images/poincare_swing.svg

new file mode 100644

index 00000000..d114424b

--- /dev/null

+++ b/docs/images/poincare_swing.svg

@@ -0,0 +1,535 @@

+

+

+

+

diff --git a/docs/images/reconstruction_birefriengence.png b/docs/images/reconstruction_birefriengence.png

new file mode 100644

index 00000000..20ac93b4

Binary files /dev/null and b/docs/images/reconstruction_birefriengence.png differ

diff --git a/docs/images/reconstruction_data.png b/docs/images/reconstruction_data.png

new file mode 100644

index 00000000..5a85e570

Binary files /dev/null and b/docs/images/reconstruction_data.png differ

diff --git a/docs/images/reconstruction_data_info.png b/docs/images/reconstruction_data_info.png

new file mode 100644

index 00000000..9a38b441

Binary files /dev/null and b/docs/images/reconstruction_data_info.png differ

diff --git a/docs/images/reconstruction_models.png b/docs/images/reconstruction_models.png

new file mode 100644

index 00000000..400812f9

Binary files /dev/null and b/docs/images/reconstruction_models.png differ

diff --git a/docs/images/reconstruction_queue.png b/docs/images/reconstruction_queue.png

new file mode 100644

index 00000000..2a221241

Binary files /dev/null and b/docs/images/reconstruction_queue.png differ

diff --git a/docs/images/run_calib.png b/docs/images/run_calib.png

new file mode 100644

index 00000000..f7748333

Binary files /dev/null and b/docs/images/run_calib.png differ

diff --git a/docs/images/run_port.png b/docs/images/run_port.png

new file mode 100644

index 00000000..012cb1e8

Binary files /dev/null and b/docs/images/run_port.png differ

diff --git a/docs/images/waveorder_Fig1_Overview.png b/docs/images/waveorder_Fig1_Overview.png

new file mode 100644

index 00000000..b2244e8e

Binary files /dev/null and b/docs/images/waveorder_Fig1_Overview.png differ

diff --git a/docs/images/waveorder_plugin_logo.png b/docs/images/waveorder_plugin_logo.png

new file mode 100644

index 00000000..ef09b340

Binary files /dev/null and b/docs/images/waveorder_plugin_logo.png differ

diff --git a/docs/microscope-installation-guide.md b/docs/microscope-installation-guide.md

new file mode 100644

index 00000000..e396766d

--- /dev/null

+++ b/docs/microscope-installation-guide.md

@@ -0,0 +1,100 @@

+# Microscope Installation Guide

+

+This guide will walk through a complete waveorder installation consisting of:

+1. Checking prerequisites for compatibility.

+2. Installing Meadowlark DS5020 and liquid crystals.

+3. Installing and launching the latest stable version of `waveorder` via `pip`.

+4. Installing a compatible version of Micro-Manager and LC device drivers.

+5. Connecting `waveorder` to Micro-Manager via a `pycromanager` connection.

+

+## Compatibility Summary

+Before you start, confirm that your system is compatible with the following software:

+

+| Software | Version |

+| :--- | :--- |

+| `waveorder` | 0.4.0 |

+| OS | Windows 10 |

+| Micro-Manager version | [2023-04-26 (160 MB)](https://download.micro-manager.org/nightly/2.0/Windows/MMSetup_64bit_2.0.1_20230426.exe) |

+| Meadowlark drivers | [USB driver (70 kB)](https://github.com/mehta-lab/recOrder/releases/download/0.4.0/usbdrvd.dll) |

+| Meadowlark PC software version | 1.08 |

+| Meadowlark controller firmware version | >=1.04 |

+

+## Install Meadowlark DS5020 and liquid crystals

+

+Start by installing the Meadowlark DS5020 and liquid crystals using the software on the USB stick provided by Meadowlark. You will need to install the USB drivers and CellDrive5000.

+

+**Check your installation versions** by opening CellDrive5000 and double-clicking the Meadowlark Optics logo. Confirm that **"PC software version = 1.08" and "Controller firmware version >= 1.04".**

+

+If you need to change your PC software version, follow these steps:

+- From "Add and remove programs", remove CellDrive5000 and "National Instruments Software".

+- From "Device manager", open the "Meadowlark Optics" group, right click `mlousb`, click "Uninstall device", check "Delete the driver software for this device", and click "Uninstall". Uninstall `Meadowlark Optics D5020 LC Driver` following the same steps.

+- Using the USB stick provided by Meadowlark, reinstall the USB drivers and CellDrive5000.

+

+## Install waveorder software

+

+(Optional but recommended) Install [anaconda](https://www.anaconda.com/products/distribution) and create a virtual environment:

+```

+conda create -y -n waveorder python=3.10

+conda activate waveorder

+```

+

+Install `waveorder` with acquisition dependencies (napari and pycro-manager):

+```

+pip install waveorder[all]

+```

+Check your installation:

+```

+napari -w waveorder

+```

+This command should launch napari with the waveorder plugin (it may take 15 seconds on a fresh installation).

+

+## Install and configure Micro-Manager

+

+Download and install [`Micro-Manager 2.0` nightly build `20230426` (~150 MB)](https://download.micro-manager.org/nightly/2.0/Windows/MMSetup_64bit_2.0.1_20230426.exe).

+

+**Note:** We have tested waveorder with `20230426`, but most features will work with newer builds. We recommend testing a minimal installation with `20230426` before testing with a different nightly build or additional device drivers.

+

+Before launching Micro-Manager, download the [USB driver](https://github.com/mehta-lab/recOrder/releases/download/0.4.0rc0/usbdrvd.dll) and place this file into your Micro-Manager folder (likely `C:\Program Files\Micro-Manager` or similar).

+

+Launch Micro-Manager, open `Devices > Hardware Configuration Wizard...`, and add the `MeadowlarkLC` device to your configuration. Verify your installation by opening `Devices > Device Property Browser...` and checking that `MeadowlarkLC` properties appear.

+

+**Upgrading users:** you will need to re-add the Meadowlark device to your Micro-Manager configuration file, because the device driver's name has changed from `MeadowlarkLcOpenSource` to `MeadowlarkLC`.

+

+### Option 1 (recommended): Voltage-mode calibration installation