Automate docstring and unit test creation for Python projects using LLMs. Provides both a CLI and a web UI so teams can quickly increase documentation quality and test coverage with minimal manual work.

- Safe docstring insertion (injects only the docstring).

- Mirrors your project tree into `tests/` and creates pytest-style test files.

- CLI (Typer) + Web UI (Gradio).

- Modular LLM execution layer; swap or add models easily.

- Configurable: select models and specific functions/classes.

- Save developer time by auto-creating high-quality docstrings.

- Produce realistic unit tests that mirror your project structure.

- Keep your codebase well-documented and more maintainable, accelerating onboarding and code reviews.
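As a rough illustration of the "mirrored tree" idea in the list above, the sketch below maps a source file to a test file under `tests/`. The function name `mirrored_test_path` and the `test_` file-name prefix are assumptions made for this example, not the project's actual API.

```python
from pathlib import Path


def mirrored_test_path(source_file: Path, project_root: Path) -> Path:
    """Map a source file to a test file under <project_root>/tests/ (hypothetical helper)."""
    # Path of the source file relative to the project root, e.g. src/utils/math_ops.py
    relative = source_file.relative_to(project_root)
    # Keep the directory layout and prefix the file name with "test_" (an assumption).
    return project_root / "tests" / relative.parent / f"test_{relative.name}"
```

With this mapping, `src/utils/math_ops.py` would end up as `tests/src/utils/test_math_ops.py`, so the test tree can be navigated the same way as the source tree.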

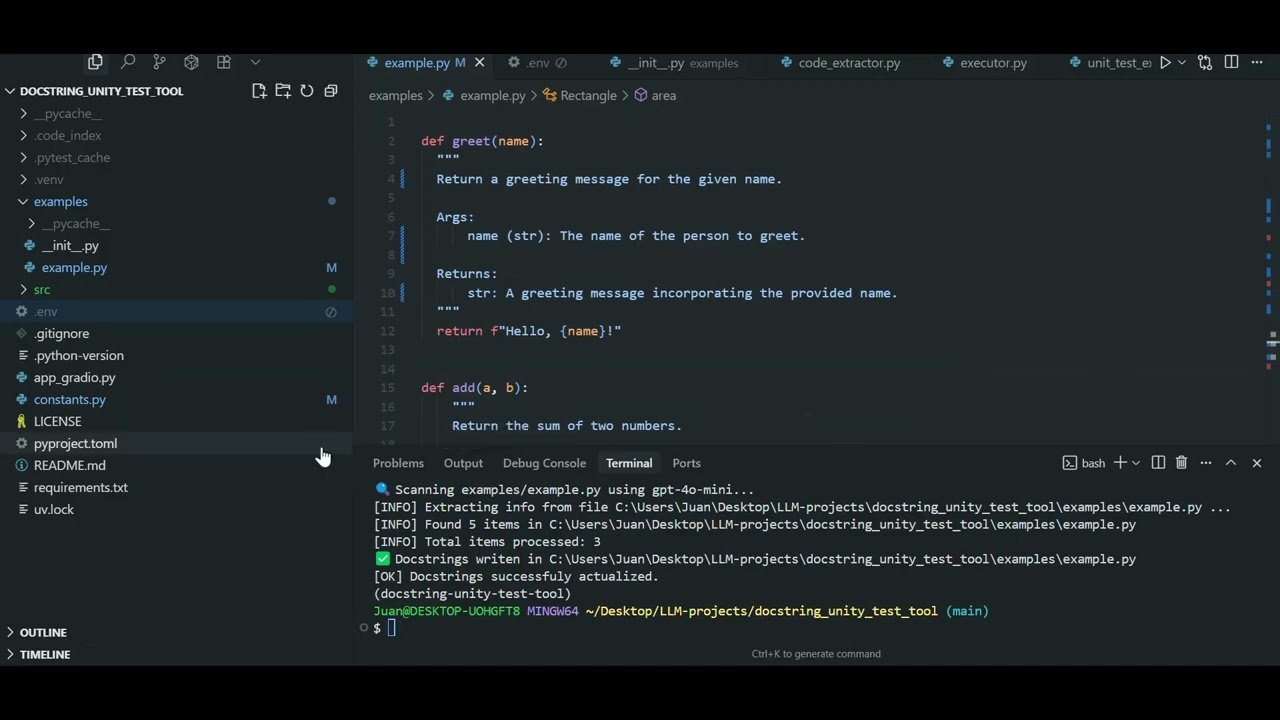

Screenshot of the Docstring Interface

Screenshot of the Test Interface

Click the image to watch a short demo of the CLI on YouTube

Run the web UI:

    python app_gradio.py

Generate docstrings from the CLI:

    python -m src.cli docstring generate <folder_path>

Generate unit tests:

    python -m src.cli unit_test generate <folder_path> <project_path>

Docstring command usage:

    python -m src.cli docstring generate <path> [options]

Options

| Option | Description |
|---|---|
| `<path>` | File or folder to scan. |
| `--model`, `-m` | Model to use (default: `gpt-4o-mini`). |
| `--names`, `-n` | Comma-separated list of names to process. |
| `--project`, `-p` | Root path of the project for indexing. |

Example

    python -m src.cli docstring generate src/utils -m openai/gpt-oss-120b

Unit test command usage:

    python -m src.cli unit_test generate <path> <project_path> [options]

Options

| Option | Description |
|---|---|
| `<path>` | File or folder to analyze. |
| `<project_path>` | Root path of the project. |
| `--model`, `-m` | Model to use (default: `openai/gpt-oss-120b`). |
| `--names`, `-n` | Specific function/class names. |

Example

    python -m src.cli unit_test generate src/utils /home/user/project -n add,subtract

This project was inspired by the Udemy course "AI Engineer Core Track: LLM Engineering, RAG, QLoRA, Agents" by Ed Donner.

It was developed as a portfolio project to demonstrate practical skills in LLM-based automation, agent design, and prompt engineering.

The implementation uses only the official OpenAI SDK, showcasing a low-dependency, clean, and extensible approach to integrating language models into developer tools.

Unix / macOS:

    python -m venv .venv
    source .venv/bin/activate
    pip install -r requirements.txt

Windows (PowerShell):

    python -m venv .venv
    .venv\Scripts\Activate.ps1
    pip install -r requirements.txt

If you prefer the `pyproject.toml` workflow, you can also use uv.

This tool supports multiple LLM providers. You'll need to set up API keys in your environment:

    OPENAI_API_KEY=your-key-here  # For gpt-4o-mini
    GROQ_API_KEY=your-key-here    # For Llama and GPT-OSS models

Supported models:

- OpenAI: `gpt-4o-mini`
- Groq: `meta-llama/llama-4-scout-17b-16e-instruct`, `openai/gpt-oss-20b`, `openai/gpt-oss-120b`

Select a model with the `--model` flag in the CLI or through environment variables.

The list of available models can be customized by modifying the `constants.py` file.
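Since the project states that it uses only the official OpenAI SDK, one plausible way to serve both providers is to pick the API key and base URL per model. The sketch below is an assumption about how that could look: the `MODEL_PROVIDERS` table and the `client_settings` helper are hypothetical, and the real model list lives in `constants.py`. The Groq URL is Groq's OpenAI-compatible endpoint.

```python
import os

# Groq exposes an OpenAI-compatible API at this base URL.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"

# Hypothetical registry for illustration; the project's real list is in constants.py.
MODEL_PROVIDERS = {
    "gpt-4o-mini": {"api_key_env": "OPENAI_API_KEY", "base_url": None},
    "meta-llama/llama-4-scout-17b-16e-instruct": {"api_key_env": "GROQ_API_KEY", "base_url": GROQ_BASE_URL},
    "openai/gpt-oss-20b": {"api_key_env": "GROQ_API_KEY", "base_url": GROQ_BASE_URL},
    "openai/gpt-oss-120b": {"api_key_env": "GROQ_API_KEY", "base_url": GROQ_BASE_URL},
}


def client_settings(model: str, env=None) -> dict:
    """Return the api_key and base_url to use for a model (hypothetical helper)."""
    env = os.environ if env is None else env
    if model not in MODEL_PROVIDERS:
        raise ValueError(f"Unsupported model: {model}")
    provider = MODEL_PROVIDERS[model]
    api_key = env.get(provider["api_key_env"])
    if not api_key:
        raise RuntimeError(f"Set {provider['api_key_env']} to use {model}")
    # base_url is None for OpenAI, which means "use the SDK default".
    return {"api_key": api_key, "base_url": provider["base_url"]}
```

The returned dictionary could then be passed as keyword arguments to the SDK client, for example `OpenAI(**client_settings("gpt-4o-mini"))`.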

- Scanner: parses the codebase and finds functions/classes.

- LLM agent: generates docstrings or test code using prompts.

- Writer: writes docstrings into each source file and generates mirrored test files under tests/.
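The scanner step above can be approximated with Python's standard `ast` module. This is a minimal sketch of the idea, not the project's scanner; the function name `find_definitions` and the tuple layout are assumptions for illustration.

```python
import ast


def find_definitions(source: str) -> list[tuple[str, str, int]]:
    """Return (kind, name, line) for every function and class in the source text."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            found.append(("function", node.name, node.lineno))
        elif isinstance(node, ast.ClassDef):
            found.append(("class", node.name, node.lineno))
    # ast.walk does not guarantee source order, so sort by line number.
    return sorted(found, key=lambda item: item[2])
```

Methods are reported as functions here; a fuller scanner would probably also record the enclosing class and the imports each item depends on.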

This project uses a modular agent architecture where agents process Python files one at a time and return structured items (list of DocstringOutput and UnitTestOutput objects) containing the generated content and metadata.

- Docstring Agent (single-agent flow)
  - Processes one file at a time using `CodeExtractorTool`.
  - For each function/class found, generates a docstring following PEP 257 conventions.
  - Returns a list of `DocstringOutput` objects with the generated content.

- Unit Test Pipeline (two-agent flow: Generator → Reviewer)
  - Generator agent:
    - Processes one source file at a time.
    - Returns `UnitTestOutput` objects with generated pytest functions.
    - Includes necessary imports and fixtures in metadata.
  - Reviewer agent:
    - Takes the generated file content.
    - Validates tests, imports, and assertions.
    - Returns fixed test code or a validation report.
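Once a docstring has been generated, it still has to be written into the file without touching any other code. The following sketch shows one conservative way to do that with the standard `ast` module. It is an illustration under stated assumptions, not the project's writer: `insert_docstring` is a hypothetical helper, and it deliberately leaves the source unchanged whenever the case is ambiguous.

```python
import ast
import textwrap


def insert_docstring(source: str, name: str, docstring: str) -> str:
    """Return source with a docstring added to the first function/class called name."""
    targets = (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)
    for node in ast.walk(ast.parse(source)):
        if not (isinstance(node, targets) and node.name == name):
            continue
        if ast.get_docstring(node) is not None:
            return source  # never overwrite an existing docstring
        first = node.body[0]
        # If the first statement is decorated, insert above its decorators.
        decorators = getattr(first, "decorator_list", [])
        start = min([first.lineno] + [d.lineno for d in decorators])
        lines = source.splitlines(keepends=True)
        indent = lines[start - 1][: first.col_offset]
        if indent.strip():
            return source  # body shares a line with the signature; leave untouched
        # Avoid closing the literal early if the text itself contains triple quotes.
        body = docstring.strip().replace('"""', "'''")
        lines.insert(start - 1, textwrap.indent(f'"""{body}"""\n', indent))
        return "".join(lines)
    return source  # name not found: nothing to do
```

Only one new block of lines is inserted, so a diff of the file shows the docstring and nothing else. Docstrings containing backslashes would need extra escaping, which this sketch does not handle.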

- IndexScanner finds Python files and extracts `CodeItem` objects.

- Agents process files one by one: `module.py → [CodeItem1, CodeItem2, ...] → Generator → Reviewer → Final Items`

- Review (only for docstring) and write.
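The Generator → Reviewer flow for a single file can be sketched as a small function that takes the two agents as plain callables. The name `run_unit_test_pipeline` and the use of strings for items and test code are simplifications assumed for this example; in the project the agents are LLM-backed and exchange structured objects.

```python
from typing import Callable, Iterable


def run_unit_test_pipeline(
    items: Iterable[str],
    generate: Callable[[str], str],
    review: Callable[[str], str],
) -> str:
    """Generate one test per item, then review the assembled file content."""
    # Generator step: one draft test per code item in the file.
    drafts = [generate(item) for item in items]
    # The reviewer receives the whole generated file, not individual tests.
    file_content = "\n\n".join(drafts)
    return review(file_content)
```

Passing the agents in as callables keeps the flow easy to test with stubs and makes it straightforward to give each step a different model.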

- Granular control: accept/reject changes per function

- Better context: each item includes its imports and dependencies

- Efficient processing: only changed files are reprocessed

- Safe execution: process one file at a time, handle errors gracefully

- Models are configurable via CLI flags or in the Gradio app.

- Each agent can use a different model (e.g., faster model for review)

- Processing can be parallelized across files (but serial within each file)
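The "parallel across files, serial within each file" note above could be realized with a thread pool, which suits LLM calls because they are I/O-bound. This is a sketch under assumptions: `process_project`, `process_file`, and the `handle` callable are hypothetical names, and the project may schedule work differently.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def process_file(items: list[str], handle: Callable[[str], str]) -> list[str]:
    """Process the items of one file strictly in order."""
    return [handle(item) for item in items]


def process_project(files: dict[str, list[str]], handle: Callable[[str], str], max_workers: int = 4):
    """Run files in parallel; return (results, errors), both keyed by file path."""
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {path: pool.submit(process_file, items, handle) for path, items in files.items()}
        for path, future in futures.items():
            try:
                results[path] = future.result()
            except Exception as exc:
                # A failure in one file is recorded and does not stop the others.
                errors[path] = exc
    return results, errors
```

Collecting errors per file instead of raising immediately matches the "handle errors gracefully" goal: one bad file does not abort the run.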

Run the tests:

    python -m pytest tests/

Project layout:

- `src/`: main implementation modules (CLI, generators, executors, agents).
- `examples/`: sample usage and quick demos.
- `tests/`: unit tests (auto-generated tests appear here).
- `app_gradio.py`: launches the Gradio web UI.

This project includes an incremental project indexer that scans your Python codebase and extracts "code items" (functions, classes, imports, etc.) for fast lookup and context. The indexer is implemented in src/core_base/indexer/project_indexer.py and provides an efficient workflow for large repositories.
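One common way to make an indexer incremental is to remember a content hash per file and rescan only the files whose hash changed. The sketch below illustrates that general technique; it is an assumption about the approach, and the actual logic in `src/core_base/indexer/project_indexer.py` may differ. The function name `changed_python_files` is hypothetical.

```python
import hashlib
from pathlib import Path


def changed_python_files(root: Path, known_hashes: dict[str, str]) -> list[Path]:
    """Return Python files under root whose content changed since the last call.

    known_hashes maps relative paths to SHA-256 digests and is updated in place.
    """
    changed = []
    for path in sorted(root.rglob("*.py")):
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        key = str(path.relative_to(root))
        if known_hashes.get(key) != digest:
            known_hashes[key] = digest
            changed.append(path)
    return changed
```

On the first call every file is reported as changed; later calls return only new or modified files, so the expensive extraction step can be skipped for everything else. Persisting `known_hashes` between runs (for example as JSON) would be needed for this to help across sessions.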

This project is licensed under the MIT License — see the LICENSE file in this repository for details.