This repository contains our solution for the "Image Matching Challenge 2024 - Hexathlon" Kaggle competition, where we achieved a 🥈silver medal.

We retrieve images from various 3D scene datasets using EfficientNet-B6 and EfficientNet-B7 models pre-trained on ImageNet as feature extractors. Images are ranked by the cosine distance between their feature vectors, and the top n most similar images are selected for each scene.
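The ranking step can be sketched as follows. This is a minimal illustration, assuming the global features have already been extracted (one vector per image) and that "top n" means each image keeps its n nearest neighbors as candidate pairs for matching. The function name `top_n_pairs` is hypothetical, and the toy 2-D vectors stand in for EfficientNet embeddings.

```python
import numpy as np


def top_n_pairs(features: np.ndarray, n: int) -> list[tuple[int, int]]:
    """Return unique image pairs (i, j), i < j, where j is among the n images
    closest to i by cosine distance (equivalently, highest cosine similarity)."""
    f = np.asarray(features, dtype=np.float64)
    # L2-normalise so that a dot product equals cosine similarity
    f = f / np.clip(np.linalg.norm(f, axis=1, keepdims=True), 1e-12, None)
    sim = f @ f.T
    np.fill_diagonal(sim, -np.inf)  # never pair an image with itself
    k = min(n, len(f) - 1)          # cannot select more neighbours than exist
    pairs = set()
    for i in range(len(f)):
        # argsort on -sim puts the most similar images first
        for j in np.argsort(-sim[i])[:k]:
            pairs.add((min(i, int(j)), max(i, int(j))))
    return sorted(pairs)


# Toy "embeddings": images 0 and 1 look alike, as do images 2 and 3
feats = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
pairs = top_n_pairs(feats, n=1)
print(pairs)  # [(0, 1), (2, 3)]
```

Setting n to the number of images minus one degenerates to exhaustive pairing; a smaller n trades some recall for far fewer pairs to match.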

For the retrieved images, we employ two parallel pipelines to extract and match keypoints:

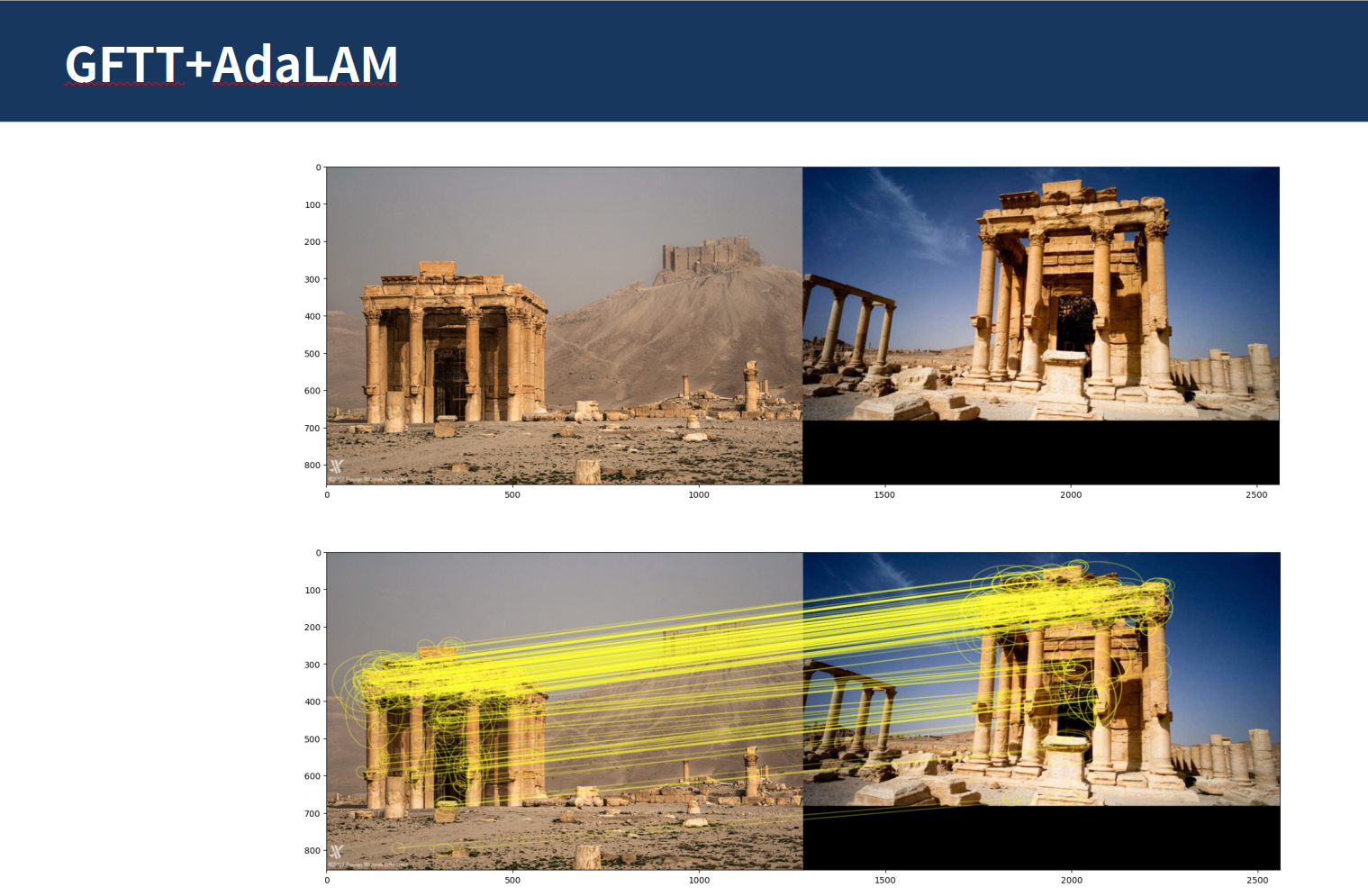

- Corner Detection with AdaLAM: We use Kornia's `CornerGFTT` (Good Features To Track) detector to extract keypoints and apply the AdaLAM algorithm for robust feature matching. Successful match pairs are stored.
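GFTT scores each pixel with the Shi-Tomasi response: the smaller eigenvalue of the local structure tensor built from image gradients. The NumPy sketch below is a simplified illustration of that response, not Kornia's implementation. It uses `np.gradient` and an unweighted box window where a library would typically use Sobel filters and Gaussian weighting, and it omits non-maximum suppression and the AdaLAM matching stage. The names `box_sum` and `gftt_response` are hypothetical.

```python
import numpy as np


def box_sum(a: np.ndarray, r: int) -> np.ndarray:
    """Sum of `a` over a (2r+1) x (2r+1) window centred on each pixel (edge-padded)."""
    h, w = a.shape
    p = np.pad(a, r, mode="edge")
    out = np.zeros_like(a)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out


def gftt_response(img: np.ndarray, r: int = 2) -> np.ndarray:
    """Shi-Tomasi response: smaller eigenvalue of the windowed structure tensor."""
    img = np.asarray(img, dtype=np.float64)
    gy, gx = np.gradient(img)  # derivatives along rows (y) and columns (x)
    sxx = box_sum(gx * gx, r)
    syy = box_sum(gy * gy, r)
    sxy = box_sum(gx * gy, r)
    # Closed-form smaller eigenvalue of [[sxx, sxy], [sxy, syy]]
    mean = 0.5 * (sxx + syy)
    radius = np.sqrt((0.5 * (sxx - syy)) ** 2 + sxy ** 2)
    return mean - radius


# Toy image: a bright square whose four corners are the only true corners
img = np.zeros((32, 32))
img[10:22, 10:22] = 1.0
resp = gftt_response(img)
y, x = np.unravel_index(np.argmax(resp), resp.shape)
print(y, x)
```

Straight edges score zero because only one gradient direction is present in the window, which is why this response favors corners over edges.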

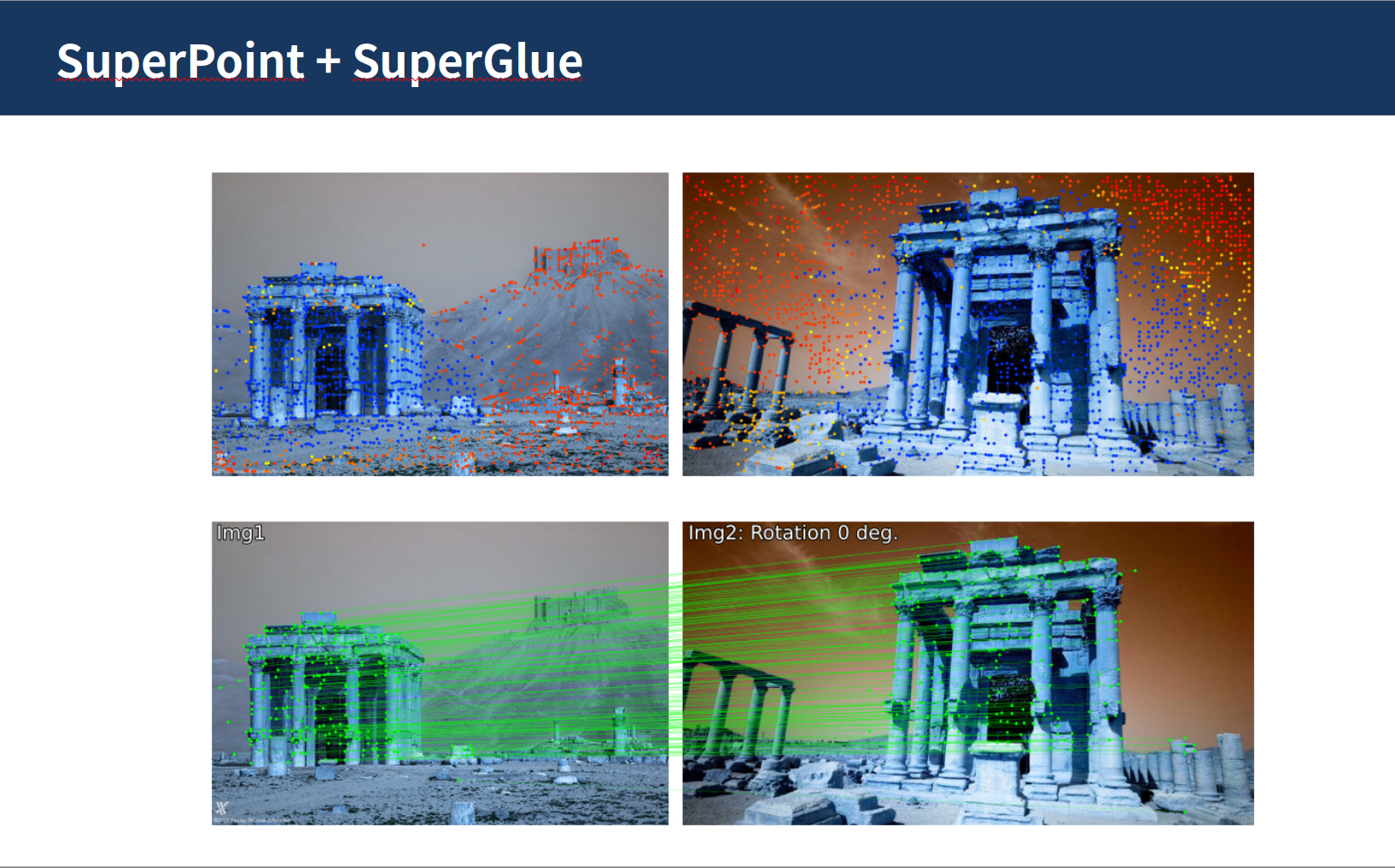

- SuperPoint & SuperGlue: We extract keypoints using SuperPoint and perform feature matching with SuperGlue, retaining the valid match pairs.
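SuperGlue turns a score matrix between two keypoint sets into a partial assignment. It appends a "dustbin" row and column for keypoints without a match, runs log-domain Sinkhorn iterations to solve an entropy-regularized optimal transport problem, and keeps mutual best matches above a confidence threshold. The NumPy sketch below covers only this final step, as we understand it from the SuperGlue paper. The graph neural network that produces the scores is replaced by scaled dot products of random toy descriptors, and `alpha` (the dustbin score, which SuperGlue learns), the scale factor 10, and `threshold` are illustrative values. All function names are hypothetical.

```python
import numpy as np


def logsumexp(a: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))


def log_optimal_transport(scores: np.ndarray, alpha: float, iters: int = 100) -> np.ndarray:
    """Log-domain Sinkhorn on an (M+1) x (N+1) matrix with a dustbin row/column."""
    m, n = scores.shape
    couplings = np.full((m + 1, n + 1), alpha, dtype=np.float64)
    couplings[:m, :n] = scores
    norm = -np.log(m + n)
    # Real keypoints get equal mass; each dustbin's mass equals the other image's keypoint count
    log_mu = np.concatenate([np.full(m, norm), [np.log(n) + norm]])
    log_nu = np.concatenate([np.full(n, norm), [np.log(m) + norm]])
    u, v = np.zeros(m + 1), np.zeros(n + 1)
    for _ in range(iters):
        u = log_mu - logsumexp(couplings + v[None, :], axis=1)
        v = log_nu - logsumexp(couplings + u[:, None], axis=0)
    # Rescale so that a confident match has probability close to 1
    return couplings + u[:, None] + v[None, :] - norm


def mutual_matches(log_p: np.ndarray, threshold: float = 0.2) -> list[tuple[int, int]]:
    """Keep pairs (i, j) that are each other's best match and exceed `threshold`."""
    p = np.exp(log_p[:-1, :-1])  # drop the dustbin row and column
    best0, best1 = p.argmax(axis=1), p.argmax(axis=0)
    return [(i, int(j)) for i, j in enumerate(best0) if best1[j] == i and p[i, j] > threshold]


rng = np.random.default_rng(0)
desc0 = rng.normal(size=(5, 64))
desc0 /= np.linalg.norm(desc0, axis=1, keepdims=True)
perm = rng.permutation(5)
extra = rng.normal(size=(1, 64))
desc1 = np.vstack([desc0[perm], extra / np.linalg.norm(extra)])  # column 5 has no true match
scores = 10.0 * desc0 @ desc1.T
matches = mutual_matches(log_optimal_transport(scores, alpha=1.0))
expected = sorted((int(perm[k]), k) for k in range(5))
print(matches == expected)
```

The dustbin lets the unmatched keypoint in the second image (column 5) send its mass to "no match" instead of being forced onto a wrong partner.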

We merge the successful match pairs from both pipelines, remove duplicates, and use PyCOLMAP to reconstruct the 3D scene and estimate the final camera poses (the rotation and position of each camera).
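A minimal sketch of the merge-and-deduplicate step, assuming each pipeline's matches for an image pair are expressed as pixel coordinates `[x0, y0, x1, y1]` (the two pipelines detect different keypoints, so index-based matches cannot be compared directly). Coordinates are rounded so that near-identical correspondences found by both pipelines collapse into one. The function name `merge_matches` and the rounding precision are hypothetical choices, not taken from this repository.

```python
import numpy as np


def merge_matches(*match_sets: np.ndarray, decimals: int = 1) -> np.ndarray:
    """Concatenate (N, 4) arrays of [x0, y0, x1, y1] correspondences and drop duplicates."""
    stacked = np.concatenate(
        [np.asarray(m, dtype=np.float64).reshape(-1, 4) for m in match_sets], axis=0
    )
    # Round first so that sub-pixel differences between pipelines do not keep duplicates alive
    return np.unique(np.round(stacked, decimals), axis=0)


adalam = np.array([[10.0, 20.0, 30.0, 40.0], [1.0, 2.0, 3.0, 4.0]])
superglue = np.array([[10.02, 20.0, 30.0, 40.01], [5.0, 6.0, 7.0, 8.0]])
merged = merge_matches(adalam, superglue)
print(merged.shape)  # (3, 4)
```

Rounding is a crude tolerance: two points that straddle a rounding boundary stay separate, so a radius-based check would be stricter. The merged correspondences would then be imported into a COLMAP database for reconstruction with PyCOLMAP (for example via `pycolmap.incremental_mapping`); that part depends on the database layout and is not sketched here.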

If you find our work helpful, please consider citing SuperGlue, on which our matching pipeline builds:

    @inproceedings{sarlin20superglue,
      author    = {Paul-Edouard Sarlin and
                   Daniel DeTone and
                   Tomasz Malisiewicz and
                   Andrew Rabinovich},
      title     = {{SuperGlue}: Learning Feature Matching with Graph Neural Networks},
      booktitle = {CVPR},
      year      = {2020},
      url       = {https://arxiv.org/abs/1911.11763}
    }

Thank you for your interest in our work!