
Fix the CI checks

- About the CI checks

- What to do if a check fails

- Fixing the checks

- Building the unit tests locally

- Running the tests

- Debugging the tests

When you submit a PR (pull request), it triggers several automated build workflows on GitHub Actions.

Each workflow consists of one or more jobs. For example, the vtests workflow has 4 jobs.

Workflow jobs are the CI (continuous integration) checks that you see at the bottom of each PR page.

Clicking Details next to a job reveals its build log, as well as a summary for the entire workflow.

The summary is where you'll find any downloadable artifacts (i.e. files) that were generated during the build.

| CI check | Confirms that... | Artifacts produced |
|---|---|---|
| Platform (Windows, macOS, Linux) | The code compiles on each platform. | Executables that testers can download and run on their machines. |
| Configuration (Without Qt) | The code compiles in a specific configuration (i.e. with Qt support disabled). | None. |
| utests (unit tests) | The program behaves as expected (e.g. when saving and loading files). | None. |
| vtests (visual tests) | Engraving hasn't changed. | Before and after images (only if changes are found). |
| codestyle | Your code follows the CodeGuidelines. | None. |

Here are some questions you need to consider to determine what action needs to be taken, if any.

Checks can fail for a variety of reasons, but some reasons are only possible for certain checks.

| Failure reason | Checks affected | Description of problem | Resolution |
|---|---|---|---|
| Script error | All | An error in the workflow YAML file or the shell scripts it calls. | Fix the script. |
| Compilation error | All except codestyle | The code failed to compile due to errors in the C++ code or CMake files. | Fix the code. |
| Runtime crash | utests, vtests | Your compiled C++ code was executed (e.g. to run tests) and it crashed. | Run tests locally in a debugger. Fix code. |
| Failed test | utests, vtests, codestyle | Tests were completed but your code didn't pass. | Fix code or update reference files. |
| Timeout | All | A check took too long and was timed out. Time limits can be specified in workflow files, and GitHub has overall limits. | Improve code or increase timeout. |
| Server error | All | A problem occurred on the build server that is beyond our control. Examples: power loss, build tool crash, network problem (e.g. a dependency failed to download, an artifact failed to upload). | Run check again. |

The most common errors are compilation errors and failed tests, but it's worth remembering that other errors are possible.

Failed tests can sometimes be desirable. For example, if the purpose of your PR was to change program behaviour or engraving, then you should expect the utests and/or vtests to fail. But did they fail in the way you were expecting? All other kinds of failure are undesirable.

Click the Details link next to the failed job to view its build log. The log shows the status for each step within the job.

The steps are different for each job. Here are the steps for the run_tests job within the utests workflow:

Seeing which step failed should narrow down the possible causes of failure.

For example, the check in the image failed at the Run tests step. This rules out any compilation errors or network problems, because nothing is compiled, downloaded, or uploaded during this step. It also wasn't a timeout because there are no timeouts set for this workflow, and the total elapsed time of 26 minutes is well below the GitHub limit. The most likely cause is failed tests, but we can't yet rule out a runtime crash, script error, or server error.

Expand the log for the failed step (it might be expanded already).

Look for error messages. There will probably be one right at the end of the failed section.

Note: The final error may not be the root cause of the problem, but rather a symptom of an earlier error.

In this case, the final error confirms it was failed tests that caused the check to fail. It even tells us that it was a MusicXML test that failed, but it doesn't tell us why that particular test failed.

If the final error message doesn't fully identify the problem, you must scroll up in the log until you find the earliest error message that is actually linked to the final, fatal error message. It can be helpful to search the log for likely terms.

| Failed step | Terms to search for |
|---|---|
| Build | error |
| Run tests | failed, failure, @@ (diff marker), or the name of the test that failed (e.g. musicxml) |
| Any other step | error, fail, unavailable, response, respond, timeout, timed out, etc. |

You can ignore warnings and main_thread errors, because these don't cause the check to fail.

If you get lots of unhelpful results, try a different search term.
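If you download the log or copy it into a text file, a small shell function can do the searching for you. This is a hypothetical helper, not part of MuseScore's tooling: the name search_log is invented, and its default pattern simply combines the terms from the table above. It prints matching lines with their line numbers and drops lines containing "warning:" or "main_thread".

```shell
# Hypothetical helper (not part of MuseScore): print lines from a saved CI log
# that match likely failure terms, prefixed with their line numbers.
# Usage: search_log LOG_FILE [TERM...]
search_log() {
  local log_file="$1"
  shift
  # Default terms, based on the table above; extra arguments replace them.
  local pattern="error|fail|unavailable|respon|timeout|timed out"
  if [ "$#" -gt 0 ]; then
    # Join the given terms with "|" to form one extended regular expression.
    pattern="$(IFS='|'; printf '%s' "$*")"
  fi
  # Case-insensitive search, then drop warnings and main_thread messages,
  # which don't cause a check to fail.
  grep -n -i -E -e "$pattern" "$log_file" | grep -v -i -E -e 'warning:|main_thread'
}
```

For example, search_log job-log.txt uses the default terms, while search_log job-log.txt failed failure @@ musicxml uses the terms suggested for the Run tests step (job-log.txt is a placeholder file name).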

If the utests or codestyle check fails due to failed tests, then you're looking for a region of the log that contains a diff, like this:

For the utests check, lines starting with + appear in the reference file but not in the test output. We were expecting your code to produce those lines, but it didn't.

For the codestyle check, lines starting with + were added by Uncrustify. We were expecting your code to be formatted that way, but it wasn't.

Now you should have a good idea what the problem is, but before you try to fix it, you should check other recent PRs on MuseScore's repo to see whether they are experiencing the same problem.

If other PRs have the exact same problem then the failure isn't your fault, so you can ignore it and wait for someone else to fix it, or try to fix it yourself in a separate PR.

Once the check is working again on other PRs, use these commands to re-run the checks on your current PR:

```shell
# Rebase your local branch to incorporate any fixes.
git pull --rebase upstream master

# Force push to update your PR and re-run the checks.
git push -f origin [your-branch-name]
```

Or in GitHub Desktop:

- Repository > Fetch.
  - Downloads latest code from GitHub, but doesn't do anything with that code yet.
- Branch > Rebase current branch and pick master.
  - "This will update [your-branch-name] by applying its N commits on top of master."
  - Click Rebase (and Begin rebase if it warns about force-push).
- Repository > Force push.
  - Updates the PR branch on GitHub.
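The command-line steps above (git pull --rebase upstream master) assume that your local clone has a remote called upstream pointing at MuseScore's main repository. If you're not sure, a helper like this one adds it only when it's missing. The function name is hypothetical and not part of MuseScore's tooling.

```shell
# Hypothetical helper: add MuseScore's main repository as the "upstream"
# remote, unless a remote with that name already exists.
# Run it from inside your local clone.
ensure_upstream_remote() {
  if git remote get-url upstream >/dev/null 2>&1; then
    echo "upstream already set to: $(git remote get-url upstream)"
  else
    git remote add upstream https://github.com/musescore/MuseScore.git
    echo "Added upstream remote"
  fi
}
```

Running it twice is harmless: the second call only reports the existing URL.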

Sometimes it's obvious that a problem isn't your fault because it occurs in an area of the code that you didn't touch. However, you should be aware that the code is very interconnected. For example, the above MusicXML errors were triggered by editing MuseScore's instrument data. On the face of it, MuseScore's instrument data has nothing to do with MusicXML, but it turns out that this data is included in the export, hence the tests failed.

Just because the check only fails for your PR doesn't necessarily mean it's your fault. It might be a server error, such as:

- Power loss

- Build tool crash

- Network problem (e.g. a dependency failed to download, an artifact failed to upload)

If you think it might be one of these things, then you could try running the check again immediately (i.e. without editing the code).

To re-run the build, use the commands above to rebase and force-push. If there isn't any new code in master to rebase on, use these commands instead:

```shell
# Reset timestamp on last commit to current time (causes SHA to change).
git commit --amend --no-edit --reset-author

# Force push to update the PR and re-run the checks.
git push -f origin [your-branch-name]
```

Or in GitHub Desktop:

- Under History, right-click on most recent commit > Amend commit.
  - If it warns about force push, click Begin amend.
  - Leave the commit title and description as they are (or change them if you want to).
  - Click Amend last commit.
- Repository > Force push.
  - Updates the PR branch on GitHub.

If it fails for you again, yet succeeds for other people, then it might not be a server error after all.

Having determined what the problem is, and that it was caused by your code, the next step is to try to fix it.

If the check failed because of a script or compilation error, copy the error message and paste it into a search engine to find out what to do. It may help to run the script or compilation on your local machine, as this will be faster than waiting for a CI build. Of course, this only works if you have access to the same platform (Windows, macOS, or Linux) as was used for the failing build.

If the tests crash then you'll almost certainly have to compile and run them locally on your machine.

Run the tests in a debugger and set a breakpoint near where the crash occurs, then step through the code line by line until you reach the crash and work out what caused it.

For the codestyle check, the build log should contain a diff. Lines starting with + were added by Uncrustify.

To fix this check, edit the diffed file. You want to add lines starting with + and remove lines starting with -.
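If the diff is long, you could save it to a file and apply it with patch instead of editing by hand. This is a sketch under assumptions: the diff must be in unified format with any log prefixes (timestamps, line numbers) removed, and apply_codestyle_diff is a hypothetical name. Because the lines starting with + are the ones Uncrustify wants, the diff is applied forwards.

```shell
# Hypothetical helper: apply a codestyle diff (copied from the CI log and
# saved to a file) to your source file. Assumes a unified diff in which "-"
# lines are your current code and "+" lines are Uncrustify's formatting.
# Usage: apply_codestyle_diff SOURCE_FILE DIFF_FILE
apply_codestyle_diff() {
  local source_file="$1"
  local diff_file="$2"
  # Forward patch: "-" lines are removed and "+" lines are added.
  patch "$source_file" < "$diff_file"
}
```

Review the result (e.g. with git diff) before committing, in case the saved diff was incomplete.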

For the vtests check, download the artifact from the summary page and extract the files inside. One of the files is an HTML file, which you can open in a web browser. It shows images of MuseScore's engraving with and without your changes applied.

If all of the changes are desirable, leave a comment on your PR to say so. If any of the changes are undesirable, you need to fix your code to prevent those changes from being produced, then run the check again.

The vtests check is sensitive to slight differences between before and after images, so it breaks quite regularly for reasons that have nothing to do with your code. It's always worth making sure the vtests check is working on other PRs before you spend too much time investigating why it is broken on yours.

For the utests check, the build log should contain a diff. Lines starting with + appear in the reference file but not in the test output, which means we were expecting your code to produce those lines, but it didn't.

If the test output is correct, modify the reference file. You want to remove lines starting with + and add lines starting with -.

If the reference file is correct, modify the C++ code. You want to generate lines starting with + and avoid generating lines starting with -.
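When the test output is correct and only the reference file needs updating, the reverse operation can be done with patch -R. This is a sketch under assumptions: the diff is saved to a file in unified format without log prefixes, its + lines come from the reference file as described above, and update_reference_from_diff is a hypothetical name.

```shell
# Hypothetical helper: update a reference file using a diff copied from the
# utests log. Assumes a unified diff where "+" lines come from the reference
# file and "-" lines come from the test output.
# Usage: update_reference_from_diff REFERENCE_FILE DIFF_FILE
update_reference_from_diff() {
  local reference_file="$1"
  local diff_file="$2"
  # -R applies the diff in reverse: "+" lines are removed and "-" lines are
  # added, so the reference file ends up matching the test output.
  patch -R "$reference_file" < "$diff_file"
}
```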

You may find it helpful to compile and run the tests locally on your machine. The CI server isn't particularly powerful, and it does a clean build each time, which takes quite a while to complete. Your local machine is probably more powerful, and you can do incremental builds where you only compile the files that changed since the last build. This makes local builds much faster than CI builds.

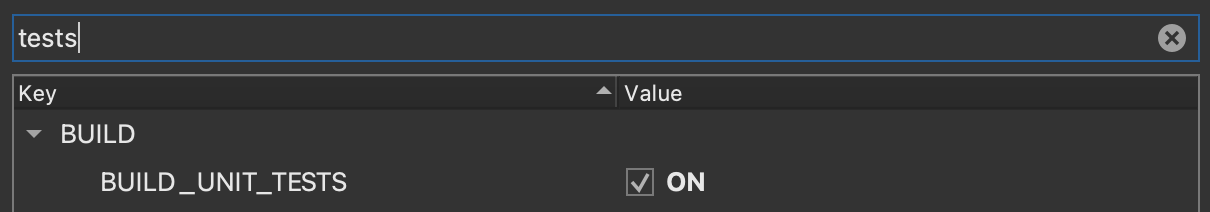

You need to make sure that the CMake option MUE_BUILD_UNIT_TESTS is set to ON (which is currently the default). The exact method of doing this depends on how you are compiling the program.

When compiling on the command line, you must pass the option -DMUE_BUILD_UNIT_TESTS=ON to CMake when you configure the build. If you are compiling with the build.cmake script, then you can do this by adding the following to build_overrides.cmake:

```cmake
# somewhere in file build_overrides.cmake
list(APPEND CONFIGURE_ARGS "-DMUE_BUILD_UNIT_TESTS=ON")
```

Delete the CMake cache (or run cmake -P build.cmake clean) and run the build again.

In Qt Creator, go to Projects > Build (for selected kit) and type "tests" in the Filter box.

Check the box next to MUE_BUILD_UNIT_TESTS and click "Apply Configuration Changes". Run the build again.
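Whichever method you use, you can check the value CMake actually stored by looking in the CMakeCache.txt file in your build directory, where cached options are written as NAME:TYPE=VALUE. The helper below is a hypothetical sketch; it only recognises the literal value ON.

```shell
# Hypothetical helper: succeed (exit status 0) if MUE_BUILD_UNIT_TESTS is ON
# in the CMake cache of the given build directory, fail otherwise.
# Usage: unit_tests_enabled BUILD_DIR
unit_tests_enabled() {
  local cache_file="$1/CMakeCache.txt"
  if [ ! -f "$cache_file" ]; then
    echo "No CMakeCache.txt in $1 (has CMake been run there yet?)" >&2
    return 1
  fi
  # Matches entries such as MUE_BUILD_UNIT_TESTS:BOOL=ON (value must be ON).
  grep -q -E '^MUE_BUILD_UNIT_TESTS:[A-Z]+=ON$' "$cache_file"
}
```

For example: unit_tests_enabled builds/YOUR_BUILD && echo "Unit tests are enabled".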

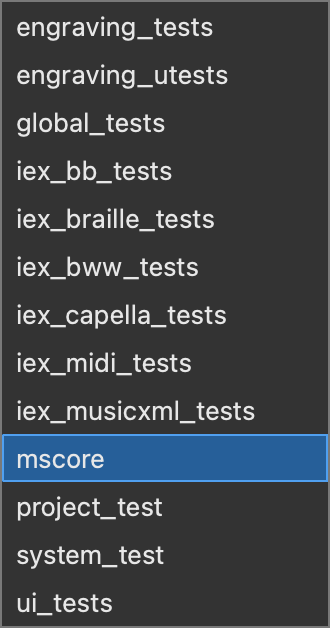

Each test is a separate executable created somewhere in the build directory, for example:

```
builds/YOUR_BUILD/src/engraving/tests/engraving_tests
builds/YOUR_BUILD/src/engraving/utests/engraving_utests
builds/YOUR_BUILD/src/framework/global/tests/global_tests
```

You can run all the tests at once by running the command ctest inside the build directory, like this:

```shell
cd builds/YOUR_BUILD
ctest -j CPUS --output-on-failure # CPUS: number of logical processor cores on your machine (an integer)
```

See ctest --help for details and other available options.
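Rather than typing the number of cores by hand, you can ask the system for it. The cpu_count function below is a hypothetical helper that assumes nproc (GNU coreutils, common on Linux) or sysctl -n hw.logicalcpu (macOS) is available, and falls back to 1 otherwise.

```shell
# Hypothetical helper: print the number of logical processor cores.
cpu_count() {
  if command -v nproc >/dev/null 2>&1; then
    nproc                        # Linux (GNU coreutils)
  elif sysctl -n hw.logicalcpu >/dev/null 2>&1; then
    sysctl -n hw.logicalcpu      # macOS
  else
    echo 1                       # unknown system: run tests one at a time
  fi
}
```

You would then run ctest -j "$(cpu_count)" --output-on-failure. Adding -R followed by a regular expression runs only the tests whose names match it.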

If you want to run the tests in a debugger, then you probably need to run each test executable individually inside the debugging application; otherwise, ctest will swallow any crashes or breakpoints you have set up. However, you could run ctest first and then debug only the tests that fail.
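On Linux, one way to do this from the command line is with GDB. The debug_test function below is a hypothetical convenience wrapper: it starts a test executable under gdb, runs it, and prints a backtrace when the program stops. If the test executables are built with GoogleTest (an assumption worth checking), you can pass --gtest_filter to limit which tests run. On macOS, lldb plays the same role.

```shell
# Hypothetical helper: run one test executable under GDB.
# Usage: debug_test TEST_EXECUTABLE [ARGS...]
debug_test() {
  local test_exe="$1"
  shift
  # -ex runs a GDB command at startup: "run" starts the program, and "bt"
  # prints a backtrace once it stops (e.g. at a crash or breakpoint).
  # --args passes everything after the executable to the test itself.
  gdb -ex run -ex bt --args "$test_exe" "$@"
}
```

For example: debug_test builds/YOUR_BUILD/src/engraving/tests/engraving_tests --gtest_filter='SomeTestSuite.*', where SomeTestSuite is a placeholder for the name of the failing test suite.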

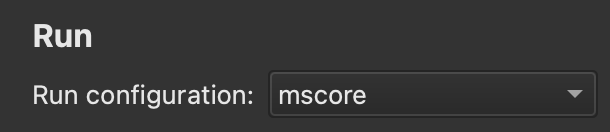

In Qt Creator it is easy to debug tests individually. Go to Projects > Run (for selected kit), and locate the dropdown "Run configuration".

If you configured with MUE_BUILD_UNIT_TESTS set to ON then the tests will be available in this dropdown.

Simply pick the test you want to run, then go to the Debug menu > Start Debugging > Start Debugging Without Deployment. Use the normal Start Debugging option instead if you haven't built the project yet, or if you've edited the code since the last build.
